Automated Annotation Workflow for Legal Case Relevance Using LLMs

Overview

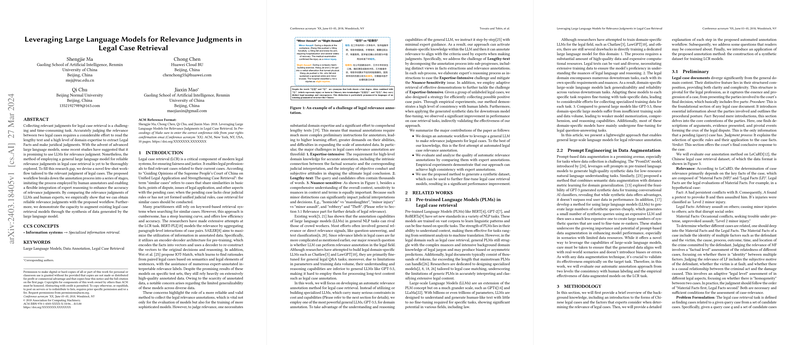

Recent advances in large language models (LLMs) have made it feasible to automate tasks that require close reading and multi-step reasoning. In legal informatics, a longstanding challenge is retrieving cases relevant to a legal analysis, a task that demands meticulous reading of lengthy documents as well as substantial domain expertise. Shengjie Ma et al. address this challenge with a tailored few-shot workflow that uses LLMs to automate relevance judgments in legal case retrieval. According to the paper, the resulting annotations are highly consistent with human expert judgments and improve the performance of legal case retrieval models.

Methodology

The core of the paper is an automated annotation workflow designed to harness the reasoning ability of general-purpose LLMs for assessing the relevance of legal cases. The workflow comprises four stages:

- Preliminary Legal Analysis: Engages legal experts to prepare detailed relevance indications by dissecting legal cases into Material and Legal Facts, which serve as a guiding framework for the LLM.

- Adaptive Demo-Matching (ADM): Uses BM25 to retrieve the most pertinent expert demonstrations for each case, optimizing the LLM's ability to mimic human expert reasoning.

- Fact Extraction (FE): Sequentially extracts Material and Legal Facts from the cases using step-by-step prompts, refined with selected demonstrations.

- Fact Annotation (FA): Evaluates the relevance of the extracted facts between pairs of cases, again guided by expert reasoning encapsulated in the demonstrations.
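The demo-matching step can be illustrated with a small BM25 ranker. This is a minimal sketch, not the authors' implementation: the class name `BM25`, the whitespace `tokenize` helper, the parameter values `k1=1.5` and `b=0.75`, and the English sample demonstrations are all assumptions made for illustration. LeCaRD cases are in Chinese, so a real pipeline would need a proper word segmenter in place of whitespace splitting.

```python
import math
from collections import Counter


def tokenize(text):
    # Assumption: whitespace tokenization, adequate only for this English toy data.
    return text.lower().split()


class BM25:
    """Plain Okapi BM25 over a small pool of expert demonstrations."""

    def __init__(self, docs, k1=1.5, b=0.75):
        self.k1, self.b = k1, b
        self.docs = [tokenize(d) for d in docs]
        self.tfs = [Counter(d) for d in self.docs]
        self.avgdl = sum(len(d) for d in self.docs) / len(self.docs)
        n = len(self.docs)
        # Document frequency: number of demonstrations containing each term.
        df = Counter(term for d in self.docs for term in set(d))
        # Smoothed IDF that stays positive even for very common terms.
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query, i):
        tf, dl = self.tfs[i], len(self.docs[i])
        total = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            f = tf[term]
            # Length normalization: longer demonstrations are penalized via b.
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / norm
        return total

    def top_k(self, query, k=1):
        # Indices of the k best-matching demonstrations, best first.
        order = sorted(range(len(self.docs)), key=lambda i: self.score(query, i), reverse=True)
        return order[:k]


# Hypothetical expert demonstrations (illustrative text, not from the paper).
demos = [
    "defendant stole a vehicle from a parking lot",
    "contract dispute over late delivery of goods",
    "assault causing bodily injury during a fight",
]
ranker = BM25(demos)
best = ranker.top_k("the defendant stole a car from the lot", k=2)
```

The demonstrations selected this way would then be placed in the prompt as few-shot examples for the case being annotated.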

This multi-stage process mirrors the complex reasoning and annotation tasks performed by human experts, enabling the LLM to generate annotations that align well with expert judgments.
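The data flow between the Fact Extraction and Fact Annotation stages might look roughly like the sketch below. Everything here is hypothetical: the `llm` argument stands for any callable that maps a prompt string to a completion string, and the function names and prompt wording are placeholders rather than the prompts used in the paper. The sketch only shows that legal facts are extracted after, and conditioned on, the material facts, and that relevance is judged per fact type for a pair of cases.

```python
from typing import Callable, Dict, Sequence

# Assumption: the model is any function from prompt text to completion text.
LLM = Callable[[str], str]


def build_prompt(instruction: str, demos: Sequence[str], body: str) -> str:
    # Few-shot prompt: instruction, matched expert demonstrations, then the input.
    demo_block = "\n\n".join(f"Example {i + 1}:\n{d}" for i, d in enumerate(demos))
    return f"{instruction}\n\n{demo_block}\n\nInput:\n{body}\n\nAnswer:"


def extract_facts(llm: LLM, case_text: str, demos: Sequence[str]) -> Dict[str, str]:
    # Step 1: material facts from the raw case text.
    material = llm(build_prompt("Extract the material facts.", demos, case_text))
    # Step 2: legal facts, conditioned on the material facts from step 1.
    legal_input = f"{case_text}\n\nMaterial facts:\n{material}"
    legal = llm(build_prompt("Extract the legal facts.", demos, legal_input))
    return {"material": material, "legal": legal}


def annotate_pair(llm: LLM, query: Dict[str, str], candidate: Dict[str, str],
                  demos: Sequence[str]) -> Dict[str, str]:
    # One relevance judgment per fact type for a (query, candidate) pair.
    labels = {}
    for kind in ("material", "legal"):
        body = f"Query case {kind} facts:\n{query[kind]}\n\nCandidate case {kind} facts:\n{candidate[kind]}"
        labels[kind] = llm(build_prompt(f"Judge the relevance of the {kind} facts.", demos, body))
    return labels


# Stand-in model that records prompts, so the flow can be checked without an API.
calls = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"out{len(calls)}"


facts = extract_facts(fake_llm, "case text", ["demo"])
labels = annotate_pair(fake_llm, facts, facts, ["demo"])
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM provider and makes the stage boundaries easy to test.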

Experimental Results

The proposed annotation workflow was validated empirically on the Chinese Legal Case Retrieval Dataset (LeCaRD). The LLM-generated relevance judgments showed high reliability and consistency with human annotations, as measured by Cohen's kappa across different temperature settings.
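For readers unfamiliar with the metric, Cohen's kappa compares the observed agreement between two annotators with the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it from scratch. The function name `cohens_kappa` and the toy labels are illustrative assumptions and do not reproduce any figures from the paper.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both annotators.
    p_o = sum(x == y for x, y in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's marginal label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(count_a[l] * count_b[l] for l in set(labels_a) | set(labels_b)) / (n * n)
    if p_e == 1.0:
        # Both annotators used a single identical label; agreement is trivially perfect.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Toy example: human labels vs. LLM labels on four case pairs (made-up data).
human = [1, 1, 0, 0]
model = [1, 1, 0, 1]
kappa = cohens_kappa(human, model)  # p_o = 0.75, p_e = 0.5, so kappa = 0.5
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance.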

The experiments further demonstrated the practical utility of the synthesized annotations in augmenting legal case retrieval models. When leveraged for fine-tuning, these annotations led to significant improvements in the performance of baseline retrieval models, suggesting that the method can effectively generate valuable synthetic data for model training.

Implications and Future Directions

The results underscore the potential of general-purpose LLMs for domain-specific annotation tasks, particularly in fields such as law that require nuanced professional knowledge. The proposed methodology supports the scalable generation of high-quality annotated data and promotes a deeper integration of AI into legal informatics. By automating parts of the legal analysis process, the approach could make legal case retrieval systems more efficient and more accessible.

Looking forward, the workflow appears adaptable to other legal domains and jurisdictions, provided that a small amount of expert guidance is available to tailor the process. It also raises the possibility of extending automated relevance annotation to other complex legal tasks, which could bring more sophisticated AI capabilities into legal research and practice.

In conclusion, the work of Shengjie Ma and colleagues represents a critical step towards realizing the full potential of LLMs in automating and enhancing legal case retrieval, offering a scalable solution for generating annotated legal data and improving the efficacy of legal retrieval systems. Future research could explore the extension of this workflow to other complex domains, further unlocking the capabilities of LLMs in professional and academic fields.