COIG-CQIA: A High-Quality Chinese Instruction Tuning Dataset for Improved Human-Like Interaction

Introduction to COIG-CQIA

The evolution of large language models (LLMs) has greatly improved machine understanding and response generation, especially for instruction-following tasks. However, existing instruction-tuning resources are predominantly English, leaving few high-quality datasets for Chinese instruction fine-tuning. This gap hinders the development of models that can understand and execute Chinese instructions with high fidelity. COIG-CQIA addresses it by offering a comprehensive corpus for Chinese instruction tuning, curated from diverse, authentic internet sources and processed to meet high quality standards.

Dataset Curation

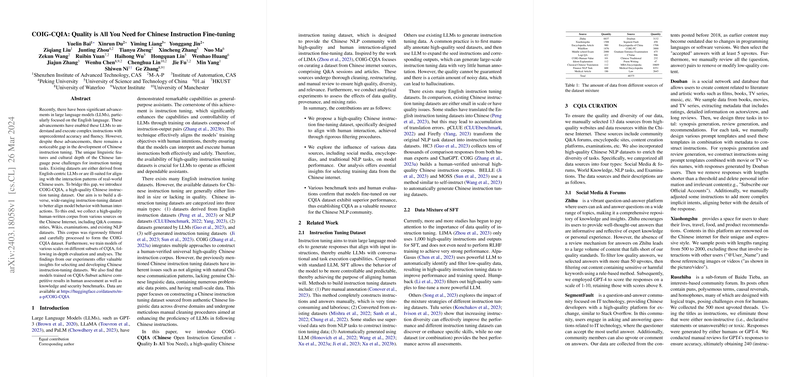

COIG-CQIA stands out due to its methodical curation process and the wealth of sources it taps into for data collection. The dataset is derived from a mixture of social media platforms, Q&A communities, encyclopedias, exams, and existing NLP datasets, ensuring a broad coverage that spans both formal and informal usage, as well as a variety of domains such as STEM, humanities, and general knowledge.

The compilation process involved rigorous steps to ensure the quality and relevance of the data:

- Filtering and Processing: Both automated and manual review were used to filter out low-quality content and irrelevant information and to keep the data clean.

- Diverse Sources: Data was collected from over 22 unique sources, including prominent Chinese websites and forums, giving the dataset a rich diversity of instruction-response pairs.
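The automated part of such a filtering step can be illustrated with a minimal sketch. The rules COIG-CQIA actually applied are not described here, so everything below is an assumption made for illustration: the `instruction` and `output` field names, the two thresholds, and the heuristics themselves (minimum response length, share of Chinese characters, exact-duplicate removal).

```python
import re

# Hypothetical thresholds; the actual COIG-CQIA filtering rules are not given here.
MIN_RESPONSE_CHARS = 10
MIN_CJK_RATIO = 0.3

# Matches characters in the CJK Unified Ideographs block.
CJK_PATTERN = re.compile(r"[\u4e00-\u9fff]")


def cjk_ratio(text: str) -> float:
    """Return the fraction of characters that are CJK ideographs."""
    if not text:
        return 0.0
    return len(CJK_PATTERN.findall(text)) / len(text)


def filter_pairs(pairs):
    """Keep pairs that pass simple heuristics and drop exact duplicates."""
    seen = set()
    kept = []
    for pair in pairs:
        instruction = pair["instruction"].strip()
        response = pair["output"].strip()
        # Drop empty instructions and very short responses.
        if not instruction or len(response) < MIN_RESPONSE_CHARS:
            continue
        # Drop pairs that are mostly non-Chinese text.
        if cjk_ratio(instruction + response) < MIN_CJK_RATIO:
            continue
        # Drop exact duplicates of pairs already kept.
        key = (instruction, response)
        if key in seen:
            continue
        seen.add(key)
        kept.append({"instruction": instruction, "output": response})
    return kept
```

Heuristics like these only catch the easiest cases. Pairs that pass would still need the manual review mentioned above.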

Dataset Composition and Characteristics

- Task Variety: COIG-CQIA encompasses a wide array of task types, from question answering and knowledge extraction to generation tasks, facilitating comprehensive model training.

- Volume and Diversity: The dataset contains 48,375 instances spanning many sources and task types. This variety is crucial for training models to understand instructions and generate a wide range of responses.
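One way to inspect this kind of composition is to count instances per source or per task type. The sketch below assumes each record is a dictionary with hypothetical `source` and `task_type` fields. These names are illustrative and may not match the released dataset's schema.

```python
from collections import Counter


def summarize(records, field):
    """Count records per value of `field` and return (count, share) per value.

    Records missing the field are grouped under "unknown".
    """
    counts = Counter(record.get(field, "unknown") for record in records)
    total = sum(counts.values())
    # most_common() orders values from most to least frequent.
    return {value: (n, n / total) for value, n in counts.most_common()}


if __name__ == "__main__":
    # Toy records with made-up values, for illustration only.
    sample = [
        {"source": "qa_community", "task_type": "question answering"},
        {"source": "qa_community", "task_type": "generation"},
        {"source": "encyclopedia", "task_type": "question answering"},
        {"source": "exam", "task_type": "question answering"},
    ]
    for field in ("source", "task_type"):
        print(field)
        for value, (n, share) in summarize(sample, field).items():
            print(f"  {value}: {n} ({share:.0%})")
```

A summary like this makes it easy to spot a single source or task type that dominates the mix.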

Data Analysis and Evaluation

The dataset was analyzed for diversity, quality, and coverage. The influence of data from the various source platforms on model performance was also evaluated across different benchmarks, demonstrating the dataset's effectiveness in improving models' ability to understand and execute Chinese instructions accurately.

Experimental Findings and Implications

Models trained on the COIG-CQIA dataset showcased competitive results in both human assessment and benchmark evaluations, particularly highlighting its efficacy in tasks requiring deep understanding and complex response generation. This finding underscores COIG-CQIA's potential to significantly contribute to advancing the development of instruction-tuned LLMs capable of comprehensively understanding and interacting in Chinese.

Conclusion and Future Directions

The development of COIG-CQIA is a substantial step towards closing the resource gap for Chinese instruction tuning. Its curation from a wide range of sources, coupled with meticulous cleaning and processing, ensures high quality and diversity, making it a valuable asset for the Chinese NLP community.

The dataset’s release invites further research and exploration into instruction tuning for Chinese LLMs, with the potential to pave the way for models that demonstrate improved alignment with human interactions in Chinese. As the NLP field continues to evolve, datasets like COIG-CQIA will be instrumental in fostering advancements that bring us closer to achieving truly human-like interaction capabilities in AI systems.