This survey paper provides a detailed overview of fairness and bias in AI and Machine Learning (ML) models. It explores various definitions of fairness, categorizes different types of biases, investigates instances of biased AI across multiple application domains, and examines techniques to mitigate bias in AI models. The paper also discusses the impact of biased models on user experience and the ethical considerations involved in developing and deploying such models.

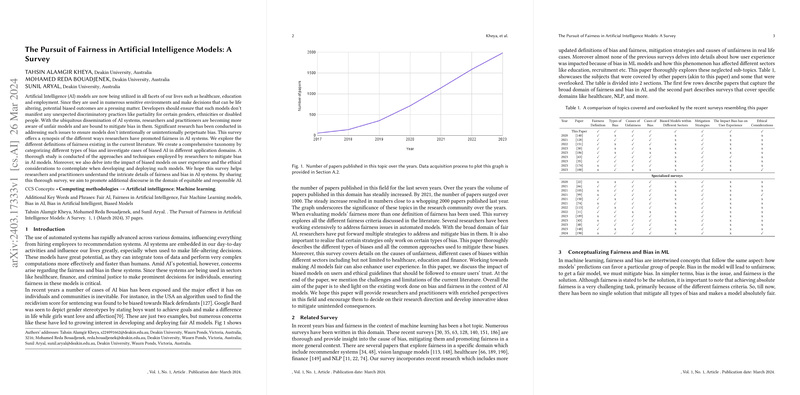

The authors begin by emphasizing that AI systems are increasingly used in sensitive areas. This makes it crucial to ensure that these models do not exhibit unexpected discriminatory behavior. The paper notes that although AI models can process vast amounts of data and perform complex computations, concerns about their fairness and bias have grown. The number of research publications in this area has increased significantly, which underscores the importance of fairness to the AI research community. The paper distinguishes itself from other surveys by incorporating recent research, updated definitions of bias and fairness, mitigation strategies, causes of unfairness in real-world scenarios, and a discussion of how bias affects user experience across various sectors.

The paper categorizes fairness definitions in machine learning into several groups, including group fairness, individual fairness, separation metrics, intersectional fairness, treatment equality, sufficiency metrics, and causal-based fairness.

- Group fairness is further divided into:

  - Demographic Parity: This ensures that the model's predictions are independent of sensitive attributes such as gender or ethnicity. It can be formalized as $P(\hat{Y}=1 \mid A=0) = P(\hat{Y}=1 \mid A=1)$, where $\hat{Y}$ is the predicted outcome and $A$ is the sensitive attribute.

  - Conditional Statistical Parity: This requires the outcomes for different sensitive groups to be the same after conditioning on an additional (legitimate) feature. It can be formalized as $P(\hat{Y}=1 \mid L=l, A=0) = P(\hat{Y}=1 \mid L=l, A=1)$, where $L$ is the additional feature and $l$ is the value of that feature.

- Individual fairness is divided into:

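  - Fairness through Unawareness: This ensures the model does not use any sensitive attributes when making decisions. The model is a mapping $f: X \rightarrow \hat{Y}$, where the input features $X$ exclude any sensitive attribute $A$.

  - Fairness through Awareness: This explicitly considers sensitive attributes during model training and requires that similar individuals be treated similarly. The difference between the output distributions assigned to two candidates $x$ and $y$ should be no greater than the distance between the candidates themselves: $D(M(x), M(y)) \le d(x, y)$. Here $d$ is a metric that measures how similar the candidates are (a small $d$ means similar candidates), $D$ is a distance between output distributions, and $M$ is the model.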

- Separation Metrics include:

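  - Predictive Equality: This requires the False Positive Rate (FPR) to be equal across protected and unprotected groups. It can be formalized as $P(\hat{Y}=1 \mid Y=0, A=0) = P(\hat{Y}=1 \mid Y=0, A=1)$, where $Y$ is the actual outcome and $A$ is the sensitive attribute.

  - Equal Opportunity: This ensures that individuals who truly belong to the positive class have an equal chance of receiving a positive prediction regardless of their group, i.e., equal True Positive Rates (TPR). It can be formalized as $P(\hat{Y}=1 \mid Y=1, A=0) = P(\hat{Y}=1 \mid Y=1, A=1)$.

  - Balance for the Negative Class: This ensures that the average score the model assigns to individuals in the negative class is the same across groups. It is formalized as $\mathbb{E}[S \mid Y=0, A=0] = \mathbb{E}[S \mid Y=0, A=1]$, where $S$ is the predicted probability score and $\mathbb{E}[\cdot]$ denotes the average (expected) value.

  - Balance for the Positive Class: This ensures that the average score the model assigns to individuals in the positive class is the same across groups. It is formalized as $\mathbb{E}[S \mid Y=1, A=0] = \mathbb{E}[S \mid Y=1, A=1]$.

  - Equalized Odds: This states that the prediction $\hat{Y}$ and the sensitive attribute $A$ are independent given the actual outcome $Y$ ($\hat{Y} \perp A \mid Y$). This can be expressed as $P(\hat{Y}=1 \mid Y=y, A=0) = P(\hat{Y}=1 \mid Y=y, A=1)$ for $y \in \{0, 1\}$. In other words, both the TPR and the FPR must be equal across groups.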

- Intersectional Fairness acknowledges discrimination arising from overlapping identities, such as the combination of gender and ethnicity. This kind of discrimination may not be visible when each sensitive attribute is examined separately.

- Treatment Equality aims to achieve equal ratios of false negatives to false positives for protected and unprotected groups. It can be formalized as $\frac{FN_1}{FP_1} = \frac{FN_2}{FP_2}$, where the subscripts 1 and 2 denote the protected and unprotected groups, respectively.

- Sufficiency Metrics ensure that, given the model's score or prediction, the actual outcome is independent of the sensitive attribute. As a result, the model is equally calibrated for different sensitive groups:

  - Equal Calibration: This ensures that for a given probability score $s$, people in both protected and unprotected groups have an equal probability of belonging to the positive class. It can be formalized as $P(Y=1 \mid S=s, A=0) = P(Y=1 \mid S=s, A=1)$.

  - Predictive Parity: This ensures that the Positive Predictive Values (PPV) for protected and unprotected groups are equal. It can be formalized as $P(Y=1 \mid \hat{Y}=1, A=0) = P(Y=1 \mid \hat{Y}=1, A=1)$.

  - Conditional Use Accuracy Equality: This ensures that both the PPV and the Negative Predictive Value (NPV) are the same regardless of the sensitive group. It is formalized as $P(Y=1 \mid \hat{Y}=1, A=0) = P(Y=1 \mid \hat{Y}=1, A=1)$ and $P(Y=0 \mid \hat{Y}=0, A=0) = P(Y=0 \mid \hat{Y}=0, A=1)$.

- Causal-based Fairness utilizes causal reasoning to identify and address unfairness:

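  - Counterfactual Fairness: This ensures that the model's prediction for an individual would stay the same in a counterfactual world in which only their protected attribute had been different. It can be formalized as $P(\hat{Y}_{A \leftarrow a}(U) = y \mid X = x, A = a) = P(\hat{Y}_{A \leftarrow a'}(U) = y \mid X = x, A = a)$ for every outcome $y$ and every value $a'$ that $A$ can take. Here $A$ is the protected attribute, $U$ is the set of latent background variables, and $X$ denotes the remaining attributes.

  - Unresolved Discrimination: This arises when a sensitive attribute influences the predicted outcome along a causal path that is not blocked by a resolving variable. A resolving variable is one through which the attribute's influence is considered acceptable (non-discriminatory).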
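
Most of the statistical criteria above (demographic parity, the separation metrics, predictive parity, conditional use accuracy equality and treatment equality) are functions of a per-group confusion matrix. They can therefore be checked on a labelled evaluation set. The following is a minimal, hypothetical Python sketch, not code from the paper. It assumes binary labels, binary predictions and a binary sensitive attribute $A \in \{0, 1\}$. The names `group_rates` and `fairness_gaps` are illustrative. For each criterion it reports the absolute between-group difference, where 0 means the criterion holds exactly on that data.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class GroupRates:
    """Per-group statistics behind the group, separation and sufficiency criteria."""
    selection_rate: float  # P(Y_hat=1 | A=a): demographic parity
    tpr: float             # P(Y_hat=1 | Y=1, A=a): equal opportunity
    fpr: float             # P(Y_hat=1 | Y=0, A=a): predictive equality
    ppv: float             # P(Y=1 | Y_hat=1, A=a): predictive parity
    npv: float             # P(Y=0 | Y_hat=0, A=a): with PPV, conditional use accuracy equality
    fn_fp_ratio: float     # FN / FP: treatment equality


def _ratio(num: int, den: int) -> float:
    # NaN signals an undefined rate (e.g. a group with no false positives).
    return num / den if den else math.nan


def _worst(*gaps: float) -> float:
    # A combined criterion is undefined (NaN) if any of its component gaps is.
    return math.nan if any(math.isnan(g) for g in gaps) else max(gaps)


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[int], group: int) -> GroupRates:
    """Confusion-matrix rates for the examples whose sensitive attribute equals `group`."""
    tp = fp = tn = fn = 0
    for y, y_hat, a in zip(y_true, y_pred, groups):
        if a != group:
            continue
        if y_hat == 1:
            if y == 1:
                tp += 1
            else:
                fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    n = tp + fp + tn + fn
    return GroupRates(
        selection_rate=_ratio(tp + fp, n),
        tpr=_ratio(tp, tp + fn),
        fpr=_ratio(fp, fp + tn),
        ppv=_ratio(tp, tp + fp),
        npv=_ratio(tn, tn + fn),
        fn_fp_ratio=_ratio(fn, fp),
    )


def fairness_gaps(y_true, y_pred, groups) -> dict:
    """Absolute between-group gaps for a binary sensitive attribute A in {0, 1}."""
    r0 = group_rates(y_true, y_pred, groups, 0)
    r1 = group_rates(y_true, y_pred, groups, 1)
    tpr_gap = abs(r0.tpr - r1.tpr)
    fpr_gap = abs(r0.fpr - r1.fpr)
    ppv_gap = abs(r0.ppv - r1.ppv)
    return {
        "demographic_parity": abs(r0.selection_rate - r1.selection_rate),
        "equal_opportunity": tpr_gap,
        "predictive_equality": fpr_gap,
        "equalized_odds": _worst(tpr_gap, fpr_gap),  # both TPR and FPR must match
        "predictive_parity": ppv_gap,
        "conditional_use_accuracy_equality": _worst(ppv_gap, abs(r0.npv - r1.npv)),
        "treatment_equality": abs(r0.fn_fp_ratio - r1.fn_fp_ratio),
    }


# Hypothetical toy data: four examples per group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = [0, 0, 0, 0, 1, 1, 1, 1]
gaps = fairness_gaps(y_true, y_pred, groups)
```

On this toy data both groups receive positive predictions at the same rate, so demographic parity holds. Yet the TPR, FPR, PPV and NPV all differ by 0.5, which shows that satisfying one definition does not imply satisfying the others. Treatment equality comes out as NaN because group 1 has no false positives, so its FN/FP ratio is undefined.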

The paper further describes different types of biases that can arise in the ML pipeline, categorized into data-driven, human, and model bias.

- Data-Driven Biases:

  - Measurement Bias: Arises from subjective choices in model design, such as the selection of features and annotations.

  - Representation Bias: Occurs when the probability distribution of the training samples differs from the true underlying distribution.

  - Label Bias: Occurs when the labels used to train the model are inaccurate and deviate from the true labels.

  - Covariate Shift: Occurs when the distribution of the input features differs between training and testing.

  - Sampling Bias: Occurs when subgroups are not sampled randomly.

  - Specification Bias: Arises when the design choices of the system are misaligned with its end goals.

  - Aggregation Bias: Occurs when the way data is combined misrepresents the true underlying patterns.

  - Linking Bias: Occurs when attributes of networks derived from user interactions misrepresent true user behavior.

  - Inherited Bias: Arises when the biased output of one tool is used as input to other machine learning algorithms.

  - Longitudinal Data Fallacy: Occurs when cross-sectional analysis is used to study temporal data.

- Human Biases:

  - Historical Bias: Occurs when models perpetuate bias present in historical records.

  - Population Bias: Occurs when user characteristics or demographics differ systematically between the target population and the population represented in the data.

  - Self-selection Bias: Arises when subjects can decide for themselves whether to participate in a study.

  - Behavioral Bias: Occurs when user behavior shows systematic distortions across platforms or contexts.

  - Temporal Shift: Describes systematic distortions in behavior that arise over time or among different user populations.

  - Content Production Bias: Occurs when user-generated content reflects behavioral bias.

  - Deployment Bias: Emerges when a model is used in the real world in a way that does not match the problem it was designed to solve.

  - Feedback Bias: Arises when the output of a model influences the features or inputs used to retrain the model.

  - Popularity Bias: Arises when popular items receive disproportionately more exposure.

- Model Biases:

  - Algorithmic Bias: Introduced by the algorithm itself rather than by the input data.

  - Evaluation Bias: Arises when the data used to test the model is not representative of real-world scenarios.
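
Representation bias, as defined above, can be quantified when the sensitive-group shares of the target population are known. The following minimal Python sketch uses a hypothetical training set and assumed population shares. The helper names `group_shares` and `total_variation` are illustrative, not functions from the paper. The sketch measures the total variation distance between the group distribution in the training data and the reference distribution, where 0 means identical and 1 means no overlap.

```python
from collections import Counter
from collections.abc import Hashable, Mapping, Sequence


def group_shares(samples: Sequence[Hashable]) -> dict:
    """Empirical share of each group label in a sample."""
    counts = Counter(samples)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def total_variation(p: Mapping, q: Mapping) -> float:
    """Total variation distance between two discrete distributions over group labels."""
    keys = set(p) | set(q)
    # A group missing from one distribution is treated as having probability 0 there.
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Hypothetical data: a training set drawn mostly from group "a", compared with
# an assumed reference population in which both groups are equally common.
train_groups = ["a"] * 80 + ["b"] * 20
population_shares = {"a": 0.5, "b": 0.5}

train_shares = group_shares(train_groups)  # {"a": 0.8, "b": 0.2}
representation_gap = total_variation(train_shares, population_shares)
```

Here group "a" makes up 80% of the training data but an assumed 50% of the population, which gives a distance of 0.3. Applying the same comparison to a discretized feature in training versus test data gives a rough check for covariate shift.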

The paper details numerous cases of unfairness in real-world settings such as the criminal justice system, hiring, finance, healthcare, and education, and describes specific instances of biased predictions that discriminate against particular groups. In the criminal justice system, the COMPAS algorithm was found to discriminate against Black defendants by predicting a higher risk of re-offending for them. The HART tool, by contrast, was designed to prioritize avoiding low-risk scores for high-risk individuals. In hiring, advertisement delivery systems were found to exhibit gender and racial biases. In finance, lending models have been found to exacerbate bias and to exhibit historical bias against certain minority communities. In healthcare, diagnostic models have been shown to exhibit both gender and ethnic biases. In education, models used to grade and assess students have been shown to exhibit bias against some minority groups. The paper also includes examples of bias in other areas, such as ride-hailing services and facial recognition.

The paper discusses several ways to mitigate bias and promote fairness, categorizing these strategies into pre-processing, in-processing, and post-processing.

- Pre-processing techniques focus on removing bias from the training data:

  - Disparate Impact Remover: This technique transforms the data by modifying feature values so that their distributions for the protected and unprotected groups become closer.

  - Sampling: Techniques such as uniform and preferential sampling are used to address data misclassification and class imbalance.

  - Re-weighting: This assigns different weights to data objects based on their relevance. Data from disadvantaged groups is up-weighted, and data that causes discrimination is down-weighted.

- In-processing techniques focus on mitigating bias during model training:

  - Adversarial Learning: This approach trains a predictor together with an adversary that tries to predict the sensitive attribute. The predictor is trained not only to fulfill its task but also to counteract the adversary's ability to predict the sensitive attribute.

  - Causal Approaches: These aim to identify the causal pathways through which sensitive attributes can influence model outcomes, and to design interventions that reduce the bias along those pathways.

  - Regularization: This adds constraints or penalty terms to the loss function to discourage unfair outcomes.

  - Calibration: This ensures that the proportions of positive predictions and positive examples are equal across different sensitive groups.

  - Hyperparameter Optimization: This method considers fairness criteria during the hyperparameter tuning process.

  - Reinforcement Learning: This uses reinforcement learning to improve the model's fairness under concept drift and frequent data changes, with a focus on exploring and learning about underrepresented demographic groups.

- Post-processing techniques modify model predictions to mitigate unfairness:

  - Causal Approach: These techniques ensure that the model does not make decisions based on factors outside a person's control.

  - Calibration: These techniques adjust the model's outputs so that they better reflect the true outcomes.

  - Regularization: These techniques adjust the model's output so that predictions are consistent for similar individuals.
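
Two of the pre-processing techniques described above can be sketched in a few lines of Python. The sketch assumes a single binary label, a discrete sensitive attribute and one numeric feature, and all function names are hypothetical. `reweighing_weights` follows the widely used reweighing scheme of Kamiran and Calders, which gives each (group, label) combination the weight $P(A=a)P(Y=y)/P(A=a, Y=y)$. `repair_feature` is a simplified quantile-based repair in the spirit of the disparate impact remover. Neither is the paper's or any library's implementation.

```python
from bisect import bisect_right
from collections import Counter
from statistics import median


def reweighing_weights(groups, labels):
    """Instance weights w(a, y) = P(A=a) P(Y=y) / P(A=a, Y=y).

    Under these weights, A and Y are statistically independent in the training data.
    Combinations rarer than independence predicts are up-weighted, and
    over-represented combinations are down-weighted.
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [n_group[a] * n_label[y] / (n * n_joint[(a, y)])
            for a, y in zip(groups, labels)]


def _value_at_rank(sorted_vals, rank, size):
    """Smallest value in `sorted_vals` whose empirical CDF reaches rank/size.

    Integer ceiling division avoids floating-point rounding of the quantile level.
    """
    idx = -(-rank * len(sorted_vals) // size) - 1
    return sorted_vals[max(idx, 0)]


def repair_feature(values, groups, amount=1.0):
    """Quantile-based repair of one numeric feature (amount=1.0 is full repair).

    Each value is replaced by the median, across groups, of the value found at the
    same within-group quantile, so all groups end up with (approximately) the same
    distribution for this feature. amount < 1.0 interpolates back towards the original.
    """
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    sorted_by_group = {g: sorted(vs) for g, vs in by_group.items()}
    repaired = []
    for v, g in zip(values, groups):
        own = sorted_by_group[g]
        rank = bisect_right(own, v)  # number of group members <= v
        target = median(_value_at_rank(s, rank, len(own))
                        for s in sorted_by_group.values())
        repaired.append((1 - amount) * v + amount * target)
    return repaired


# Hypothetical toy data: group 0 has more positive labels and lower feature values.
groups = [0, 0, 0, 0, 1, 1, 1, 1]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
feature = [1, 2, 3, 4, 11, 12, 13, 14]

weights = reweighing_weights(groups, labels)
repaired = repair_feature(feature, groups)
```

In the toy data, 3 of the 4 examples in group 0 are positive but only 1 of 4 in group 1. After re-weighting, the weighted positive rate is 0.5 in both groups. Full repair maps the feature values of both groups onto the same values (6, 7, 8, 9). The feature therefore no longer reveals group membership, while each example keeps its rank within its group.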

The paper also covers how users are affected by unfair ML systems. Biased models can lead to negative user experiences, resulting in discrimination, limited opportunities, declining reliance on the system, and privacy concerns. The paper emphasizes the importance of good user experience (UX) in the design of AI systems. It also outlines various ethical considerations for developing AI models, including transparency, explainability, justice, fairness, equity, non-maleficence, responsibility, accountability, and privacy.

The authors also address challenges and limitations in mitigating bias. These include the trade-off between fairness and accuracy, the lack of a universally accepted definition of fairness, reliance on statistical methods that might not capture human behavior, and the difficulty in creating truly inclusive datasets. They point out that human intervention, while helpful, can be subjective and prone to bias.
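
The trade-off between fairness and accuracy can be made concrete with a small worked bound for demographic parity. This is a standard argument under stated assumptions, not one taken from the paper. Assume two equally sized groups with hypothetical base rates $p_0 = P(Y=1 \mid A=0) = 0.6$ and $p_1 = P(Y=1 \mid A=1) = 0.2$, and a classifier that predicts positive for the same fraction $r$ of each group. Within group $g$ of size $n_g$, predicted positives minus actual positives equals $FP_g - FN_g$. The group's error rate is therefore at least $|r - p_g|$:

$$
\begin{aligned}
\text{err}_g &= \frac{FP_g + FN_g}{n_g} \;\ge\; \frac{|FP_g - FN_g|}{n_g} = |r - p_g|, \\
\text{err} &= \tfrac{1}{2}\left(\text{err}_0 + \text{err}_1\right) \;\ge\; \tfrac{1}{2}\left(|r - p_0| + |r - p_1|\right) \;\ge\; \tfrac{1}{2}\,|p_0 - p_1| = 0.2 .
\end{aligned}
$$

So even if the labels were perfectly predictable, any classifier that satisfies demographic parity here has an accuracy of at most 80%. A perfect classifier, on the other hand, would select 60% of one group and 20% of the other, violating demographic parity.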

In conclusion, the paper provides an overview of the research conducted to make AI models fairer and more ethical. It covers topics ranging from defining fairness and identifying bias to real-world cases of biased models, mitigation strategies, and ethical considerations. The paper emphasizes the need for a multi-pronged approach to mitigating bias in models, with adversarial learning and regularization as prominent strategies. The authors stress the importance of developing and deploying fair automated models, particularly by mitigating biases that can affect life-altering decisions. The survey highlights the critical need for ongoing research and development in the field of fair AI.