Enhancing LLMs in Low-Data Regimes through Iterative Data Augmentation

Introduction

Large language models (LLMs) have emerged as versatile tools for a wide array of NLP tasks. Applying them in specialized or data-scarce settings remains difficult, however, mainly because conventional fine-tuning is ineffective when only a small amount of task-specific data is available. To address this, the paper introduces LLM2LLM, a method for targeted, iterative data augmentation. It generates synthetic training data focused on the examples the model gets wrong, and it substantially improves LLM performance, particularly in low-data regimes.

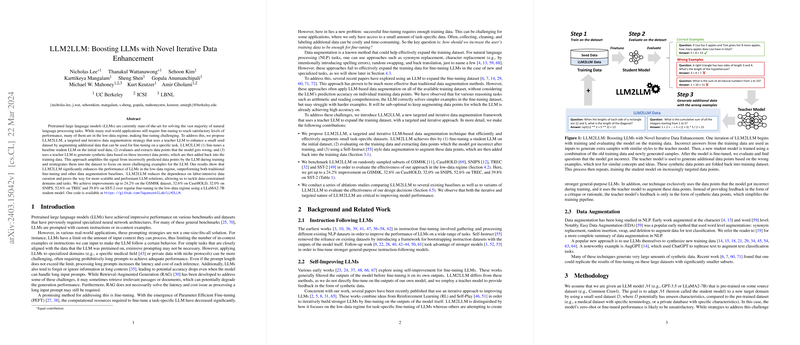

LLM2LLM Framework

LLM2LLM uses a teacher-student setup. It first fine-tunes a baseline student LLM on the available seed data, then evaluates the student on that data to identify the examples it predicts incorrectly. A teacher LLM then generates new synthetic examples modeled on these failures, and the synthetic data is added to the student's training set. Repeating this cycle keeps the augmentation focused on the examples the current student still finds difficult, which progressively improves its performance.
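The loop described above can be sketched in Python. This is a minimal, hypothetical sketch rather than the paper's implementation: `fine_tune`, `predict`, and `toy_teacher` are toy stand-ins (a bag-of-words label counter plays the student, and a word-reversal "paraphrase" plays the teacher LLM). Evaluating only on the seed set, augmenting only from failing seed examples, and retraining from the base model each round are assumptions of the sketch.

```python
import copy
from collections import Counter

# Hypothetical toy stand-ins: a real setup would fine-tune a student LLM
# and prompt a teacher LLM. Here the "student" is a bag-of-words label
# counter so the loop can run end to end.
Example = tuple[str, str]          # (input text, gold label)
Model = dict[str, Counter]         # word -> counts of labels seen with it


def fine_tune(base: Model, data: list[Example]) -> Model:
    """Toy fine-tuning: copy the base model, then count word/label pairs."""
    model = copy.deepcopy(base)
    for text, label in data:
        for word in text.split():
            model.setdefault(word, Counter())[label] += 1
    return model


def predict(model: Model, text: str) -> str:
    """Pick the label with the highest summed word counts (ties: alphabetical)."""
    scores = Counter()
    for word in text.split():
        scores.update(model.get(word, Counter()))
    if not scores:
        return "unknown"
    return max(sorted(scores), key=lambda label: scores[label])


def toy_teacher(example: Example) -> list[Example]:
    """Hypothetical teacher: emit one reworded variant (reversed word order)
    that keeps the gold label. A real teacher LLM would be prompted with the
    failing example and asked for new, similar examples."""
    text, label = example
    return [(" ".join(reversed(text.split())), label)]


def llm2llm(seed, base, teacher, n_iterations=3):
    train = list(seed)
    history = []                   # misclassified seed examples per round
    student = fine_tune(base, train)
    for _ in range(n_iterations):
        # Evaluate the student on the seed data and collect its mistakes.
        wrong = [(x, y) for x, y in seed if predict(student, x) != y]
        history.append(len(wrong))
        if not wrong:
            break
        # The teacher generates new examples only from failing seed examples.
        train.extend(new for ex in wrong for new in teacher(ex))
        # Retrain from the untouched base model on the enlarged dataset.
        student = fine_tune(base, train)
    return student, train, history


SEED = [("great movie", "pos"), ("great plot", "pos"),
        ("great acting", "pos"), ("not great", "neg")]

if __name__ == "__main__":
    student, train, history = llm2llm(SEED, {}, toy_teacher)
    print(history)       # [1, 0]
    print(len(train))    # 5
```

With this seed set, the first round misclassifies "not great" because "great" co-occurs mostly with the positive label. The teacher's variant shifts the word counts toward the gold label, the second round finds no errors, and the loop stops early.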

Empirical Evaluations

The effectiveness of LLM2LLM was evaluated on GSM8K, CaseHOLD, SNIPS, TREC, and SST-2. These datasets span a range of task types and difficulty, from grade-school math word problems (GSM8K) to legal reasoning (CaseHOLD), intent and question classification (SNIPS, TREC), and sentiment analysis (SST-2). Compared with regular fine-tuning, LLM2LLM achieved improvements of up to 24.2% on GSM8K, 32.6% on CaseHOLD, 32.0% on SNIPS, 52.6% on TREC, and 39.8% on SST-2. The gains were largest when the initial seed dataset was smallest, underscoring LLM2LLM's potential in data-scarce settings.

Comparative Analysis

LLM2LLM was benchmarked against several baselines: regular fine-tuning and the data augmentation methods Easy Data Augmentation (EDA) and AugGPT. It outperformed all of them on every dataset, suggesting that its targeted synthetic data is more useful for training than the data these baselines generate. A further study of teacher model choice showed that the quality of the generated data, and therefore the size of the performance gain, depends on the capability of the teacher model.

Ablation Studies

A series of ablation studies isolates the contribution of LLM2LLM's core components and design choices. They confirm that both the iterative generation of data and the focus on augmenting incorrectly predicted examples are necessary. They also show that re-initializing the student from the base model before each fine-tuning phase, rather than continuing to train the previous iteration's student, reduces overfitting and leads to more robust learning across iterations.
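The two design choices examined in these ablations can be expressed as switches in a training loop. The following is a hypothetical sketch: the function names, and the random-subset control used when `targeted=False`, are illustrative assumptions rather than the paper's exact ablation setup.

```python
import copy
import random
from typing import Any, Optional

Example = tuple[str, str]  # (input text, gold label)


def select_for_augmentation(seed: list[Example], predictions: list[str],
                            targeted: bool = True,
                            rng: Optional[random.Random] = None) -> list[Example]:
    """Choose which seed examples the teacher should augment.

    targeted=True  -> LLM2LLM-style: only the examples the student got wrong.
    targeted=False -> hypothetical control: a random subset of the same size,
                      ignoring whether the student was right or wrong.
    """
    wrong = [ex for ex, pred in zip(seed, predictions) if pred != ex[1]]
    if targeted:
        return wrong
    rng = rng or random.Random(0)
    return rng.sample(seed, len(wrong))


def init_student(base_model: Any, previous_student: Any, reset: bool = True) -> Any:
    """Choose the starting point for the next fine-tuning phase.

    reset=True  -> a fresh copy of the base model (the choice the ablation favors).
    reset=False -> keep training the previous iteration's student.
    """
    return copy.deepcopy(base_model) if reset else previous_student


if __name__ == "__main__":
    seed = [("a", "x"), ("b", "y"), ("c", "x"), ("d", "y")]
    preds = ["x", "x", "x", "y"]  # the student misses only ("b", "y")
    print(select_for_augmentation(seed, preds))  # [('b', 'y')]
```

Keeping both choices as explicit flags makes it straightforward to run the same loop with either option disabled and compare the results.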

Implications and Future Directions

LLM2LLM offers a promising way to improve LLMs in specialized or data-scarce environments. By generating targeted synthetic data, it reduces the need for extensive and potentially costly data collection. Because each iteration draws new examples from the current student's failures, the training data keeps pace with the model's evolving capabilities and stays appropriately challenging. Future work could combine LLM2LLM with other model adaptation and data augmentation techniques, potentially extending LLMs to new applications across diverse domains.