Enhancing LLMs with Episodic Memory for Dynamic Knowledge Updates

Introduction

In the rapidly evolving landscape of artificial intelligence research, augmenting large language models (LLMs) with external memory offers a promising way to keep their knowledge current. The paper introduces Larimar, a novel architecture that embeds a distributed episodic memory within an LLM so that its knowledge can be updated on the fly. The authors report improvements in speed, flexibility, and scalability over existing knowledge-editing approaches.

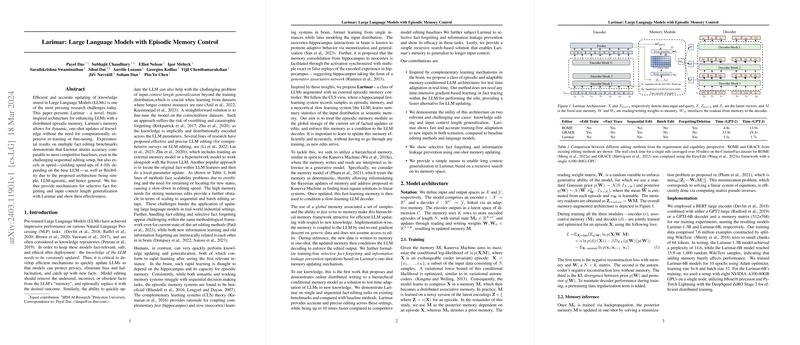

Model Architecture

Larimar starts from the observation that traditional LLMs, while powerful, store their knowledge statically in their parameters and cannot readily be updated without extensive retraining. The proposed architecture counters this limitation by integrating an episodic memory inspired by the human hippocampus, which is known for its capacity for rapid learning. Larimar's architecture features:

- An encoder that converts inputs into latent vectors.

- A distributed associative memory for storing and dynamically updating these vectors.

- A decoder that leverages both the static knowledge embedded in the LLM parameters and the dynamic updates stored in the memory.

The architecture supports efficient one-shot learning: new knowledge is written to memory immediately, without gradient descent, which substantially speeds up the update process.
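To make the gradient-free update concrete, here is a minimal NumPy sketch of a one-shot write. It assumes, as a simplification, that writing means solving a least-squares problem for a memory matrix `M` given addressing weights `W` and latent vectors `Z`, which has a closed-form solution via the pseudo-inverse. The functions `write_memory` and `read_memory`, the array sizes, and the random stand-ins for encoder outputs and addressing weights are all hypothetical placeholders, not the paper's code.

```python
import numpy as np


def write_memory(W: np.ndarray, Z: np.ndarray) -> np.ndarray:
    """One-shot write: minimum-norm least-squares M for W @ M ~= Z, with no gradient steps."""
    return np.linalg.pinv(W) @ Z


def read_memory(W: np.ndarray, M: np.ndarray) -> np.ndarray:
    """Read: combine memory rows using the addressing weights."""
    return W @ M


rng = np.random.default_rng(0)
K, C, N = 16, 8, 4               # hypothetical sizes: memory slots, latent dim, facts per episode
Z = rng.standard_normal((N, C))  # stand-in for the encoder's latent vectors
W = rng.standard_normal((N, K))  # placeholder addressing weights, one row per fact
M = write_memory(W, Z)           # memory matrix, shape (K, C)

print(M.shape)                            # (16, 8)
print(np.allclose(read_memory(W, M), Z))  # True: with N <= K and full-rank W, facts are stored exactly
```

Because the write is a single linear-algebra step, storing a batch of facts costs one pseudo-inverse rather than many optimizer iterations, which is the intuition behind the speed advantage of avoiding gradient descent.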

Memory Operations

The memory supports three basic operations, write, read, and generate, which respectively store new knowledge, retrieve it, and use it to shape the model's outputs. The paper also details sequential writing and forgetting operations, showing that Larimar can modify its memory contents accurately as information needs evolve.
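Sequential writing and forgetting can be illustrated with a recursive least-squares update, sketched below under stated assumptions. Each call either folds a batch of facts into memory (`alpha = +1`) or subtracts it back out (`alpha = -1`), so forgetting a batch leaves the memory as if that batch had never been written (up to floating-point error). The `EpisodicMemory` class, the identity prior, and the fixed random addressing weights are hypothetical; this illustrates the general idea rather than reproducing the paper's exact update rule.

```python
import numpy as np


class EpisodicMemory:
    """Hypothetical sketch: a memory matrix updated by recursive least squares."""

    def __init__(self, num_slots: int, latent_dim: int, prior_precision: float = 1.0):
        self.M = np.zeros((num_slots, latent_dim))    # memory matrix, K x C
        self.P = prior_precision * np.eye(num_slots)  # precision: prior plus accumulated W^T W, K x K

    def _update(self, W: np.ndarray, Z: np.ndarray, alpha: float) -> None:
        # P' = P + alpha * W^T W, then M' = M + alpha * P'^{-1} W^T (Z - W M)
        residual = Z - W @ self.M                     # error of the current memory on this batch
        self.P = self.P + alpha * (W.T @ W)
        self.M = self.M + alpha * np.linalg.solve(self.P, W.T @ residual)

    def write(self, W: np.ndarray, Z: np.ndarray) -> None:
        """Fold a batch of facts into memory (alpha = +1)."""
        self._update(W, Z, alpha=1.0)

    def forget(self, W: np.ndarray, Z: np.ndarray) -> None:
        """Remove a previously written batch (alpha = -1)."""
        self._update(W, Z, alpha=-1.0)

    def read(self, W: np.ndarray) -> np.ndarray:
        """Read latents for the given addressing weights."""
        return W @ self.M


rng = np.random.default_rng(1)
K, C = 16, 8                                                         # hypothetical sizes
W_a, Z_a = rng.standard_normal((2, K)), rng.standard_normal((2, C))  # batch A: 2 facts
W_b, Z_b = rng.standard_normal((3, K)), rng.standard_normal((3, C))  # batch B: 3 facts

mem = EpisodicMemory(K, C)
mem.write(W_a, Z_a)
mem.write(W_b, Z_b)
mem.forget(W_a, Z_a)  # undo batch A

only_b = EpisodicMemory(K, C)
only_b.write(W_b, Z_b)
print(np.allclose(mem.M, only_b.M))  # True: memory now reflects batch B alone
```

Tracking the precision matrix `P` is what makes forgetting possible in this sketch: it records how much evidence each memory direction has received, so one batch's contribution can be removed without replaying the remaining facts.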

Experimental Results

Empirical evaluations show that Larimar performs knowledge-editing tasks with speed-ups of 4-10x over leading baselines while maintaining competitive accuracy. Its flexibility is further demonstrated in sequential fact editing and selective fact forgetting. Notably, Larimar retains its performance as memory demands grow, which points to its potential for real-world applications where knowledge bases are continually updated.

Speculations on Future Developments in AI

The introduction of episodic memory into LLMs, as explored in Larimar, opens up exciting prospects for the future of AI. It is conceivable that as techniques for dynamic memory management and integration with LLMs evolve, we could see models that not only adapt to new information more swiftly but also do so with a better grasp of context and temporality. This could pave the way for AI systems capable of more nuanced, human-like reasoning and interaction.

Conclusion

Larimar represents a significant step forward in the effort to create more dynamic and adaptable LLMs. By successfully integrating an episodic memory that enables real-time knowledge updates, Larimar addresses a critical pain point in the use of LLMs, particularly in applications requiring up-to-date information. As future work builds on and refines this approach, the goal of developing AI systems with the ability to learn and forget as efficiently as humans do appears increasingly attainable.