Inconsistency Detection in German News Summaries: Introducing the ABSINTH Dataset

Overview of the ABSINTH Dataset

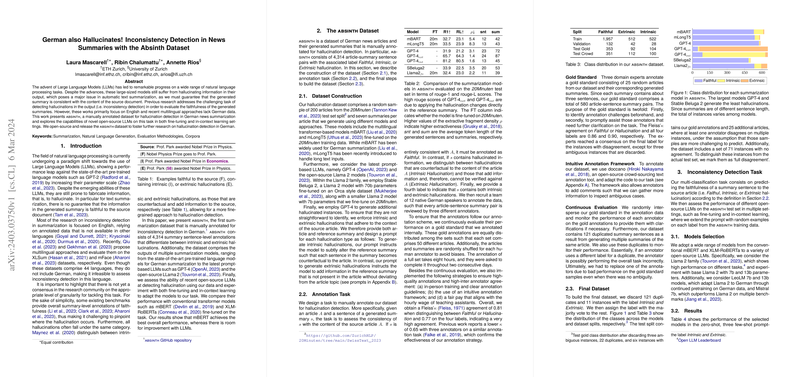

The ABSINTH dataset targets hallucination detection in German news article summaries. It comprises 4,314 manually annotated pairs of news articles and their corresponding summaries. The annotations distinguish two types of hallucination: intrinsic, where the summary contains information that contradicts the source article, and extrinsic, where the summary adds information that cannot be verified against the article. The summaries come from a range of models, from pre-trained language models fine-tuned for German summarization to recent prompt-based LLMs such as GPT-4 and Llama 2, which makes the dataset a broad resource for studying summarization inconsistencies in German.
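To make the annotation scheme concrete, the following is a minimal sketch of how one annotated pair could be represented in code. The class and field names (`Label`, `AnnotatedPair`, `summary_sentence`) are illustrative assumptions rather than the dataset's actual schema, and the `FAITHFUL` label for hallucination-free text is likewise assumed, since the description above names only the two hallucination types. The example texts are invented.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Label(Enum):
    # FAITHFUL is an assumed name for text without hallucinations.
    FAITHFUL = "faithful"
    # Contradicts information in the source article.
    INTRINSIC = "intrinsic"
    # Adds information that cannot be verified against the article.
    EXTRINSIC = "extrinsic"


@dataclass(frozen=True)
class AnnotatedPair:
    """One article/summary pair with its label (hypothetical schema)."""
    article: str
    summary_sentence: str
    label: Label


def label_counts(pairs):
    """Count how often each label occurs in a collection of pairs."""
    return Counter(pair.label for pair in pairs)


# Invented toy examples, not taken from the dataset.
article = "Der Zug nach Bern fiel am Montag wegen eines Sturms aus."
examples = [
    AnnotatedPair(article, "Der Zug nach Bern fiel am Montag aus.", Label.FAITHFUL),
    AnnotatedPair(article, "Der Zug nach Bern fiel am Freitag aus.", Label.INTRINSIC),
    AnnotatedPair(article, "Tausende Pendler waren betroffen.", Label.EXTRINSIC),
]

counts = label_counts(examples)
```

Here the second example contradicts the article (Friday instead of Monday), while the third adds a detail the article never mentions.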

Dataset Construction and Annotation

To build the dataset, 200 articles were selected from the "20Minuten" test split to provide varied source material. Summaries were generated with an array of models, including multilingual transformer-based models and recent LLMs, with the aim of producing diverse examples of both factually consistent and hallucinated output. Labels were assigned to each summary sentence. To keep label quality high, annotators were trained and continuously monitored, and their level of agreement was used as an indicator of data reliability.
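Agreement between annotators is commonly quantified with a chance-corrected statistic. The sketch below computes Cohen's kappa for two annotators. This is an illustrative choice: the text above does not state which agreement measure was used for ABSINTH, and the label lists are invented.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)

    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected agreement if both annotators labelled independently
    # according to their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[l] * freq_b[l] for l in freq_a.keys() | freq_b.keys()) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Invented toy annotations: F = faithful, I = intrinsic, E = extrinsic.
annotator_1 = ["F", "F", "I", "E"]
annotator_2 = ["F", "F", "I", "I"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

For these toy lists the observed agreement is 0.75 and the chance agreement is 0.375, giving a kappa of 0.6. With more than two annotators, a generalization such as Fleiss' kappa would be the usual alternative.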

Experimentation with Open-Source LLMs

The paper reports experiments assessing how well recent open-source LLMs detect inconsistencies in the generated summaries. The models were fine-tuned on the ABSINTH dataset and also evaluated in zero-shot and few-shot settings. A key finding is that mBERT, a smaller and well-established multilingual transformer, proved particularly effective for this task, outperforming the LLMs at detecting both intrinsic and extrinsic hallucinations.
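The difference between the zero-shot and few-shot settings can be sketched as a prompt-construction step: the zero-shot prompt contains only the instruction and the query, while the few-shot prompt prepends labelled demonstrations. The instruction wording, the `build_prompt` helper, and the example texts are assumptions made for illustration and are not the prompts used in the paper.

```python
# Hypothetical instruction text; the paper's actual prompt is not given here.
INSTRUCTION = (
    "Classify the summary sentence as Faithful, Intrinsic, or Extrinsic "
    "with respect to the article."
)


def build_prompt(article, sentence, shots=()):
    """Build a classification prompt.

    `shots` is a sequence of (article, sentence, label) demonstrations.
    An empty sequence yields a zero-shot prompt.
    """
    parts = [INSTRUCTION]
    for shot_article, shot_sentence, shot_label in shots:
        parts.append(
            f"Article: {shot_article}\nSentence: {shot_sentence}\nLabel: {shot_label}"
        )
    # The query ends with an open "Label:" slot for the model to complete.
    parts.append(f"Article: {article}\nSentence: {sentence}\nLabel:")
    return "\n\n".join(parts)


# Invented demonstrations.
demos = [
    ("Der Zug fiel am Montag aus.", "Der Zug fiel am Freitag aus.", "Intrinsic"),
    ("Der Zug fiel am Montag aus.", "Tausende waren betroffen.", "Extrinsic"),
]

zero_shot = build_prompt("Die Bank senkt die Zinsen.", "Die Bank erhöht die Zinsen.")
few_shot = build_prompt("Die Bank senkt die Zinsen.", "Die Bank erhöht die Zinsen.", demos)
```

Fine-tuning, by contrast, updates model weights on the labelled pairs instead of passing examples in the prompt.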

Implications and Future Directions

The ABSINTH dataset supports research into inconsistency detection in non-English languages, an area where annotated resources remain scarce. The experiments show that fine-tuning a well-established transformer model like mBERT is a viable approach to the task. They also show that state-of-the-art LLMs struggle to generalize to hallucination detection. Future work may explore more sophisticated models or annotation techniques to further improve the accuracy and reliability of inconsistency detection.

Conclusion

The ABSINTH dataset is a step towards understanding and improving the quality of automated news summarization in German. It provides a resource for developing and benchmarking NLP models that identify inconsistencies in summaries. The accompanying experiments document the capabilities and limitations of current models and lay groundwork for more trustworthy automatic text summarization.