Learning to Use Tools via Cooperative and Interactive Agents

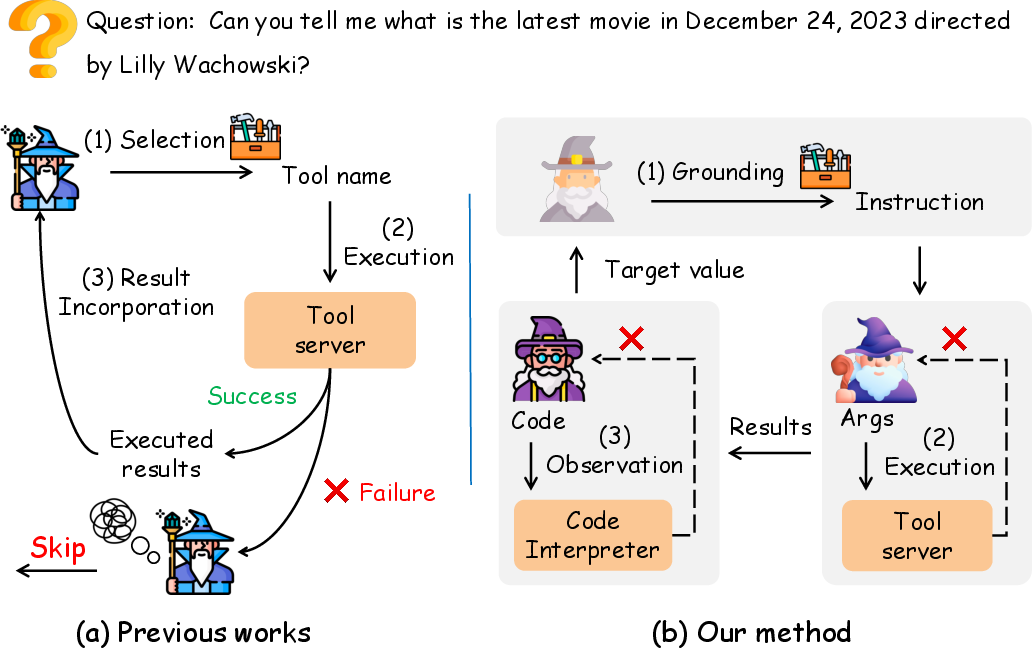

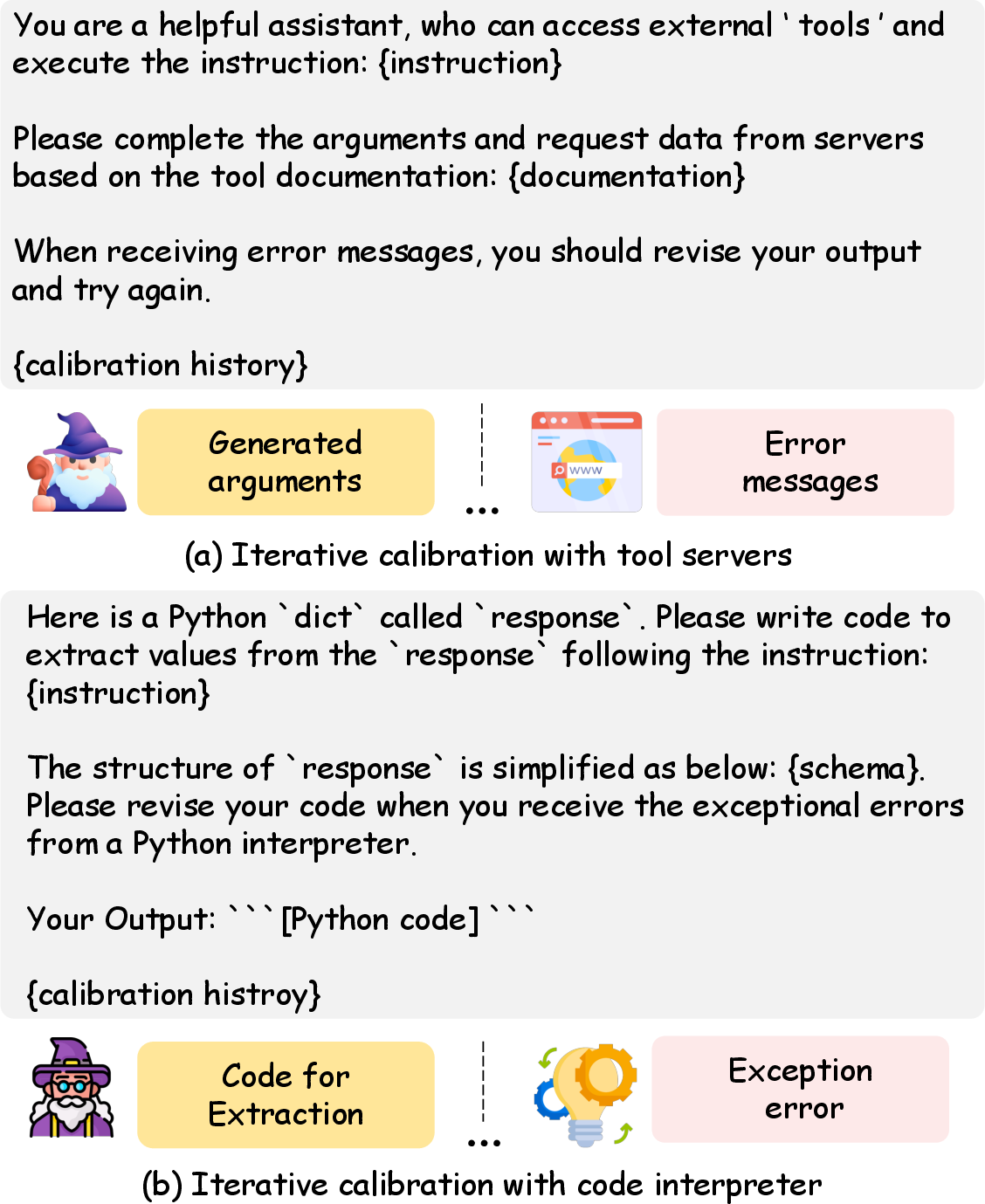

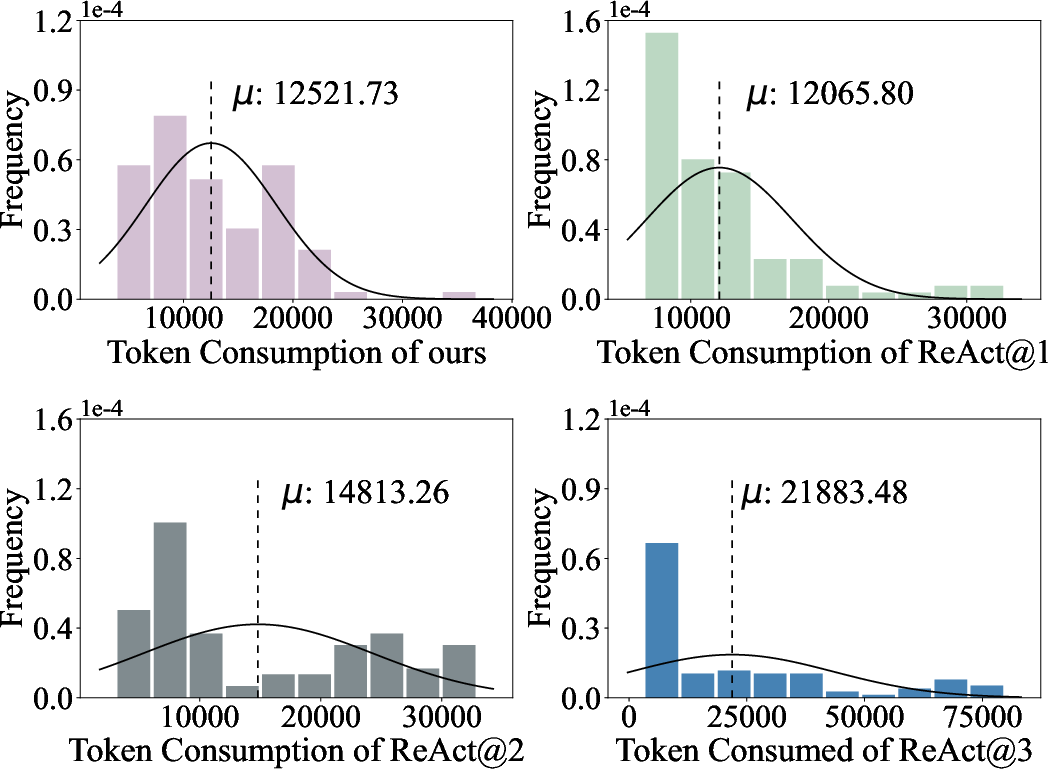

Abstract: Tool learning empowers LLMs, acting as agents, to use external tools and extend their utility. Existing methods employ a single LLM-based agent to iteratively select and execute tools, then incorporate the execution results into the next action prediction. Despite their progress, these methods suffer performance degradation on practical tasks due to (1) a pre-defined pipeline with limited flexibility to calibrate incorrect actions, and (2) the difficulty of adapting a general LLM-based agent to perform a variety of specialized actions. To mitigate these problems, we propose ConAgents, a Cooperative and interactive Agents framework that coordinates three specialized agents for tool selection, tool execution, and action calibration, respectively. ConAgents introduces two communication protocols to enable flexible cooperation among the agents. To generalize ConAgents effectively to open-source models, we also propose specialized action distillation, which enhances their ability to perform the specialized actions in our framework. Extensive experiments on three datasets show that LLMs equipped with ConAgents substantially outperform baselines (up to a 14% higher success rate).
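The abstract names three agent roles (tool selection, tool execution, action calibration) but does not specify their prompts or the two communication protocols. The following is a minimal, hypothetical sketch of such a cooperative select, execute, calibrate loop, assuming that execution errors are passed back to a calibration step that revises the action before retrying. The function names, the `weather` tool, the error message format, and the retry budget are all illustrative assumptions, and simple keyword and string rules stand in for the LLM-based agents. This is not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: a tool is any function from a dict of arguments to a string result.
Tool = Callable[[dict], str]


@dataclass
class Action:
    tool: str
    args: dict


def select_tool(task: str, tools: Dict[str, Tool]) -> Action:
    """Hypothetical selection agent: pick the first tool whose name appears in the task.
    A keyword match stands in for an LLM-based selector."""
    for name in tools:
        if name in task:
            return Action(tool=name, args={"query": task})
    raise ValueError(f"no tool matches task: {task!r}")


def execute(action: Action, tools: Dict[str, Tool]) -> str:
    """Hypothetical execution agent: run the chosen tool; failures surface as ValueError."""
    return tools[action.tool](action.args)


def calibrate(action: Action, error: str) -> Action:
    """Hypothetical calibration agent: revise the action using the error feedback.
    This toy rule only repairs a missing 'city' argument; otherwise it returns the action unchanged."""
    if "missing 'city'" in error:
        fixed = dict(action.args, city=action.args["query"].split()[-1])
        return Action(tool=action.tool, args=fixed)
    return action


def run(task: str, tools: Dict[str, Tool], max_calibrations: int = 2) -> List[str]:
    """Cooperative loop: select once, then execute; on failure, calibrate and re-execute."""
    log: List[str] = []
    action = select_tool(task, tools)
    log.append(f"select: {action.tool}")
    for attempt in range(max_calibrations + 1):
        try:
            result = execute(action, tools)
        except ValueError as err:
            log.append(f"error: {err}")
            if attempt == max_calibrations:
                break  # calibration budget exhausted
            action = calibrate(action, str(err))
            log.append(f"calibrate: {sorted(action.args)}")
            continue
        log.append(f"result: {result}")
        return log
    log.append("give up")
    return log


def weather(args: dict) -> str:
    """Hypothetical tool that requires a 'city' argument."""
    if "city" not in args:
        raise ValueError("missing 'city' argument")
    return f"sunny in {args['city']}"


if __name__ == "__main__":
    for line in run("weather in Paris", {"weather": weather}):
        print(line)
```

In this toy run the first execution fails because `city` is missing, the calibration step fills it in from the query, and the second execution succeeds. Keeping calibration as a separate step, rather than folding it into a fixed single-agent pipeline, is one way to read the abstract's point about flexibly correcting incorrect actions.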
