Introducing SoftTiger: Elevating Healthcare Workflows through a Clinical LLM

Overview

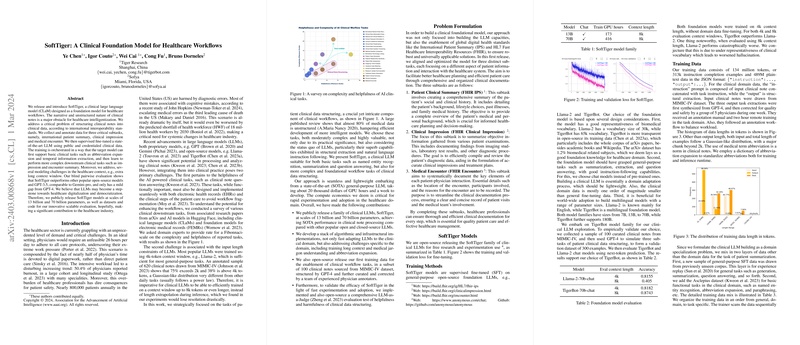

The healthcare industry faces a host of challenges, exacerbated by overburdened clinicians whose time and expertise are in unsustainable demand. Large language models (LLMs) offer a promising way to streamline healthcare workflows by automating the processing of clinical notes. In this context, the paper presents SoftTiger, a clinical LLM engineered specifically to transform unstructured clinical notes into structured clinical data that adheres to international interoperability standards. Beyond presenting the model, the authors describe how they tackled domain-specific challenges such as long context windows and medical jargon. This summary explores the foundations of SoftTiger: its contributions, its methodology, and its implications for the future of healthcare digitalization.

The Genesis of SoftTiger

At the heart of healthcare digitalization lies the challenge of distilling actionable information from the lengthy narratives found in clinical notes. General-purpose LLMs face two significant hurdles in clinical practice: aligning with healthcare workflows and handling the length of clinical notes. Through an analysis of existing clinical tasks, the authors identify the gap SoftTiger aims to bridge: transforming unstructured clinical notes into structured clinical data. The model therefore focuses on core clinical subtasks such as Patient Clinical Summary, Clinical Impression, and Medical Encounter, providing a foundation for a range of healthcare applications.
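To make the structuring task concrete, here is a minimal sketch that validates a model's JSON answer against a target schema covering the three subtasks named above. It assumes the model has been prompted to answer in JSON. The schema, its field names, and the function `parse_model_output` are hypothetical and chosen for illustration only; they are not SoftTiger's actual output format, nor the definition used by any interoperability standard.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical schema for illustration only: these field names are not taken
# from the SoftTiger paper or from any interoperability standard.
@dataclass
class PatientClinicalSummary:
    chief_complaint: str
    problems: List[str] = field(default_factory=list)
    medications: List[str] = field(default_factory=list)

@dataclass
class ClinicalImpression:
    assessment: str
    differential_diagnoses: List[str] = field(default_factory=list)

@dataclass
class MedicalEncounter:
    encounter_type: str
    reason: str
    date: Optional[str] = None  # e.g. an ISO 8601 date string, if present

@dataclass
class StructuredNote:
    summary: PatientClinicalSummary
    impression: ClinicalImpression
    encounter: MedicalEncounter

def parse_model_output(raw: str) -> StructuredNote:
    """Turn a model's JSON answer into typed records.

    Raises json.JSONDecodeError for malformed JSON, KeyError for a missing
    section, and TypeError for missing or unexpected fields in a section.
    """
    data = json.loads(raw)
    return StructuredNote(
        summary=PatientClinicalSummary(**data["summary"]),
        impression=ClinicalImpression(**data["impression"]),
        encounter=MedicalEncounter(**data["encounter"]),
    )

# Example with a made-up model answer.
example = (
    '{"summary": {"chief_complaint": "chest pain", "problems": ["hypertension"]},'
    ' "impression": {"assessment": "possible angina"},'
    ' "encounter": {"encounter_type": "emergency", "reason": "chest pain"}}'
)
print(parse_model_output(example).impression.assessment)  # possible angina
```

Validating into typed records this way rejects answers with missing or misnamed fields instead of passing them silently to downstream systems.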

Model Architecture and Training

SoftTiger is built by taking an existing foundation model and fine-tuning it specifically for the healthcare domain. Key takeaways from the training phase:

- Foundation Model Selection: SoftTiger builds on TigerBot, a foundation model noted for its rich biomedical vocabulary and general-purpose task handling, and adapts it to clinical applications through supervised fine-tuning (SFT).

- Training Data: SoftTiger's training data comprised 134 million tokens drawn from a mix of domain-specific and general-purpose datasets, with significant emphasis on clinical structuring tasks.

- Challenges in Context: To accommodate lengthy clinical notes, SoftTiger supports a context window of up to 8k tokens, a capability critical for this domain.
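When a note is longer than the model's window, one generic option is to split it into overlapping chunks that each fit alongside the prompt and the expected output. The summary does not say how SoftTiger handles notes beyond 8k tokens, so this is a minimal sketch under stated assumptions: the exact window size of 8,192 tokens, the 256-token overlap, and the whitespace tokenizer (a stand-in for the model's real tokenizer) are illustrative, as are the hypothetical names `chunk_tokens` and `fit_note`.

```python
from typing import List

CONTEXT_WINDOW = 8192  # assumed exact size of the "8k" window

def chunk_tokens(tokens: List[str], max_len: int, overlap: int) -> List[List[str]]:
    """Split tokens into windows of at most max_len tokens; consecutive
    windows share `overlap` tokens, so text near a boundary appears in both."""
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must satisfy 0 <= overlap < max_len")
    step = max_len - overlap
    chunks: List[List[str]] = []
    start = 0
    while True:
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):  # this window reached the end
            break
        start += step
    return chunks

def fit_note(note: str, prompt_tokens: int, output_budget: int,
             overlap: int = 256) -> List[str]:
    """Chunk a note so that prompt + chunk + generated output fit in the window."""
    tokens = note.split()  # whitespace split: a stand-in for the real tokenizer
    max_len = CONTEXT_WINDOW - prompt_tokens - output_budget
    return [" ".join(chunk) for chunk in chunk_tokens(tokens, max_len, overlap)]

# A 10,000-word note with a 512-token prompt and 1,024 tokens reserved for output.
chunks = fit_note(" ".join(["word"] * 10_000), prompt_tokens=512, output_budget=1024)
print(len(chunks))  # 2
```

The overlap trades a few duplicated tokens for continuity across chunk boundaries; the per-chunk outputs would still need to be merged into a single structured record.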

Evaluation and Contributions

SoftTiger was evaluated through next-token prediction and through blind pairwise comparisons in which an LLM acts as the judge (LLM-as-a-Judge). In these evaluations it outperforms widely used models such as Llama-2 and even GPT-3.5 while coming close to GPT-4, supporting its ability to process and structure clinical notes accurately. The paper's main contributions are:
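Both evaluation styles can be sketched in a few lines. Perplexity summarizes next-token prediction quality as the exponential of the mean negative log-likelihood of the observed tokens, while a blind pairwise comparison shows a judge two anonymized answers and records which one it prefers. In this sketch, the judge interface, the hypothetical names `perplexity` and `pairwise_win_rate`, the practice of judging each pair in both orders to reduce position bias, and the convention of counting ties as half a win are all assumptions for illustration, not details confirmed for SoftTiger's evaluation.

```python
import math
from typing import Callable, List, Sequence

def perplexity(token_logprobs: Sequence[float]) -> float:
    """exp of the mean negative natural-log probability of each observed token."""
    if len(token_logprobs) == 0:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

# Hypothetical judge interface: (question, answer_a, answer_b) -> "A", "B" or "tie".
Judge = Callable[[str, str, str], str]

def pairwise_win_rate(questions: List[str], answers_x: List[str],
                      answers_y: List[str], judge: Judge) -> float:
    """Share of comparisons won by model X, with ties counted as half a win.

    The judge only sees the labels "A" and "B", never the model names, and
    each pair is judged twice with the presentation order swapped.
    """
    if not (len(questions) == len(answers_x) == len(answers_y)):
        raise ValueError("inputs must have equal length")
    score, total = 0.0, 0
    for q, x, y in zip(questions, answers_x, answers_y):
        # X is shown first (label "A"), then second (label "B").
        for first, second, x_label in ((x, y, "A"), (y, x, "B")):
            verdict = judge(q, first, second)
            if verdict not in ("A", "B", "tie"):
                raise ValueError(f"unexpected verdict: {verdict!r}")
            if verdict == x_label:
                score += 1.0
            elif verdict == "tie":
                score += 0.5
            total += 1
    return score / total if total else 0.0

# Toy judge for demonstration only: prefers the longer answer.
def longer_answer_judge(question: str, a: str, b: str) -> str:
    if len(a) == len(b):
        return "tie"
    return "A" if len(a) > len(b) else "B"

print(perplexity([math.log(0.5)] * 4))  # probability 0.5 per token -> perplexity of about 2
print(pairwise_win_rate(["q1"], ["a detailed answer"], ["short"], longer_answer_judge))  # 1.0
```

In a real setup the toy judge would be replaced by a call to a strong LLM prompted to compare the two answers; swapping the order means a judge that always favors the first position scores both models at 0.5.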

- Release of SoftTiger Models: The authors have made two configurations of SoftTiger (13 billion and 70 billion parameters) publicly available, catering to a broad spectrum of clinical data processing needs.

- Bespoke Training and Evaluation Frameworks: By innovating in training methodologies and employing a cost-effective evaluation mechanism, the paper lays a foundation for future refinement and adaptation in the healthcare sector.

- Open-Source Contributions: In addition to the models, the authors release their training datasets, code, and evaluation protocols, ensuring transparency and fostering community engagement in further development.

Future Directions and Implications

The release of SoftTiger opens up many opportunities for advancing healthcare digitalization. By structuring clinical data effectively, SoftTiger not only streamlines clinical workflows but also paves the way for more sophisticated AI-driven diagnostic and treatment strategies. As future directions, the authors aim to tackle hallucination and to explore retrieval-augmented generation, both promising avenues for further improving the reliability and utility of clinical LLMs.

SoftTiger represents a pivotal step towards the digitalization and democratization of healthcare, illustrating the potential of LLM technology to transform an industry on the brink of a major shift.