Hierarchical Reinforcement Learning for LLMs Achieves Improved Sample Efficiency

Introduction to ArCHer Framework

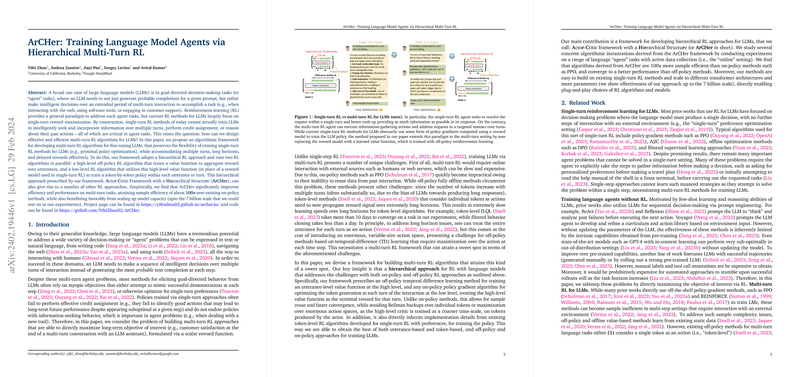

Agent tasks involving decision-making over multiple turns, where actions in one turn can affect outcomes in subsequent interactions, pose unique challenges in the field of reinforcement learning (RL), particularly when employing LLMs. Traditional RL methods often fall short in this context due to their focus on single-turn reward maximization, which fails to address the complexities inherent in multi-turn interactions. To bridge this gap, we introduce the Actor-Critic Framework with a Hierarchical Structure (ArCHer), a novel algorithmic approach designed to fine-tune LLMs for complex, multi-turn agent tasks by employing hierarchical reinforcement learning.

Key Contributions and Framework Overview

ArCHer distinguishes itself by operating on two levels: a high-level (utterance-level) and a low-level (token-level), running parallel RL algorithms at each tier. This dual structure offers several advantages, including improved sample efficiency, the ability to handle long horizons and delayed rewards more effectively, and scalability to larger model capacities. Specifically, the hierarchical approach allows for the segmentation of decision-making processes into manageable parts, thereby reducing the complexity of credit assignment and enhancing the capacity for long-term planning. Empirically, ArCHer has demonstrated significantly better efficiency and performance on multi-turn tasks, achieving about 100x improvement in sample efficiency over existing on-policy methods, while also benefiting from an increase in model capacity—up to a 7 billion parameter scale in tested experiments.

Theoretical Implications and Practical Benefits

ArCHer's design addresses several key theoretical challenges in training LLMs for agent tasks, including overcoming the difficulties associated with long training horizons and ensuring meaningful policy improvement beyond narrow constraints. The framework's flexibility in integrating various components for both high- and low-level methods opens new avenues for further research and development in hierarchical RL algorithms. Moreover, our findings suggest that ArCHer not only facilitates a more natural and effective way to leverage off-policy data but also underscores the importance of hierarchical structures in overcoming the limitations of existing RL approaches for LLMs.

Future Directions

While our paper has validated the efficacy of ArCHer with a focus on computational environments, extending the framework to support learning from live interactions with humans presents an exciting challenge for future work. This includes adapting the methodology to conditions where only a limited number of interactions are practical or feasible. Furthermore, exploring the potential of model-based RL techniques within the ArCHer framework could yield additional gains in both performance and efficiency. Continual research into novel instantiations of the ArCHer framework and its application across a broader range of tasks and models will undoubtedly enrich our understanding and capabilities in harnessing LLMs for sophisticated multi-turn decision-making tasks.

Closing Remarks

The development of the Actor-Critic Framework with a Hierarchical Structure marks a significant step forward in the application of reinforcement learning to LLMs, particularly in complex, multi-turn environments. By addressing the inherent challenges of sample efficiency, credit assignment, and scalability, ArCHer paves the way for more advanced, efficient, and capable LLM-based agents capable of tackling a wide array of decision-making problems with notable proficiency.