Optimizing Sub-billion Parameter LLMs for On-Device Applications

Introduction to MobileLLM

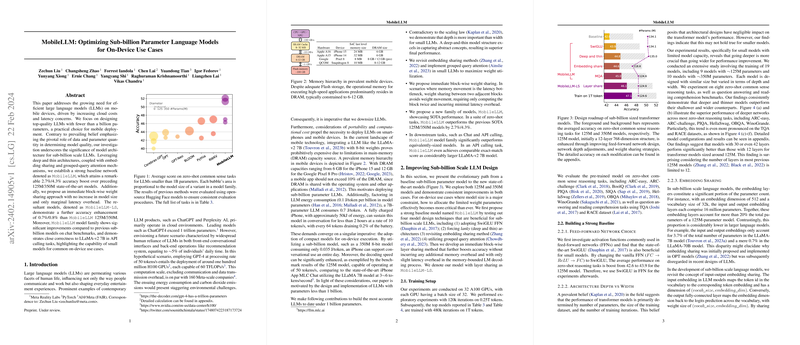

The advent of large language models (LLMs) has significantly accelerated the pace of innovation in NLP. However, deploying these models, especially in on-device applications, has been challenging because of their sheer size. The paper "MobileLLM: Optimizing Sub-billion Parameter LLMs for On-Device Use Cases" presents an approach to designing efficient LLMs tailored to mobile environments. The authors challenge the conventional emphasis on the quantity of data and parameters as the primary determinants of model quality, highlighting instead the crucial role of architecture in sub-billion parameter models.

Key Findings

The research unveils several pivotal findings that contribute to the field of compact LLM design:

- Depth over Width: Contrary to the prevailing scaling-law view that, at a fixed parameter count, model shape matters little, the paper finds that for compact models a deep-and-thin architecture significantly improves performance over a wide-and-shallow one.

- Efficient Weight Utilization Techniques: Embedding sharing, grouped-query attention, and a new immediate block-wise weight-sharing method make better use of a fixed parameter budget without increasing model size.

- MobileLLM and MobileLLM-LS: The paper introduces two new model families. MobileLLM sets a new state of the art (SOTA) for sub-billion parameter models. MobileLLM-LS, which adds layer sharing, further improves accuracy while staying within on-device size constraints.
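The parameter arithmetic behind these findings can be sketched with a simple counter for a decoder-only transformer. This is a minimal sketch under stated assumptions: a SwiGLU feed-forward layer with three weight matrices, grouped-query attention in which the key and value projections are sized by the number of KV heads, and no bias or normalization parameters. The `Config` fields, `param_count`, and both configurations are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Config:
    """Hypothetical decoder-only transformer shape (illustrative, not from the paper)."""
    vocab: int
    d_model: int
    n_layers: int
    n_heads: int
    n_kv_heads: int              # n_kv_heads < n_heads means grouped-query attention
    ffn_hidden: int
    tie_embeddings: bool = True  # share the input embedding with the output head


def param_count(cfg: Config) -> int:
    """Count weight parameters, ignoring biases and normalization parameters."""
    head_dim = cfg.d_model // cfg.n_heads
    kv_dim = cfg.n_kv_heads * head_dim
    # Q and output projections are d_model x d_model; K and V shrink with fewer KV heads.
    attn = 2 * cfg.d_model * cfg.d_model + 2 * cfg.d_model * kv_dim
    # SwiGLU feed-forward: gate, up, and down projections.
    ffn = 3 * cfg.d_model * cfg.ffn_hidden
    embedding = cfg.vocab * cfg.d_model
    output_head = 0 if cfg.tie_embeddings else cfg.vocab * cfg.d_model
    return cfg.n_layers * (attn + ffn) + embedding + output_head


if __name__ == "__main__":
    deep_thin = Config(vocab=32000, d_model=576, n_layers=30, n_heads=9,
                       n_kv_heads=3, ffn_hidden=1536)
    wide_shallow = Config(vocab=32000, d_model=768, n_layers=16, n_heads=12,
                          n_kv_heads=4, ffn_hidden=2048)
    variants = {
        "deep-thin": deep_thin,
        "wide-shallow": wide_shallow,
        "deep-thin, untied embeddings": replace(deep_thin, tie_embeddings=False),
        "deep-thin, full multi-head attention": replace(deep_thin, n_kv_heads=9),
    }
    for name, cfg in variants.items():
        print(f"{name}: {param_count(cfg) / 1e6:.1f}M parameters")
```

Under these assumptions the script prints about 124.6M, 125.2M, 143.0M, and 137.9M parameters. The 30-layer, 576-wide model and the 16-layer, 768-wide model land at roughly the same budget, so choosing between them is purely an architecture decision. The last two lines show why the weight-utilization techniques matter at this scale: untying the embedding adds `vocab * d_model` (about 18.4M) parameters, and full multi-head attention instead of grouped-query attention adds about 13.3M across 30 layers.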

Practical Implications

The experimental results show that MobileLLM models outperform previous sub-billion parameter models across multiple benchmarks, including zero-shot commonsense reasoning and reading comprehension. Notably, MobileLLM models achieve correctness close to that of much larger models such as LLaMA-v2 7B on API calling tasks, highlighting their potential for common on-device use cases. These advances point toward a future in which compact, efficient LLMs are deployed widely on mobile devices, reducing dependence on the cloud and lowering operational costs.

Theoretical Contributions

This paper revisits the principles guiding the scaling and architecture of LLMs for compact, efficient models. By empirically challenging the assumption that architecture matters little for sub-billion parameter models, and by demonstrating the efficacy of depth over width, the research points toward a new direction in LLM architecture design. Furthermore, immediate block-wise weight sharing, in which a transformer block is executed again immediately after itself with the same weights, increases effective depth without adding parameters and with only marginal latency overhead, offering new insight into the efficient design of neural networks.
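The scheduling idea behind immediate block-wise weight sharing can be sketched in a few lines. In this toy version each "block" is an elementwise affine map standing in for a transformer block, and the cache model is a deliberate simplification: it assumes on-chip memory holds exactly one block's weights, so a weight fetch is counted whenever the next block differs from the one just run. `make_block`, the schedule helpers, and `weight_loads` are hypothetical names for illustration, not the paper's code; the whole-stack schedule is included only as a contrast case.

```python
from typing import Callable, List

Block = Callable[[List[float]], List[float]]


def make_block(weight: float, bias: float) -> Block:
    """Toy stand-in for a transformer block: an affine map applied elementwise."""
    def block(x: List[float]) -> List[float]:
        return [weight * v + bias for v in x]
    return block


def immediate_blockwise_schedule(n_blocks: int, repeats: int = 2) -> List[int]:
    """Run each block `repeats` times back-to-back: 0, 0, 1, 1, 2, 2, ..."""
    return [i for i in range(n_blocks) for _ in range(repeats)]


def repeat_whole_stack_schedule(n_blocks: int, repeats: int = 2) -> List[int]:
    """Contrast case: run the whole stack, then run it again: 0, 1, 2, 0, 1, 2, ..."""
    return [i for _ in range(repeats) for i in range(n_blocks)]


def forward(x: List[float], blocks: List[Block], schedule: List[int]) -> List[float]:
    """Apply blocks in schedule order; repeated indices reuse the same weights."""
    for idx in schedule:
        x = blocks[idx](x)
    return x


def weight_loads(schedule: List[int]) -> int:
    """Count weight fetches, assuming the cache holds exactly one block's weights."""
    return sum(1 for i, idx in enumerate(schedule) if i == 0 or schedule[i - 1] != idx)


if __name__ == "__main__":
    blocks = [make_block(2.0, 1.0), make_block(1.0, -1.0)]
    for name, schedule in [
        ("immediate block-wise", immediate_blockwise_schedule(len(blocks))),
        ("repeat whole stack", repeat_whole_stack_schedule(len(blocks))),
    ]:
        print(f"{name}: schedule={schedule}, effective depth={len(schedule)}, "
              f"unique blocks={len(set(schedule))}, "
              f"weight loads={weight_loads(schedule)}, "
              f"output={forward([0.0], blocks, schedule)}")
```

Both schedules double the effective depth (four block executions from two unique weight sets), but under this simplified cache model the immediate schedule fetches each weight set once, while repeating the whole stack fetches each one twice. The two schedules also compute different functions (outputs `[1.0]` and `[0.0]` here), so the sharing pattern is an architectural choice fixed before training rather than a free reordering at inference time.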

Future Directions

The findings from this paper open numerous avenues for future research, particularly in exploring how compact a model can be while maintaining, or even improving, performance. Neural architecture search (NAS) tailored to LLMs could automate the discovery of optimal architectures for specific on-device applications. Additionally, assessing how MobileLLM combines with emerging model compression techniques could yield further efficiency gains. Finally, the paper's deployment-oriented results, including compatibility with post-training quantization, suggest ample scope for integration into real-world applications and for making advanced AI capabilities widely accessible on mobile devices.
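For readers unfamiliar with post-training quantization, the sketch below shows one common form of it: symmetric, per-row int8 quantization of an already trained weight matrix, with no retraining. It is a generic illustration under that assumption, not the quantization recipe evaluated in the paper, and `quantize_per_row` and `dequantize` are hypothetical names.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_per_row(w: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Map each row to the int8 range [-127, 127] with its own symmetric scale."""
    q_rows, scales = [], []
    for row in w:
        max_abs = max(abs(v) for v in row)
        scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
        # Round to the nearest code and clamp in case of floating-point overshoot.
        q_rows.append([max(-127, min(127, round(v / scale))) for v in row])
        scales.append(scale)
    return q_rows, scales


def dequantize(q_rows: List[List[int]], scales: List[float]) -> Matrix:
    """Recover approximate float weights from int8 codes and per-row scales."""
    return [[v * s for v in row] for row, s in zip(q_rows, scales)]


if __name__ == "__main__":
    weights = [[0.5, -1.0, 0.25], [0.02, -0.01, 0.0]]
    q, s = quantize_per_row(weights)
    restored = dequantize(q, s)
    worst = max(abs(a - b)
                for r0, r1 in zip(weights, restored)
                for a, b in zip(r0, r1))
    print("int8 codes:", q)
    print("max abs reconstruction error:", worst)
```

Storing one int8 code per weight plus one float scale per row cuts weight storage roughly fourfold relative to float32, at the cost of a rounding error of at most half a quantization step per weight.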

Conclusion

"MobileLLM: Optimizing Sub-billion Parameter LLMs for On-Device Use Cases" substantially advances our understanding of designing efficient, compact LLMs suited for practical deployment in on-device scenarios. By challenging existing paradigms and introducing novel architectural optimizations, this work opens the door to a future where powerful AI applications can run locally on user devices, heralding a new era of accessibility, efficiency, and privacy in AI usage.