Middleware for LLMs: Enhancing Language Agent Performance in Complex Environments through Customized Tools

Introduction

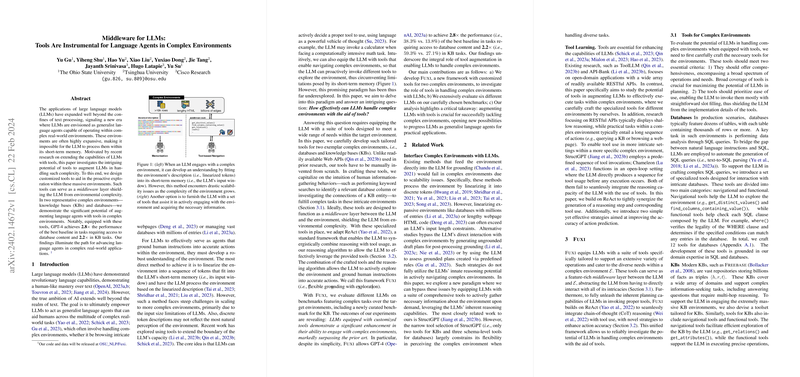

Applications of LLMs now extend far beyond text processing, heralding an era in which these models are envisioned as versatile language agents that support a broad spectrum of complex real-world tasks. This paper explores customized tools as a middleware layer that enables LLMs, specifically GPT-4, to substantially outperform baselines when navigating and executing tasks within complex databases and knowledge bases (KBs). Notably, we demonstrate a 2.8× improvement over the best baseline on database tasks and a 2.2× improvement on KB tasks.

Custom Tools: Bridging LLMs and Complex Environments

At the core of our framework, named Fuxi, is a comprehensive suite of tools designed to let GPT-4 interact proficiently with databases and knowledge bases. The tools are modeled on human information-seeking behavior during complex task execution in these environments. They span navigational aids for exploring the environment and functional aids for specific operations, such as SQL query composition for databases and multi-hop reasoning in KBs. Because an expansive or intricate environment cannot be held in an LLM's short-term memory all at once, the tools let the model fetch and process only the relevant information as it is needed.
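To make the distinction between navigational and functional tools concrete, here is a minimal sketch for the database case. It is not Fuxi's actual tool set: the function names (`list_tables`, `describe_table`, `distinct_values`, `run_sql`), the in-memory SQLite backend, and the toy `schools` table are all assumptions chosen for illustration.

```python
import sqlite3
from typing import List, Tuple


def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory database (hypothetical data, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE schools (id INTEGER PRIMARY KEY, name TEXT, county TEXT);
        INSERT INTO schools (name, county) VALUES
            ('Lincoln High', 'Alameda'),
            ('Roosevelt Middle', 'Alameda'),
            ('Mission Elementary', 'Fresno');
        """
    )
    return conn


# --- Navigational tools: let the agent explore the schema on demand ---

def list_tables(conn: sqlite3.Connection) -> List[str]:
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return [r[0] for r in rows]


def describe_table(conn: sqlite3.Connection, table: str) -> List[Tuple[str, str]]:
    """Return (column name, declared type) pairs for one table."""
    if table not in list_tables(conn):
        raise ValueError(f"unknown table: {table}")
    rows = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    return [(r[1], r[2]) for r in rows]


def distinct_values(conn: sqlite3.Connection, table: str, column: str,
                    limit: int = 5) -> List[object]:
    """Peek at a few distinct values so the agent can ground its literals."""
    if column not in [name for name, _ in describe_table(conn, table)]:
        raise ValueError(f"unknown column: {table}.{column}")
    rows = conn.execute(
        f'SELECT DISTINCT "{column}" FROM "{table}" ORDER BY 1 LIMIT ?', (limit,)
    ).fetchall()
    return [r[0] for r in rows]


# --- Functional tool: execute a composed query, truncating the output ---

def run_sql(conn: sqlite3.Connection, query: str, max_rows: int = 10) -> List[tuple]:
    return conn.execute(query).fetchmany(max_rows)


if __name__ == "__main__":
    conn = make_demo_db()
    print(list_tables(conn))
    print(describe_table(conn, "schools"))
    print(distinct_values(conn, "schools", "county"))
    print(run_sql(conn, "SELECT COUNT(*) FROM schools WHERE county = 'Alameda'"))
```

Each tool returns a small, bounded result, so the agent never needs the full schema or table contents in its prompt; that is the design point the paragraph above describes.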

Methodology and Evaluation

Our methodology emphasizes the synergy between the crafted tools and a reasoning algorithm, ReAct, which enables the LLMs to use the tools effectively. In extensive evaluations across six different LLMs on curated benchmarks featuring demanding tasks, Fuxi consistently outperformed existing baselines, substantially improving the LLMs' ability to interact with and execute complex tasks in both databases and KBs. For databases, our evaluation used the Bird dataset, which is notable for its complexity. For KBs, we introduced a newly compiled benchmark, KBQA-Agent, to assess performance on intricate questions that require deep engagement with the KB.
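The interplay between tools and a ReAct-style loop can be sketched as follows for the KB case. This is an illustrative skeleton under stated assumptions, not the paper's implementation: the toy triples, the tool names `get_relations` and `get_neighbors`, and the `scripted_policy` function (a fixed stand-in for the LLM) are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy knowledge base of (subject, relation, object) triples (fictional data).
TRIPLES = [
    ("Ada", "wrote", "Book1"),
    ("Ada", "born_in", "Townsville"),
    ("Book1", "published_by", "Acme Press"),
]


def get_relations(entity: str) -> List[str]:
    """Navigational tool: which outgoing relations does this entity have?"""
    return sorted({r for s, r, _ in TRIPLES if s == entity})


def get_neighbors(entity: str, relation: str) -> List[str]:
    """Functional tool: follow one relation from an entity (one reasoning hop)."""
    return sorted({o for s, r, o in TRIPLES if s == entity and r == relation})


TOOLS: Dict[str, Callable[..., List[str]]] = {
    "get_relations": get_relations,
    "get_neighbors": get_neighbors,
}

# One trace entry: (thought, action name, action arguments, observation).
TraceEntry = Tuple[str, str, Tuple[str, ...], List[str]]
Step = Tuple[str, str, Tuple[str, ...]]


def scripted_policy(question: str, history: List[TraceEntry]) -> Step:
    """Stand-in for the LLM: a fixed script that reacts to prior observations."""
    if len(history) == 0:
        return ("First, see what is known about Ada.", "get_relations", ("Ada",))
    if len(history) == 1:
        return ("'wrote' should lead to her book.", "get_neighbors", ("Ada", "wrote"))
    if len(history) == 2:
        book = history[-1][3][0]  # reuse the previous observation
        return ("Now find the publisher.", "get_neighbors", (book, "published_by"))
    return ("I have the answer.", "finish", (history[-1][3][0],))


def react_loop(question: str,
               policy: Callable[[str, List[TraceEntry]], Step],
               tools: Dict[str, Callable[..., List[str]]],
               max_steps: int = 8) -> Tuple[Optional[str], List[TraceEntry]]:
    """Alternate thought -> action -> observation until the policy finishes."""
    history: List[TraceEntry] = []
    for _ in range(max_steps):
        thought, action, args = policy(question, history)
        if action == "finish":
            return args[0], history
        observation = tools[action](*args)
        history.append((thought, action, args, observation))
    return None, history  # step budget exhausted without an answer


if __name__ == "__main__":
    answer, trace = react_loop("Who published Ada's book?", scripted_policy, TOOLS)
    for thought, action, args, obs in trace:
        print(f"Thought: {thought}\nAction: {action}{args}\nObservation: {obs}\n")
    print("Answer:", answer)
```

In a real agent, `scripted_policy` would be replaced by a call to the LLM that receives the question and the trace so far and returns the next thought and action. The loop itself stays the same.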

Insights and Implications

The substantial improvements observed with Fuxi underscore both the potential of and the need for tool augmentation when LLMs handle complex real-world applications. The paper sets a new bar for LLM performance in intricate environments and opens pathways for further research into integrating LLMs with a wider variety of complex applications.

Our analysis also shows that, despite these advances, considerable room for improvement remains, especially in environments without straightforward query interfaces. Furthermore, the tools were designed primarily from our own intuition and experience, which points to the need for a more structured approach to tool development in order to realize even greater performance gains.

Future Prospects

Looking ahead, embedding LLMs in an even broader range of complex environments is a promising avenue. In addition, a more principled strategy for tool development could further improve the efficacy of LLMs as generalist language agents. As we continue to push the boundaries of what LLMs can achieve, customized tools are likely to play a pivotal role in making these models more capable and versatile agents for real-world problem-solving.

Acknowledgements and Support

This work was supported by collaborative insights from the THU KEG and OSU NLP groups, alongside practical aid from external partners including Cisco Research. This collective endeavor underlines the importance of communal effort in advancing AI research and its applications.