OlympiadBench: Elevating Benchmark Challenges in AI with Olympiad-Level Bilingual Multimodal Scientific Problems

Introduction to OlympiadBench

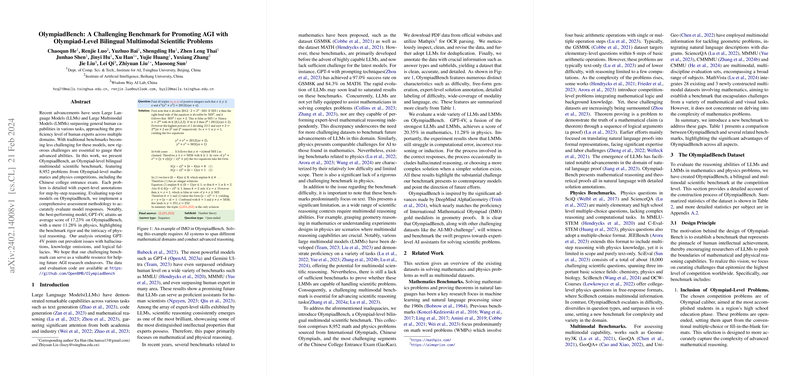

Rapid advances in Large Language Models (LLMs) and Large Multimodal Models (LMMs) call for more rigorous evaluation tools. OlympiadBench addresses this need with a benchmark of 8,952 problems drawn from high-level mathematics and physics competitions, with a focus on Olympiad-level difficulty. The benchmark stands out for being bilingual (English and Chinese) and multimodal, and every problem comes with expert annotations of the step-by-step reasoning. Notably, the best-performing model, GPT-4V, achieves an average score of only 17.23%, underscoring how hard OlympiadBench is, particularly for physical reasoning and problem-solving.

Key Features of OlympiadBench

- Comprehensive Problem Set: Drawing on problems from prestigious Olympiads and the Chinese college entrance exam, OlympiadBench offers a diverse range of challenges designed to test the limits of current AI capabilities.

- Expert Annotations: Every problem includes detailed expert annotations that lay out the step-by-step reasoning needed to solve it.

- Bilingual and Multimodal Approach: By offering problems in both English and Chinese and pairing text with visual content, OlympiadBench tests whether models can reason across languages and across media.

- Robust Evaluation Methodology: A thorough evaluation methodology scores model responses and analyzes their errors, revealing common issues in AI-generated solutions such as hallucinations, knowledge omissions, and logical fallacies.
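The text does not specify how responses are scored. Purely as an illustration, a rule-based final-answer checker could compare a model's answer with the reference numerically when both parse as numbers and fall back to string matching otherwise. The minimal Python sketch below assumes that approach; the function names (`normalize`, `parse_number`, `answers_match`, `accuracy`) and the tolerance values are hypothetical and are not OlympiadBench's actual scorer.

```python
# Hypothetical sketch of a final-answer checker; NOT OlympiadBench's official scorer.
from fractions import Fraction
import math


def normalize(answer: str) -> str:
    """Drop LaTeX dollar signs, all spaces, and trailing periods."""
    return answer.replace("$", "").strip().rstrip(".").replace(" ", "")


def parse_number(answer: str):
    """Return a float for inputs like '2', '-0.5', '3/4' or '1.2e-3'; otherwise None."""
    try:
        return float(Fraction(normalize(answer)))
    except (ValueError, ZeroDivisionError):
        return None


def answers_match(predicted: str, reference: str, rel_tol: float = 1e-6) -> bool:
    """Numeric comparison with an assumed relative tolerance, else exact string match."""
    p, r = parse_number(predicted), parse_number(reference)
    if p is not None and r is not None:
        return math.isclose(p, r, rel_tol=rel_tol, abs_tol=1e-12)
    return normalize(predicted) == normalize(reference)


def accuracy(predictions, references) -> float:
    """Percentage of problems whose predicted final answer matches the reference."""
    correct = sum(answers_match(p, r) for p, r in zip(predictions, references))
    return 100.0 * correct / len(references)
```

With this sketch, `answers_match("3/4", "0.75")` is true, while `answers_match("x + 1", "1 + x")` is false because non-numeric answers are only compared as strings; recognizing symbolically equivalent expressions would require a computer algebra system such as SymPy.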

Challenges Highlighted by OlympiadBench

Results on OlympiadBench expose several key challenges for AI models, particularly in solving physics problems and producing error-free reasoning. The benchmark's difficulty shows in the low success rates, which reveal a significant gap between current models and human experts. These results indicate considerable room for improvement in AI and AGI research.

Implications for Future Research

OlympiadBench sets a new standard for the complexity and rigor of AI benchmarks. It challenges the research community to build models capable of higher levels of scientific reasoning, and it also provides a new dataset for training and testing next-generation AI systems. Because its problems are bilingual and multimodal, it encourages work on models that can understand and process complex scientific text and visuals in multiple languages.

Conclusion

OlympiadBench is a significant contribution to the field of AI, raising the bar for what counts as a challenging benchmark. Its emphasis on Olympiad-level problems underscores the need for continued innovation in AI research to reach and surpass human expert levels of problem-solving and reasoning. As the AI community works toward AGI, benchmarks like OlympiadBench will help measure progress and steer research toward the hardest open challenges.