Recursive Video Captioning for Extended Videos: An Insight into Video ReCap

Introduction to Video ReCap

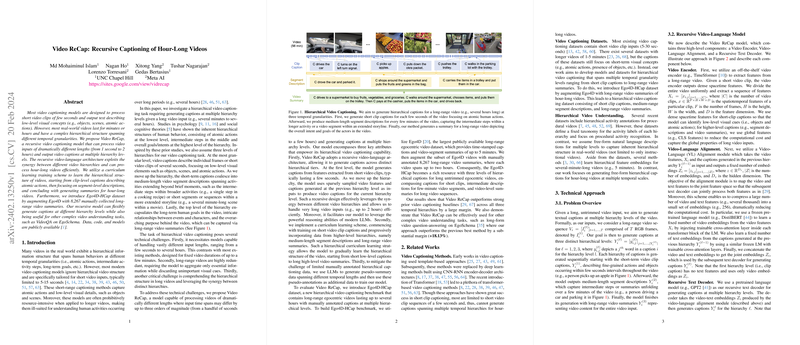

Video ReCap is a model for hierarchical video captioning. Where conventional methods mostly target short clips, Video ReCap accepts inputs ranging from one second to two hours. Its recursive video-language architecture processes video at several temporal granularities and generates a description at each level of the hierarchy, so the same video can be read either as a sequence of fine-grained actions or as a coarse summary.

The Challenge of Long-Form Video Captioning

Long-form video raises two problems. First, a model must handle inputs of very different lengths, along with the redundancy typical of extended footage. Second, long videos have hierarchical structure: atomic actions combine into activities, which in turn serve overarching themes or goals, and the model has to capture all three. Video ReCap addresses both with a recursive architecture that draws on different levels of video detail, trained with a curriculum learning scheme.
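The idea of reusing lower-level output at higher levels can be illustrated with a minimal sketch. It is not the authors' implementation: `caption_hierarchy`, `toy_describe`, and `clips_per_segment` are hypothetical names, the three-level split is an assumption chosen to mirror the actions, activities, and goals described above, and the toy captioner sees only text, whereas the real model also works from video features.

```python
from typing import Callable, Dict, List

# Hypothetical interface: takes lower-level texts plus a hierarchy level
# and returns one caption. A real system would call a trained model here.
Describe = Callable[[List[str], int], str]


def chunk(items: List[str], size: int) -> List[List[str]]:
    """Split a list into consecutive groups of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


def caption_hierarchy(
    clip_captions: List[str],
    describe: Describe,
    clips_per_segment: int,
) -> Dict[str, object]:
    """Build three caption levels bottom-up from short clip captions.

    Level 1: the clip captions themselves (atomic actions).
    Level 2: one description per group of clips (activities).
    Level 3: one summary over all segment descriptions (overall goal).
    """
    segments = [
        describe(group, 2) for group in chunk(clip_captions, clips_per_segment)
    ]
    summary = describe(segments, 3)
    return {"clips": clip_captions, "segments": segments, "summary": summary}


def toy_describe(texts: List[str], level: int) -> str:
    """Stand-in captioner that only records what it was given."""
    return f"L{level}({' | '.join(texts)})"


if __name__ == "__main__":
    result = caption_hierarchy(
        ["opens fridge", "takes milk", "pours milk"], toy_describe, 2
    )
    print(result["segments"])
    print(result["summary"])
```

Because each level consumes the compact output of the level below it, the amount of input at the top level grows with the number of segments rather than the number of frames, which is one plausible reading of why a recursive design suits very long videos.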

Recursive Architecture and Hierarchical Curriculum Learning

Video ReCap has three main components: a video encoder that extracts features, a video-language (VL) alignment module that aligns video features with text features, and a recursive text decoder that generates captions at each hierarchy level. The recursive structure lets the model handle very long inputs efficiently while preserving caption quality. The curriculum follows the way people perceive activity: training starts with atomic actions, moves on to intermediate steps, and ends with overarching goals or intents, so the model learns the hierarchy from the bottom up.
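The staged training order can be sketched as a simple schedule. This is an illustration under assumptions, not the published training recipe: the level names in `LEVELS`, the `epochs_per_stage` parameter, and the choice to train on one level at a time without revisiting earlier levels are all hypothetical.

```python
from typing import Dict, Iterator, List, Tuple

# Hypothetical level names, ordered from atomic actions to overall goals.
LEVELS = ("clip", "segment", "video")


def curriculum(
    examples_by_level: Dict[str, List[str]],
    epochs_per_stage: int = 1,
) -> Iterator[Tuple[int, str, str]]:
    """Yield (stage, level, example) in curriculum order.

    Stage 1 covers only clip-level examples, stage 2 only segment-level
    examples, and stage 3 only video-level examples. Levels missing from
    the input are skipped silently.
    """
    for stage, level in enumerate(LEVELS, start=1):
        for _ in range(epochs_per_stage):
            for example in examples_by_level.get(level, []):
                yield stage, level, example


if __name__ == "__main__":
    data = {
        "clip": ["C picks up a knife", "C cuts an onion"],
        "segment": ["C prepares vegetables"],
        "video": ["C cooks dinner"],
    }
    for stage, level, example in curriculum(data):
        # A real training loop would run an optimizer step here.
        print(stage, level, example)
```

A practical variant might mix earlier levels back into later stages to limit forgetting; the sketch leaves that out to keep the ordering easy to see.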

Ego4D-HCap Dataset Contribution

Video ReCap is evaluated on Ego4D-HCap, a new benchmark introduced by the authors. The dataset pairs long-range egocentric videos with captions annotated at multiple hierarchy levels, which makes it suitable both for validating Video ReCap and for further research on complex video understanding tasks.

Empirical Evidence and Future Prospects

The reported results show Video ReCap outperforming existing video captioning baselines at every level of the temporal hierarchy. It also performs well on long-form video question answering on the EgoSchema dataset, which illustrates the utility of hierarchical captions for complex video understanding tasks. Possible future directions include real-time caption generation, interactive video understanding, and video-based dialogue, all aimed at making video understanding models more robust and versatile.

Concluding Remarks

Video ReCap advances video understanding, particularly for long-form videos, by modeling their hierarchical structure explicitly. Its recursive architecture, combined with curriculum learning, sets a new benchmark in the field and opens further avenues for research and application. For Video ReCap and similar models, the potential to improve how we interact with and understand video content remains largely untapped.