Enhancing Visual Question Generation with Contrastive Multimodal Learning

Introduction to Contrastive Visual Question Generation (ConVQG)

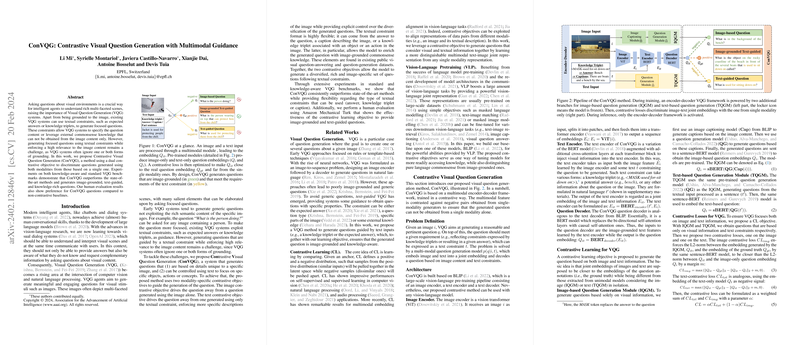

Visual Question Generation (VQG) is an essential task for building AI systems that can hold meaningful interactions about visual environments. Contrastive Visual Question Generation (ConVQG) improves how multimodal information, namely image content and textual constraints, is integrated into the question-generation process. Using a dual contrastive learning objective, ConVQG distinguishes questions generated purely from image details from those that also draw on text-based guidance, such as knowledge triplets or expected answers. As a result, the generated questions are both grounded in the visual context and consistent with the specified textual constraints, which supports a deeper understanding of, and interaction with, the visual data.
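To make the idea of a dual contrastive objective more concrete, here is a minimal sketch in plain Python. It is not ConVQG's actual loss. It assumes a triplet-margin formulation over cosine similarity in which the ground-truth question embedding acts as the anchor, the question produced from image plus text is the positive, and two single-modality questions (image-only and text-only) are the negatives. The function names, the `margin` value of 0.2, and the choice of a text-only negative are all hypothetical choices made for illustration.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def margin_term(anchor, positive, negative, margin):
    """Hinge loss: zero once the anchor is more similar to the positive
    than to the negative by at least `margin`."""
    return max(0.0, margin - cosine(anchor, positive) + cosine(anchor, negative))


def dual_contrastive_loss(q_gt, q_multimodal, q_image_only, q_text_only, margin=0.2):
    """Hypothetical dual objective (assumption, not the paper's exact form):
    pull the image+text question embedding toward the ground-truth question
    and push it away from both single-modality question embeddings."""
    return (margin_term(q_gt, q_multimodal, q_image_only, margin)
            + margin_term(q_gt, q_multimodal, q_text_only, margin))


if __name__ == "__main__":
    gt = [1.0, 0.0, 0.0]
    image_only = [0.0, 1.0, 0.0]
    text_only = [0.0, 0.0, 1.0]
    # A multimodal question close to the ground truth incurs no loss.
    print(dual_contrastive_loss(gt, [0.9, 0.1, 0.0], image_only, text_only))  # 0.0
    # A multimodal question that collapses onto the image-only one is penalized.
    print(dual_contrastive_loss(gt, image_only, image_only, text_only))  # 0.4
```

In a real training loop this would run on batched tensors from learned encoders, and the hinge formulation could be swapped for an InfoNCE-style loss; the sketch only shows how two single-modality negatives enter one objective.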

The Need for Advanced VQG Mechanisms

The ability to generate pertinent, contextually grounded questions about images is central to building conversational agents and visual dialog systems that interact with users naturally and effectively. Early VQG models often failed to exploit the rich semantic content of images, producing generic or irrelevant questions. Incorporating textual constraints to steer question specificity and relevance adds another challenge: the model must stay faithful to both the visual scene and the textual guidance. ConVQG tackles these issues by combining multimodal guidance with contrastive learning, with the aim of producing questions that are highly relevant to both.

Key Innovations and Methodology

The ConVQG framework aims to generate questions that reflect specific image details while adhering to textual constraints. Its training uses contrastive objectives that steer generation toward questions representing a genuine fusion of image content and textual information, rather than the image alone. Crucially, this setup lets the model draw on external commonsense knowledge, represented as knowledge triplets, to enrich questions with context that may not be discernible from the image alone. The result is a generator that produces diverse, rich, and relevant questions, an improvement over previous systems.
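Before a knowledge triplet or an expected answer can guide generation, it has to be turned into something a text encoder can read. The sketch below shows one simple way to do that: serializing (head, relation, tail) triplets into a short string. The ConceptNet-style relation names (such as `UsedFor`), the `Triplet` class, and the `"answer: ..."` format are assumptions for illustration; the exact input format ConVQG uses may differ.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Triplet:
    """Hypothetical container for a commonsense knowledge triplet."""
    head: str
    relation: str
    tail: str


def relation_to_words(relation: str) -> str:
    """Split a CamelCase relation name (e.g. "UsedFor") into lowercase words."""
    words, current = [], ""
    for ch in relation:
        if ch.isupper() and current:
            words.append(current)
            current = ch
        else:
            current += ch
    if current:
        words.append(current)
    return " ".join(w.lower() for w in words)


def serialize_constraint(triplets: Iterable[Triplet], answer: Optional[str] = None) -> str:
    """Join triplets (and an optional expected answer) into one text constraint."""
    parts = [f"{t.head} {relation_to_words(t.relation)} {t.tail}" for t in triplets]
    if answer is not None:
        parts.append(f"answer: {answer}")
    return "; ".join(parts)


if __name__ == "__main__":
    constraint = serialize_constraint([Triplet("umbrella", "UsedFor", "rain")], answer="rain")
    print(constraint)  # umbrella used for rain; answer: rain
```

Turning structured knowledge into natural-language-like text keeps the text branch simple: the same encoder can handle triplets, answers, or both, without a separate graph encoder.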

Evaluation and Human Assessment

Evaluations on standard and knowledge-aware VQG benchmarks show that ConVQG outperforms state-of-the-art methods. Human evaluation studies on Amazon Mechanical Turk corroborate these results: annotators preferred ConVQG-generated questions for their relevance to both the image content and the textual guidance. These findings indicate that the contrastive objectives are effective at aligning the two modalities during question generation.

Implications and Future Horizons

ConVQG is a significant step forward for VQG, offering a robust framework for generating contextually rich, textually guided questions. Its success opens new avenues for research in multimodal learning and visual dialog systems, particularly on how textual constraints can be integrated to support more meaningful interactions. Further exploration of scalable contrastive learning methods and their applications in multimodal settings is a promising direction for improving AI systems' ability to understand and interact with complex visual environments.

In conclusion, by aligning textual guidance with image content through a dual contrastive learning objective, ConVQG sets a new state of the art for Visual Question Generation and paves the way for more intuitive, context-aware AI systems.