Advances in Any-to-Any Multimodal Conversations with AnyGPT

Introduction

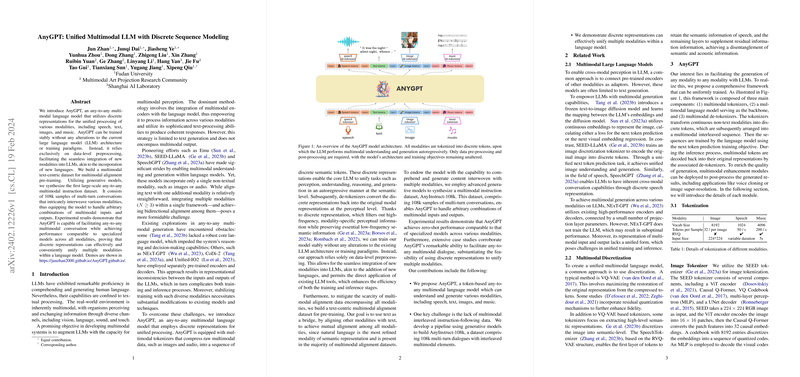

In the evolving landscape of machine learning, integrating multimodal inputs and outputs into large language models (LLMs) remains a significant challenge. LLMs have traditionally excelled at text but struggled with other modalities such as images, speech, and music. The paper introduces AnyGPT, an any-to-any multimodal LLM. Using discrete sequence modeling, it integrates a diverse set of modalities while retaining the architecture and training paradigms of existing LLMs. This is achieved through a combination of multimodal tokenization, data synthesis with generative models, and a refined data alignment process.

Multimodal Encapsulation via Tokenization

AnyGPT distinguishes itself by using discrete representations to encode and decode multimodal data. Continuous, modality-specific signals are transformed into sequences of discrete tokens, which the LLM then processes autoregressively, just as it processes text. Because these tokens carry semantic information, the model can understand and generate text, speech, images, and music without any change to the LLM's underlying architecture. Each modality has its own dedicated tokenizer, with an encoding strategy tailored to the characteristics of that data.
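Because every modality is reduced to integer codes, connecting those codes to an LLM largely comes down to enlarging its vocabulary. The following is a minimal sketch of that idea under stated assumptions. The `UnifiedVocab` class, the codebook sizes, and the per-modality start/end marker tokens are all hypothetical, and stand-in integer codes take the place of real modality tokenizers. It illustrates the general technique, not AnyGPT's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class UnifiedVocab:
    """Hypothetical sketch: one shared id space for text plus per-modality codebooks."""

    base_size: int                   # size of the original text vocabulary (assumed)
    codebook_sizes: dict[str, int]   # e.g. {"image": 8192}; sizes are assumptions
    offsets: dict[str, int] = field(init=False)
    markers: dict[str, tuple[int, int]] = field(init=False)
    size: int = field(init=False)

    def __post_init__(self) -> None:
        # New ids are appended after the text vocabulary, so existing text ids are unchanged.
        next_id = self.base_size
        self.offsets, self.markers = {}, {}
        for modality, n_codes in self.codebook_sizes.items():
            # Two hypothetical marker tokens per modality: segment start and segment end.
            self.markers[modality] = (next_id, next_id + 1)
            next_id += 2
            # The modality's codes occupy the next n_codes ids.
            self.offsets[modality] = next_id
            next_id += n_codes
        self.size = next_id

    def encode(self, modality: str, codes: list[int]) -> list[int]:
        """Wrap a tokenizer's local codes as a marker-delimited run of global ids."""
        n_codes = self.codebook_sizes[modality]
        if any(not 0 <= c < n_codes for c in codes):
            raise ValueError(f"code out of range for {modality}")
        start, end = self.markers[modality]
        return [start] + [self.offsets[modality] + c for c in codes] + [end]

    def decode(self, token_ids: list[int]) -> list[tuple[str, list[int]]]:
        """Split a mixed sequence into (modality, ids) segments; non-text ids become local codes."""
        start_to_modality = {start: m for m, (start, _) in self.markers.items()}
        segments: list[tuple[str, list[int]]] = []
        modality, buf = "text", []
        for t in token_ids:
            if modality == "text" and t in start_to_modality:
                if buf:  # flush pending text before the modality segment begins
                    segments.append(("text", buf))
                modality, buf = start_to_modality[t], []
            elif modality != "text" and t == self.markers[modality][1]:
                offset = self.offsets[modality]
                segments.append((modality, [x - offset for x in buf]))
                modality, buf = "text", []
            else:
                buf.append(t)
        if modality != "text":
            raise ValueError(f"unterminated {modality} segment")
        if buf:
            segments.append(("text", buf))
        return segments


vocab = UnifiedVocab(base_size=32000, codebook_sizes={"image": 8192, "speech": 1024})
seq = [101, 2023] + vocab.encode("image", [5, 17, 4000])  # stand-in text ids, then image codes
print(seq)                # [101, 2023, 32000, 32007, 32019, 36002, 32001]
print(vocab.decode(seq))  # [('text', [101, 2023]), ('image', [5, 17, 4000])]
```

In this sketch, placing the new ids after the text vocabulary leaves every original text id valid, so only the embedding and output layers would need to grow. That is one plausible way to add modalities while keeping an existing LLM's architecture intact.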

Semantics-Driven Data Synthesis

To address the scarcity of aligned multimodal datasets, AnyGPT introduces AnyInstruct-108k, an instruction dataset synthesized with generative models. It contains 108k multi-turn conversations with meticulously interleaved multimodal elements. This curated dataset equips AnyGPT to handle complex conversational contexts involving any combination of the supported modalities, improving both its understanding of multimodal input and its ability to generate coherent, contextually appropriate multimodal responses.
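To make "interleaved multimodal elements" concrete, here is a minimal sketch of one multi-turn sample. Each turn holds an ordered list of text and non-text segments, and the whole conversation is flattened into a single interleaved string. The schema, role names, file paths, and `<image>`-style tags are hypothetical placeholders, not the actual AnyInstruct-108k format. In a full pipeline, each non-text segment would presumably be replaced by the discrete tokens of its modality rather than by a tagged path.

```python
from dataclasses import dataclass

MODALITIES = {"text", "image", "speech", "music"}
ROLES = {"user", "assistant"}  # hypothetical role names


@dataclass(frozen=True)
class Segment:
    modality: str  # one of MODALITIES
    content: str   # the text itself, or a hypothetical path to a synthesized media file


@dataclass(frozen=True)
class Turn:
    role: str
    segments: tuple[Segment, ...]  # ordered, so text and media can interleave


def validate(conversation: list[Turn]) -> None:
    """Reject unknown roles or modalities before rendering."""
    for turn in conversation:
        if turn.role not in ROLES:
            raise ValueError(f"unknown role: {turn.role}")
        for seg in turn.segments:
            if seg.modality not in MODALITIES:
                raise ValueError(f"unknown modality: {seg.modality}")


def render(conversation: list[Turn]) -> str:
    """Flatten a conversation into one interleaved string using placeholder tags."""
    validate(conversation)
    lines = []
    for turn in conversation:
        parts = []
        for seg in turn.segments:
            if seg.modality == "text":
                parts.append(seg.content)
            else:
                # Non-text content is wrapped in hypothetical <modality>...</modality> tags.
                parts.append(f"<{seg.modality}>{seg.content}</{seg.modality}>")
        lines.append(f"[{turn.role}]: " + " ".join(parts))
    return "\n".join(lines)


def modalities_used(conversation: list[Turn]) -> list[str]:
    """Sorted list of the distinct modalities appearing anywhere in the conversation."""
    return sorted({seg.modality for turn in conversation for seg in turn.segments})


conversation = [
    Turn("user", (Segment("speech", "audio/q1.wav"),)),
    Turn("assistant", (Segment("text", "Here is a calm piano piece:"), Segment("music", "music/a1.wav"))),
    Turn("user", (Segment("text", "Draw a matching scene."),)),
    Turn("assistant", (Segment("image", "img/a2.png"),)),
]
print(render(conversation))
print(modalities_used(conversation))  # ['image', 'music', 'speech', 'text']
```

Keeping segments ordered within a turn is what lets a single response mix, say, a sentence of text followed by a music clip, which is the kind of any-to-any exchange the dataset is meant to cover.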

Experimental Validation

The model is evaluated across a range of tasks to test how well it handles multimodal conversations. In zero-shot evaluations, AnyGPT achieves performance comparable to specialized models dedicated to single modalities. This evidence suggests that discrete representations can bridge different modes of human-computer interaction within a unified linguistic framework.

Theoretical and Practical Implications

The conceptualization of AnyGPT lays a foundation for theoretical advancements in understanding how discrete representations can encapsulate and convey multimodal information. Practically, it paves the way for the development of more sophisticated and versatile AI systems capable of engaging in any-to-any multimodal dialogues. This holds promise for enhancing user experience in applications ranging from virtual assistants to interactive AI in gaming and education.

Looking Forward

Despite its impressive capabilities, AnyGPT invites further exploration and optimization. Future efforts could focus on expanding the model's comprehension of modality-specific nuances and improving the fidelity of generated multimodal content. Additionally, the creation of more comprehensive benchmarks for evaluating any-to-any multimodal interactions remains a critical area for ongoing research.

AnyGPT marks a significant step towards the seamless integration of multiple modalities within LLM frameworks. Its innovative approach to discrete sequence modeling not only enriches the model's interactive capabilities but also opens new avenues for the development of genuinely multimodal AI systems.