A Novel Approach to Post-Training Quantization for Hyper-Scale Transformers

Introduction

The evolution of generative AI models, particularly Transformers, has produced increasingly large architectures. Their scale, while contributing to their superior performance, poses a significant challenge when deploying them on resource-constrained devices. This paper presents a new post-training quantization (PTQ) algorithm named aespa, designed to enable the efficient deployment of hyper-scale Transformer models on such devices.
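For readers new to PTQ, the basic operation is mapping trained floating-point weights onto a small integer grid without retraining. The sketch below uses plain per-output-channel, asymmetric round-to-nearest (RTN) quantization. It is a generic baseline, not aespa, and the function name `quantize_rtn` and the bit widths tried are illustrative assumptions. It also hints at why INT2 is hard: each bit removed roughly doubles the quantization step.

```python
import numpy as np

def quantize_rtn(w: np.ndarray, bits: int) -> np.ndarray:
    """Asymmetric per-row (per-output-channel) round-to-nearest quantization.

    Each row of `w` gets its own scale and zero-point; the result is
    de-quantized back to float so it can be compared with `w` directly.
    """
    qmax = 2 ** bits - 1                               # 3 for INT2, 15 for INT4
    w_min = w.min(axis=1, keepdims=True)
    w_max = w.max(axis=1, keepdims=True)
    scale = np.maximum(w_max - w_min, 1e-8) / qmax     # guard against zero range
    zero = np.round(-w_min / scale)                    # integer zero-point
    q = np.clip(np.round(w / scale) + zero, 0, qmax)   # integer codes in [0, qmax]
    return (q - zero) * scale                          # de-quantized weights

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 128)).astype(np.float32)
for bits in (8, 4, 3, 2):
    err = np.mean((w - quantize_rtn(w, bits)) ** 2)
    print(f"INT{bits}: mean squared weight error = {err:.5f}")
```

With only four representable levels per channel at INT2, the reconstruction error is much larger than at INT4 or INT8, which is the regime where more careful quantization objectives matter most.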

The Challenge with Current PTQ Schemes

Previous PTQ methods have quantized smaller models successfully but run into limitations with large-scale Transformers, primarily because of the difficulty of handling inter-layer dependencies within the Transformer architecture (for example, between the query, key, and value projections of an attention module). Traditional schemes either ignore these dependencies by quantizing each layer in isolation, or capture them through block-wise reconstruction at a significant computational cost, which hinders their practical application.
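The inter-layer dependency can be made concrete with a short derivation. Assume (notation introduced here for illustration) calibration activations X, query and key weights W_Q and W_K, and their quantization errors ΔW_Q and ΔW_K, so that Q = X W_Q, K = X W_K, Q̂ = X(W_Q + ΔW_Q) and K̂ = X(W_K + ΔW_K). The pre-softmax attention scores then change by:

```latex
\hat{Q}\hat{K}^\top - QK^\top
  = X\,\Delta W_Q\,W_K^\top X^\top
  + X\,W_Q\,\Delta W_K^\top X^\top
  + X\,\Delta W_Q\,\Delta W_K^\top X^\top
```

The query error is weighted by the key weights, the key error by the query weights, and the last term couples the two errors. A per-layer loss such as ‖X ΔW_Q‖²_F sees none of these interactions, whereas reconstructing the whole block does, at a higher cost.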

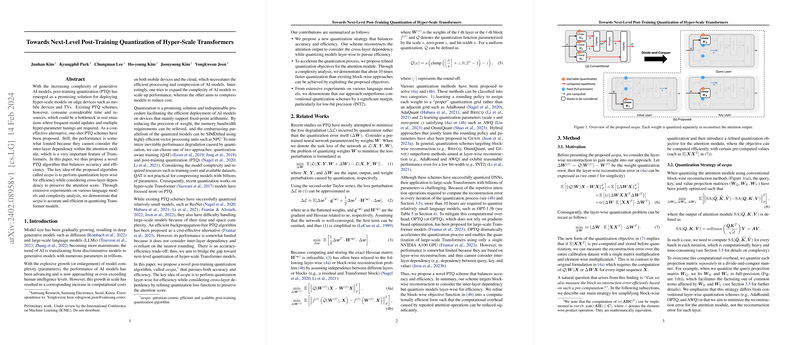

The Proposed aespa Algorithm

The aespa algorithm introduces a layer-wise quantization process that nonetheless accounts for these cross-layer dependencies: it refines the quantization loss functions so that the attention scores, which are crucial in Transformer models, are preserved. Quantizing layer by layer keeps the cost low, while targeting the attention scores protects accuracy. This balance between accuracy and efficiency differentiates aespa from existing PTQ schemes and makes it a cost-effective alternative that requires neither extensive model updates nor hyper-parameter tuning.
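The contrast between a plain layer-wise objective and one that targets attention scores can be sketched in a toy single-head setting. This is an illustrative NumPy sketch, not aespa's actual loss functions, which are not spelled out here; the helper names `layerwise_loss` and `attention_score_loss`, the single-head setup, the weight layout, and the minimal round-to-nearest quantizer included for self-containment are all assumptions.

```python
import numpy as np

def quantize_rtn(w, bits):
    # Per-row (per-output-channel) asymmetric round-to-nearest quantization,
    # returned in de-quantized float form. Illustrative baseline only.
    qmax = 2 ** bits - 1
    lo = w.min(axis=1, keepdims=True)
    hi = w.max(axis=1, keepdims=True)
    scale = np.maximum(hi - lo, 1e-8) / qmax
    zero = np.round(-lo / scale)
    return (np.clip(np.round(w / scale) + zero, 0, qmax) - zero) * scale

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def layerwise_loss(x, w, w_hat):
    # Error of a single linear layer in isolation: ||X W^T - X W_hat^T||_F^2.
    return float(np.sum((x @ w.T - x @ w_hat.T) ** 2))

def attention_score_loss(x, w_q, w_k, w_q_hat, w_k_hat):
    # Error in the attention probabilities softmax(Q K^T / sqrt(d_head)),
    # which depend jointly on the quantized query AND key weights.
    d_head = w_q.shape[0]
    a = softmax((x @ w_q.T) @ (x @ w_k.T).T / np.sqrt(d_head))
    a_hat = softmax((x @ w_q_hat.T) @ (x @ w_k_hat.T).T / np.sqrt(d_head))
    return float(np.sum((a - a_hat) ** 2))

# Toy single-head setting; weights stored as (d_out, d_in) and applied as x @ w.T.
rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 32, 64, 16
x = rng.standard_normal((n_tokens, d_model))
w_q = rng.standard_normal((d_head, d_model)) / np.sqrt(d_model)
w_k = rng.standard_normal((d_head, d_model)) / np.sqrt(d_model)

for bits in (4, 2):
    w_q_hat, w_k_hat = quantize_rtn(w_q, bits), quantize_rtn(w_k, bits)
    print(f"INT{bits}: "
          f"layer-wise(Q)={layerwise_loss(x, w_q, w_q_hat):.4f}  "
          f"layer-wise(K)={layerwise_loss(x, w_k, w_k_hat):.4f}  "
          f"attention-score={attention_score_loss(x, w_q, w_k, w_q_hat, w_k_hat):.6f}")
```

The layer-wise loss scores W_Q and W_K independently, while the attention-score loss reacts to how their errors combine inside QK^T. The text's point is that aespa aims to capture the latter kind of signal without paying for full block-wise reconstruction.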

Key Contributions and Findings

- A new quantization strategy is proposed that preserves the attention module's outputs while still optimizing layer by layer for efficiency. This aims to keep the performance of hyper-scale models from being compromised during quantization.

- Refined quantization objectives for the attention module significantly accelerate the quantization process: experiments show that aespa quantizes about ten times faster than existing block-wise approaches.

- Extensive experiments reveal that aespa consistently outperforms conventional PTQ schemes, notably in low-bit precision scenarios such as INT2, highlighting its robustness and versatility in quantizing Transformers.
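The roughly tenfold speed-up reported above is an experimental result; the sketch below only illustrates one general reason a layer-wise quadratic objective can be cheap to evaluate repeatedly. The identity ‖X ΔWᵀ‖²_F = tr(ΔW H ΔWᵀ) with H = XᵀX is standard linear algebra and lets the loss be computed from a small precomputed matrix instead of a pass over all calibration tokens. Whether aespa uses exactly this form is an assumption here, and the function names `loss_direct` and `loss_from_gram` are hypothetical.

```python
import numpy as np

def loss_direct(x, delta_w):
    # ||X dW^T||_F^2 from the raw calibration activations X (n_tokens x d_in);
    # each call costs O(n_tokens * d_in * d_out).
    return float(np.sum((x @ delta_w.T) ** 2))

def loss_from_gram(h, delta_w):
    # Same value via the precomputed Gram matrix H = X^T X (d_in x d_in):
    # ||X dW^T||_F^2 = trace(dW H dW^T); each call costs O(d_in^2 * d_out),
    # independent of the number of calibration tokens.
    return float(np.sum((delta_w @ h) * delta_w))

rng = np.random.default_rng(0)
n_tokens, d_in, d_out = 4096, 64, 32
x = rng.standard_normal((n_tokens, d_in))
h = x.T @ x  # computed once, then reused for every candidate quantization

delta_w = 0.01 * rng.standard_normal((d_out, d_in))  # stand-in quantization error
print(loss_direct(x, delta_w), loss_from_gram(h, delta_w))
```

In an iterative search over quantized weights, only the Gram-matrix form needs to be evaluated per step, so the calibration set is touched once rather than on every update.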

Implications and Future Directions

The research implicitly suggests a new paradigm in post-training quantization, in which the focus shifts toward preserving the functional integrity of model components (in this case, the attention mechanism). It also invites further exploration of quantization strategies that are both computationally efficient and sensitive to the internal dynamics of complex models such as Transformers.

Furthermore, because aespa accounts for inter-layer dependencies, similar quantization strategies could be explored for other model architectures in which such dependencies are crucial for performance. Additionally, while this paper focuses on the attention module, future work could extend aespa's principles to entire Transformer blocks or other complex layers, potentially yielding more comprehensive quantization solutions.

In conclusion, the aespa algorithm presents an innovative approach to post-training quantization of hyper-scale Transformer models, balancing accuracy and efficiency. This work not only contributes a practical tool for deploying large AI models on resource-constrained devices but also opens new avenues for research in model optimization and deployment strategies.