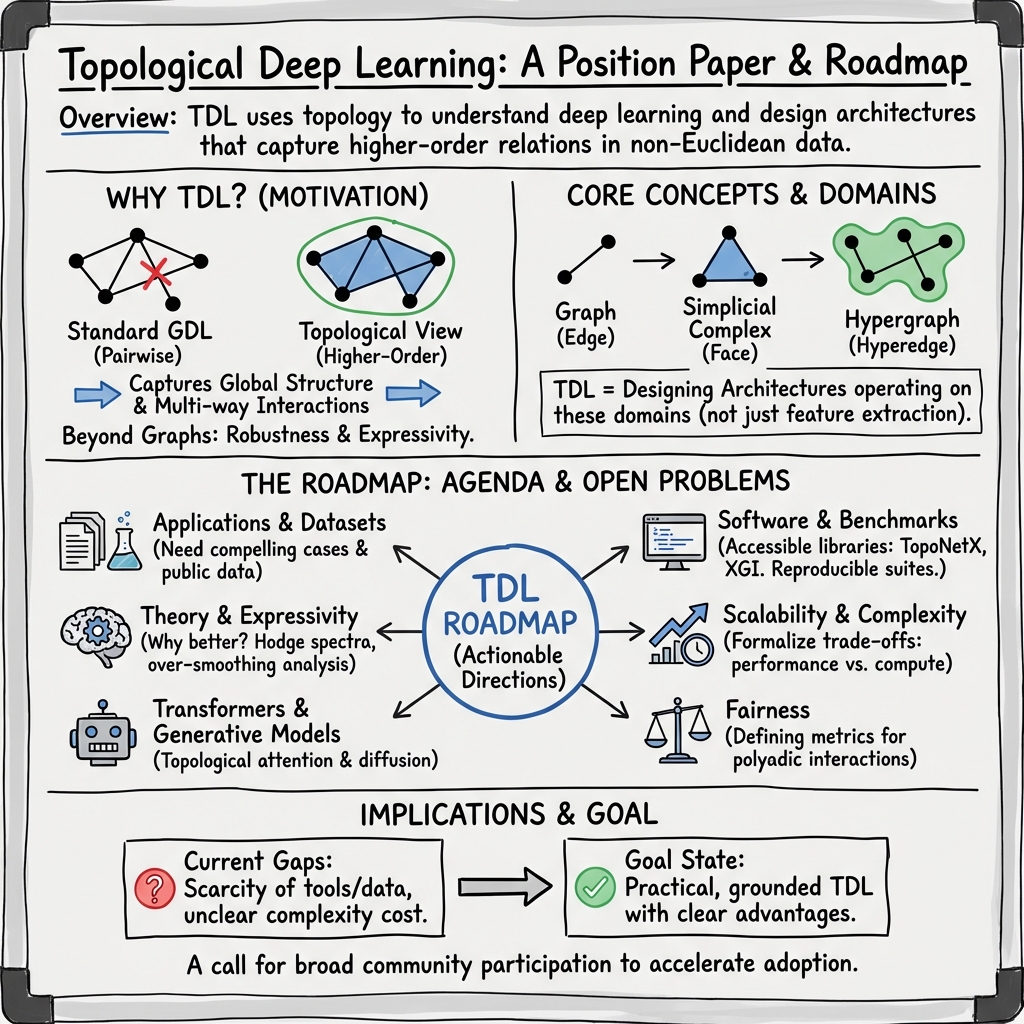

Position: Topological Deep Learning is the New Frontier for Relational Learning

Abstract: Topological deep learning (TDL) is a rapidly evolving field that uses topological features to understand and design deep learning models. This paper posits that TDL is the new frontier for relational learning. TDL may complement graph representation learning and geometric deep learning by incorporating topological concepts, and can thus provide a natural choice for various machine learning settings. To this end, this paper discusses open problems in TDL, ranging from practical benefits to theoretical foundations. For each problem, it outlines potential solutions and future research opportunities. At the same time, this paper serves as an invitation to the scientific community to actively participate in TDL research to unlock the potential of this emerging field.

