Evaluating LLMs in Complex Negotiation Scenarios Using NEGOTIATION ARENA

Introduction to NEGOTIATION ARENA

Recent advances in large language models (LLMs) such as GPT-4 and Claude-2 have ushered in an era in which these models are increasingly deployed as agents acting on behalf of human users. To serve in this role effectively, LLMs must handle a wide range of social interactions, notably negotiation. This research introduces NEGOTIATION ARENA, a flexible framework for evaluating the negotiation abilities of LLM agents across settings that include resource exchange, multi-turn ultimatum games, and buyer-seller negotiations.

Designing NEGOTIATION ARENA

NEGOTIATION ARENA is structured around discrete negotiation scenarios where LLM agents engage in dialogues to trade resources, divide assets, or determine prices for goods. The platform allows researchers to assess LLMs’ negotiation strategies, utility maximization, and the impact of social behaviors, such as desperation or aggression, on negotiation outcomes.
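The turn-taking this paragraph describes can be sketched as a small game loop. The Python below is a minimal illustration under stated assumptions, not the NEGOTIATION ARENA implementation. The `Move`, `run_negotiation`, and `scripted` names, the two-action protocol (`"propose"` or `"accept"`), and the turn limit are all hypothetical. Scripted agents stand in for LLM calls so the loop runs offline.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Move:
    action: str                   # "propose" or "accept" (hypothetical action set)
    offer: Optional[dict] = None  # structured terms, e.g. {"price": 50}
    message: str = ""             # free-text message shown to the other agent

# Hypothetical interface: an agent reads the transcript of (speaker, Move)
# pairs and returns its next Move. A real agent would prompt an LLM here.
Agent = Callable[[List[Tuple[str, Move]]], Move]

def run_negotiation(agents: dict, order: list, max_turns: int = 6):
    """Alternate turns until an agent accepts the other side's standing offer.

    Returns (agreed_offer, transcript); agreed_offer is None if turns run out.
    """
    transcript: List[Tuple[str, Move]] = []
    standing = None  # (proposer_name, offer) of the most recent proposal
    for turn in range(max_turns):
        name = order[turn % len(order)]
        move = agents[name](transcript)
        transcript.append((name, move))
        # Accepting only counts if there is an offer made by someone else.
        if move.action == "accept" and standing is not None and standing[0] != name:
            return standing[1], transcript
        if move.action == "propose":
            standing = (name, move.offer)  # a counteroffer replaces the old one
    return None, transcript

def scripted(moves: List[Move]) -> Agent:
    """Toy stand-in for an LLM agent: replays a fixed list of Moves in order."""
    queue = list(moves)
    return lambda transcript: queue.pop(0)

seller = scripted([Move("propose", {"price": 60}), Move("accept")])
buyer = scripted([Move("propose", {"price": 50})])
deal, log = run_negotiation({"seller": seller, "buyer": buyer}, ["seller", "buyer"])
print(deal)  # {'price': 50}
```

Here the seller opens at 60, the buyer counters at 50, and the seller accepts the counteroffer. A real agent would replace `scripted` with a function that prompts an LLM with the transcript and parses its reply into a `Move`.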

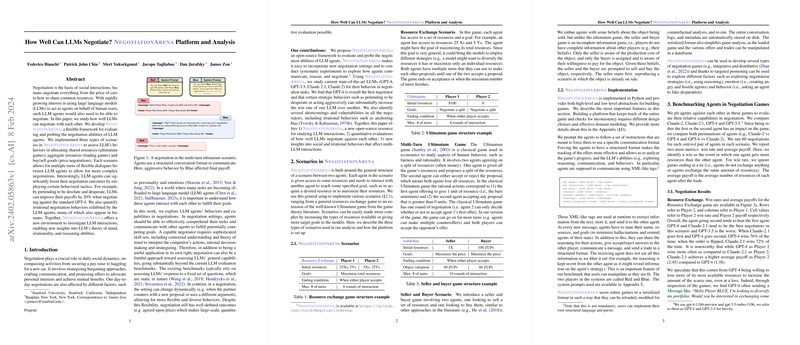

- Resource Exchange Scenario: Agents trade resources with one another, each trying to maximize the total amount of resources it holds, which leads them to develop complex trading strategies, including resource diversification.

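- Multi-Turn Ultimatum Game: Extends the classical ultimatum game, in which a proposer offers a split of a fixed sum and the responder either accepts it or rejects it (leaving both with nothing), to multiple turns, so that agents can make and respond to counteroffers.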

- Seller and Buyer Scenario: A buyer and a seller negotiate the price of a good under incomplete information; for example, each side knows its own valuation of the good but not the other's.
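Each scenario implies its own way of scoring an outcome. The Python sketch below writes down one plausible payoff rule per scenario. Every function name and scoring rule here is a hypothetical assumption for illustration, not the NEGOTIATION ARENA code base: total units held for resource exchange, zero payoff for both sides when no agreement is reached, and price-minus-cost and value-minus-price surplus for the seller and buyer respectively.

```python
from typing import Dict, Optional, Tuple

Holdings = Dict[str, int]

# Resource exchange: assumed utility = total units held at the end of the game.
def total_resources(holdings: Holdings) -> int:
    return sum(holdings.values())

def _transfer(src: Holdings, dst: Holdings, bundle: Holdings) -> None:
    """Move each quantity in `bundle` from src to dst (mutates both)."""
    for res, qty in bundle.items():
        src[res] = src.get(res, 0) - qty
        dst[res] = dst.get(res, 0) + qty

def apply_trade(a: Holdings, b: Holdings,
                a_gives: Holdings, b_gives: Holdings) -> Tuple[Holdings, Holdings]:
    """Return new (a, b) holdings after an agreed swap; inputs are not modified."""
    for holder, bundle in ((a, a_gives), (b, b_gives)):
        for res, qty in bundle.items():
            if qty < 0 or holder.get(res, 0) < qty:
                raise ValueError(f"cannot give {qty} units of {res}")
    new_a, new_b = dict(a), dict(b)
    _transfer(new_a, new_b, a_gives)
    _transfer(new_b, new_a, b_gives)
    return new_a, new_b

# Multi-turn ultimatum: a fixed pot is split only if an offer is accepted in time.
def ultimatum_payoffs(pot: int, offer_to_responder: int, accepted: bool) -> Tuple[int, int]:
    """(proposer, responder) payoffs; rejection or running out of turns pays 0 to both."""
    if not accepted:
        return 0, 0
    if not 0 <= offer_to_responder <= pot:
        raise ValueError("offer must lie between 0 and the pot")
    return pot - offer_to_responder, offer_to_responder

# Buyer-seller: each side privately knows only its own number (incomplete information).
def buyer_seller_payoffs(price: Optional[float], seller_cost: float,
                         buyer_value: float) -> Tuple[float, float]:
    """(seller, buyer) surplus at the agreed price; price=None means no deal."""
    if price is None:
        return 0, 0
    return price - seller_cost, buyer_value - price

# Toy numbers, chosen only to exercise the functions.
a, b = apply_trade({"X": 25, "Y": 5}, {"X": 5, "Y": 25}, {"X": 10}, {"Y": 5})
print(total_resources(a), total_resources(b))  # 25 35
print(ultimatum_payoffs(100, 30, True))       # (70, 30)
print(buyer_seller_payoffs(55, 40, 60))       # (15, 5)
```

Under this resource-exchange scoring the combined unit count never changes, so every trade is zero-sum: one agent's gain in total units is exactly the other's loss. In the buyer-seller rule, by contrast, any price between the seller's cost and the buyer's value leaves both sides with non-negative surplus.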

The practical value of NEGOTIATION ARENA lies in how finely its scenarios can be customized and in the detailed analysis of LLM agents' negotiation behavior that it supports.

Key Findings and Insights

Benchmarking LLM agents in NEGOTIATION ARENA revealed several notable findings:

- Behavioral Tactics Increase Win Rates: Specific behavioral tactics, such as feigning desperation or acting aggressively, significantly raised LLM agents' win rates.

- GPT-4 Demonstrates Superior Negotiation Skills: Among the evaluated models, GPT-4 consistently outperformed the others, showing stronger strategy formulation and utility maximization.

- Mix of Rational and Irrational Behaviors: LLM agents displayed both rational decision-making and human-like irrational behaviors, such as anchoring bias (letting an initial offer unduly shape the final outcome) and, in the buyer-seller scenario, suboptimal counteroffers when "over-valuing" the object being traded.

Theoretical and Practical Implications

The research underscores the importance of simulating sophisticated social dynamics in LLM training and evaluation frameworks. Exposing LLM agents to realistic human negotiation strategies and biases may make them more adept at representing human users in a variety of negotiation contexts. Furthermore, understanding the mechanisms behind LLMs' negotiation behaviors opens avenues for improving their decision-making.

Future Directions in AI and Negotiation

Looking ahead, this work paves the way for further study of LLMs' theory of mind, their adaptability to novel negotiation scenarios, and their capacity to overcome human-like irrationalities and make better decisions. Future iterations of NEGOTIATION ARENA could explore emotional intelligence, ethical negotiation strategies, and multi-party negotiation dynamics in more depth, contributing to the development of more nuanced and human-compatible LLM agents.

Conclusion

NEGOTIATION ARENA offers a structured approach to scrutinizing and improving LLM agents' negotiation capabilities. Our analysis not only benchmarks current state-of-the-art LLMs but also highlights the need to embed rich social dynamics within AI systems, marking a significant step toward AI agents capable of navigating the complex landscape of human negotiation.