Graph-enhanced LLMs in Asynchronous Plan Reasoning

The paper "Graph-enhanced LLMs in Asynchronous Plan Reasoning" by Fangru Lin et al. examines how well large language models (LLMs) such as GPT-4 and LLaMA-2 handle complex asynchronous planning tasks. The paper is notable for documenting both the strengths and the limitations of current LLMs in reproducing human-like planning, which requires optimizing over sequential and parallel steps at the same time.

Overview and Significance

Planning is an intrinsic aspect of human intelligence and a critical component for deploying autonomous agents in real-world scenarios. The investigation centers on asynchronous plan reasoning: working out the shortest time in which a plan can be completed, which requires deciding which steps must run in sequence and which can run in parallel. The paper introduces a sizable benchmark, Asynchronous WikiHow (AsyncHow), comprising 1.6K instances derived from existing plan data sources such as ProScript and WikiHow.
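Under the infinite-resource assumption that the paper's future work aims to relax, the shortest completion time of a plan equals the length of the longest (critical) path through the step-dependency DAG, with each step weighted by its duration. A minimal Python sketch, assuming a plan is given as a dict of step durations plus a dict mapping each step to its prerequisites (a representation chosen here for illustration, not the benchmark's actual format):

```python
from graphlib import TopologicalSorter


def min_completion_time(durations: dict[str, float],
                        deps: dict[str, set[str]]) -> float:
    """Shortest time to finish every step, assuming unlimited parallel resources.

    durations: step -> duration
    deps: step -> set of steps that must finish before it can start
    """
    # Include steps with no prerequisites so that every step is visited.
    graph = {step: deps.get(step, set()) for step in durations}
    finish: dict[str, float] = {}
    # static_order() yields a step only after all of its prerequisites and
    # raises graphlib.CycleError if the dependencies contain a cycle.
    for step in TopologicalSorter(graph).static_order():
        # A step starts as soon as its last prerequisite has finished.
        start = max((finish[p] for p in graph.get(step, ())), default=0.0)
        finish[step] = start + durations[step]
    return max(finish.values(), default=0.0)


if __name__ == "__main__":
    # Toy plan (durations in minutes), invented for illustration.
    durations = {"boil water": 10, "get cup": 1, "add teabag": 1,
                 "pour water": 1, "steep": 5}
    deps = {"add teabag": {"get cup"},
            "pour water": {"boil water", "add teabag"},
            "steep": {"pour water"}}
    print(min_completion_time(durations, deps))  # 16.0
    print(sum(durations.values()))               # 18 if done strictly in sequence
```

The toy plan finishes in 16 minutes rather than 18 because boiling the water overlaps with fetching the cup and adding the teabag; only the longest chain of dependent steps determines the total.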

Key Findings

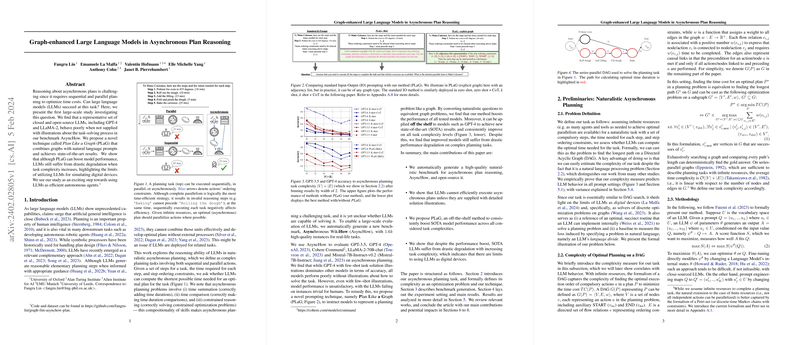

The central finding is that standard LLMs perform poorly at asynchronous planning when they are not given illustrations of the task-solving process; even state-of-the-art models such as GPT-4 underperform without such guidance. To address this, the authors propose Plan Like a Graph (PLaG), a technique that combines graph-based representations with natural language prompts. By recasting naturalistic planning problems as graph-based reasoning tasks, PLaG substantially improves model performance.
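PLaG gives the model a graph view of the task in addition to the natural language description. The sketch below shows one way to serialize a plan DAG as text and attach it to a question; the node/edge format, the `graph_to_text` and `plag_prompt` helpers, and the prompt wording are all assumptions made for illustration, not the paper's templates.

```python
def graph_to_text(durations: dict[str, float],
                  deps: dict[str, set[str]]) -> str:
    """Render a plan DAG as a plain-text node list plus edge list."""
    lines = ["Nodes (step: duration in minutes):"]
    lines += [f"- {step}: {d}" for step, d in durations.items()]
    lines.append("Edges (A -> B means A must finish before B can start):")
    for step, preds in deps.items():
        # Sort predecessors so that the prompt text is deterministic.
        lines += [f"- {p} -> {step}" for p in sorted(preds)]
    return "\n".join(lines)


def plag_prompt(question: str, durations: dict[str, float],
                deps: dict[str, set[str]]) -> str:
    """Append a graph rendering of the plan to the natural language question."""
    return (f"{question}\n\n"
            "The plan can be represented as the following directed acyclic graph.\n"
            f"{graph_to_text(durations, deps)}\n\n"
            "Use the graph to work out the answer step by step.")


if __name__ == "__main__":
    question = ("To make tea you boil water (10 min), get a cup (1 min), add a "
                "teabag (1 min), pour the water (1 min) and let it steep (5 min). "
                "Assuming unlimited resources, what is the shortest time to finish?")
    durations = {"boil water": 10, "get cup": 1, "add teabag": 1,
                 "pour water": 1, "steep": 5}
    deps = {"add teabag": {"get cup"},
            "pour water": {"boil water", "add teabag"},
            "steep": {"pour water"}}
    print(plag_prompt(question, durations, deps))
```

An explicit edge list is used here because it states each dependency exactly once; other serializations (adjacency lists, prose descriptions of edges) would work equally well for this sketch.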

Methodology

The methodology centers on constructing the AsyncHow benchmark to evaluate LLMs' planning capabilities. The benchmark was curated in several steps:

- Preprocessing to collect high-quality plans.

- Annotating time durations using GPT-3.5.

- Annotating dependencies between steps using GPT-4.

- Generating natural language prompts and equivalent Directed Acyclic Graphs (DAGs) representing the tasks.
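The curation steps above end with each task paired with a DAG. A minimal sketch of what such an instance record might look like, with a check that the annotated durations are positive, that dependencies reference known steps, and that they contain no cycle. The `PlanInstance` class and its field names are hypothetical, not the benchmark's released schema.

```python
from dataclasses import dataclass, field
from graphlib import CycleError, TopologicalSorter


@dataclass
class PlanInstance:
    """Hypothetical record for one planning task: durations plus dependencies."""
    goal: str
    durations: dict[str, float]                               # step -> duration
    deps: dict[str, set[str]] = field(default_factory=dict)   # step -> prerequisites

    def validate(self) -> None:
        """Raise ValueError unless the annotation forms a well-defined DAG."""
        if any(d <= 0 for d in self.durations.values()):
            raise ValueError("every step needs a positive duration")
        mentioned = set(self.deps) | {p for preds in self.deps.values() for p in preds}
        unknown = mentioned - set(self.durations)
        if unknown:
            raise ValueError(f"dependencies mention unknown steps: {sorted(unknown)}")
        try:
            # prepare() raises CycleError if the dependency graph has a cycle.
            TopologicalSorter(self.deps).prepare()
        except CycleError as err:
            # err.args[1] holds the list of nodes on the detected cycle.
            raise ValueError(f"dependencies contain a cycle: {err.args[1]}") from err


if __name__ == "__main__":
    tea = PlanInstance("make tea",
                       {"boil water": 10, "pour water": 1, "steep": 5},
                       {"pour water": {"boil water"}, "steep": {"pour water"}})
    tea.validate()  # passes silently
    loop = PlanInstance("broken", {"a": 1, "b": 1}, {"a": {"b"}, "b": {"a"}})
    try:
        loop.validate()
    except ValueError as exc:
        print(exc)
```

Checking acyclicity matters because the minimum-time question is only well defined on a DAG: a cyclic annotation would mean some step has to finish before it can start.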

The experiments use PLaG in two forms: explicit graph prompting, in which the model is given a pre-constructed graph of the task, and Build a Graph (BaG), in which the model is instructed to construct a graph itself and then reason over it.
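The two variants differ only in whether the graph is supplied or left for the model to build. The helper below makes that contrast concrete; `PromptMode`, `build_prompt`, and the instruction wording are hypothetical illustrations, not the prompts used in the experiments.

```python
from enum import Enum
from typing import Optional


class PromptMode(Enum):
    EXPLICIT_GRAPH = "explicit"   # a pre-constructed graph is included in the prompt
    BUILD_A_GRAPH = "bag"         # the model is asked to build the graph itself


def build_prompt(question: str, mode: PromptMode,
                 graph_text: Optional[str] = None) -> str:
    """Assemble a prompt for one of the two (hypothetically worded) PLaG variants."""
    if mode is PromptMode.EXPLICIT_GRAPH:
        if graph_text is None:
            raise ValueError("explicit graph prompting needs a pre-constructed graph")
        return (f"{question}\n\nHere is a graph of the plan:\n{graph_text}\n\n"
                "Reason over this graph to answer the question.")
    # BaG: no graph is given; instruct the model to construct one before answering.
    return (f"{question}\n\nFirst construct a graph whose nodes are the steps and "
            "whose edges are their dependencies, then reason over it to answer.")


if __name__ == "__main__":
    q = "What is the shortest time to make tea?"
    print(build_prompt(q, PromptMode.BUILD_A_GRAPH))
    print(build_prompt(q, PromptMode.EXPLICIT_GRAPH, "boil water -> pour water"))
```

The explicit variant needs a graph produced outside the model (for example from the benchmark's annotations), whereas BaG only needs the natural language question.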

Results

The experimental results reveal several critical insights:

- Without the PLaG method, models including GPT-4 show poor performance, particularly in zero-shot settings.

- The introduction of PLaG, both in explicit graph and BaG formats, yields substantial performance improvements across all tested models, including those that were initially less capable.

- Even with PLaG, performance still degrades markedly as task complexity increases, pointing to limitations of LLMs in handling highly complex planning tasks.

Across all models and settings, GPT-4 in the BaG setting achieved the highest accuracy. This suggests that prompting a model to construct its own graph representation of a task can further improve performance.
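One way to observe the degradation with task complexity described in these results is to score exact-match answers separately for plans of different sizes. A minimal sketch, assuming each evaluation record is a (number of steps, predicted time, gold time) tuple and using step count as a stand-in for complexity; the `accuracy_by_size` helper and its bucketing scheme are assumptions, and the toy records are invented and say nothing about the paper's numbers.

```python
import math
from collections import defaultdict


def accuracy_by_size(records: list[tuple[int, float, float]],
                     bucket_width: int = 5) -> dict[str, float]:
    """Exact-match accuracy of predicted completion times, grouped by plan size."""
    hits: dict[int, int] = defaultdict(int)
    totals: dict[int, int] = defaultdict(int)
    for n_steps, predicted, gold in records:
        bucket = (n_steps - 1) // bucket_width    # e.g. 1-5 steps -> bucket 0
        totals[bucket] += 1
        hits[bucket] += int(math.isclose(predicted, gold))
    return {f"{b * bucket_width + 1}-{(b + 1) * bucket_width}": hits[b] / totals[b]
            for b in sorted(totals)}


if __name__ == "__main__":
    toy = [(4, 16, 16), (5, 10, 12), (7, 20, 20)]  # made-up records
    print(accuracy_by_size(toy))  # {'1-5': 0.5, '6-10': 1.0}
```

`math.isclose` is used instead of `==` so that answers parsed as floats (for example `16.0` versus `16`) still count as matches.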

Implications

The implications of this research are both practical and theoretical:

- Practical: The findings offer a pathway toward improving current LLMs for applications that require complex planning, such as autonomous agents and robotics. PLaG provides a practical, prompt-based way to bring structured representations into LLM reasoning and improve accuracy on planning tasks.

- Theoretical: The paper highlights the limitations of current LLMs in reasoning about complex, constrained optimization problems. The results suggest that while LLMs perform well on simpler tasks, their ability to manage intricate dependencies and optimizations is limited. This motivates further exploration of hybrid methods that combine symbolic reasoning with LLM capabilities.
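A simple instance of the hybrid idea is to let the LLM translate a plan into a graph and leave the arithmetic to a symbolic solver. In the sketch below no model is called: `model_output` stands in for a response assumed to follow a made-up `NODE name | duration` / `EDGE a -> b` line format. The hypothetical `parse_graph` reads that format, and `solve` computes the critical-path length exactly.

```python
from graphlib import TopologicalSorter


def parse_graph(text: str) -> tuple[dict[str, float], dict[str, set[str]]]:
    """Parse hypothetical 'NODE name | duration' and 'EDGE a -> b' lines."""
    durations: dict[str, float] = {}
    deps: dict[str, set[str]] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("NODE "):
            name, dur = line[len("NODE "):].rsplit("|", 1)
            durations[name.strip()] = float(dur)
        elif line.startswith("EDGE "):
            src, dst = line[len("EDGE "):].split("->", 1)
            deps.setdefault(dst.strip(), set()).add(src.strip())
        # Any other line (e.g. the model's commentary) is ignored.
    return durations, deps


def solve(durations: dict[str, float], deps: dict[str, set[str]]) -> float:
    """Critical-path length: shortest completion time with unlimited parallelism."""
    graph = {step: deps.get(step, set()) for step in durations}
    finish: dict[str, float] = {}
    for step in TopologicalSorter(graph).static_order():
        start = max((finish[p] for p in graph.get(step, ())), default=0.0)
        finish[step] = start + durations[step]
    return max(finish.values(), default=0.0)


if __name__ == "__main__":
    model_output = """Here is the graph:
NODE boil water | 10
NODE get cup | 1
NODE add teabag | 1
NODE pour water | 1
NODE steep | 5
EDGE get cup -> add teabag
EDGE boil water -> pour water
EDGE add teabag -> pour water
EDGE pour water -> steep"""
    print(solve(*parse_graph(model_output)))  # 16.0
```

In this split the model only has to get the structure right; once the graph is extracted, the timing answer is computed deterministically.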

Future Directions

Future research could focus on several avenues:

- Exploring extensions of PLaG to handle finite resource constraints, moving beyond the assumption of infinite resources.

- Developing more sophisticated graph-based prompting techniques that dynamically adapt to varying task complexities.

- Investigating the integration of multi-modal data inputs, combining textual descriptions with visual representations to further enhance planning performance.

- Examining the scalability of PLaG for even larger and more complex tasks, potentially involving thousands of nodes and edges.
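To see how a finite-resource extension changes the problem: once only a fixed number of steps can run at the same time, the critical path becomes just a lower bound, and finding an optimal schedule is NP-hard in general. The sketch below uses greedy list scheduling, a standard heuristic swapped in here for illustration (not something the paper proposes). It assumes the same durations/prerequisites representation and a hypothetical `workers` limit, and its result is not guaranteed to be optimal.

```python
import heapq
from collections import defaultdict


def list_schedule(durations: dict[str, float], deps: dict[str, set[str]],
                  workers: int) -> float:
    """Greedy makespan when at most `workers` steps may run at once (heuristic)."""
    if workers < 1:
        raise ValueError("need at least one worker")
    remaining = {s: len(deps.get(s, ())) for s in durations}  # unfinished prerequisites
    children: dict[str, list[str]] = defaultdict(list)
    for step, preds in deps.items():
        for p in preds:
            children[p].append(step)
    ready = sorted(s for s, n in remaining.items() if n == 0)  # deterministic start
    running: list[tuple[float, str]] = []  # min-heap of (finish time, step)
    now, done = 0.0, 0
    while ready or running:
        # Start as many ready steps as there are idle workers.
        while ready and len(running) < workers:
            step = ready.pop(0)
            heapq.heappush(running, (now + durations[step], step))
        # Jump to the next completion and release any successors it unblocks.
        now, step = heapq.heappop(running)
        done += 1
        for child in children[step]:
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)
    if done != len(durations):
        raise ValueError("dependencies contain a cycle")
    return now


if __name__ == "__main__":
    durations = {"boil water": 10, "get cup": 1, "add teabag": 1,
                 "pour water": 1, "steep": 5}
    deps = {"add teabag": {"get cup"},
            "pour water": {"boil water", "add teabag"},
            "steep": {"pour water"}}
    print(list_schedule(durations, deps, workers=1))  # 18.0
    print(list_schedule(durations, deps, workers=2))  # 16.0
```

With one worker the toy plan falls back to the fully sequential 18 minutes; with two it already reaches the 16-minute critical-path bound, which illustrates how the answer now depends on the resource limit as well as on the dependencies.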

In summary, the paper by Fangru Lin et al. makes a significant contribution to understanding and improving the planning capabilities of LLMs. While graph-based reasoning techniques like PLaG mark a promising advance, the paper also shows which challenges remain and identifies areas where future research is needed for LLMs to succeed at autonomous planning and reasoning tasks.