Introduction

Large language models (LLMs) such as GPT-4 and Gemini have shown their prowess across a breadth of tasks, from casual conversation to complex coding problems. To be viable in everyday applications, however, these models must be aligned with human values and preferences, a process termed "LM alignment". Traditional reinforcement learning techniques for this task are notoriously complex and resource-intensive. The paper "LiPO: Listwise Preference Optimization through Learning-to-Rank" proposes an alternative that treats LM alignment as a Learning-to-Rank (LTR) problem, aiming to bring the efficiency of ranking-based methods to the optimization of LLMs from human feedback.

The LiPO Framework

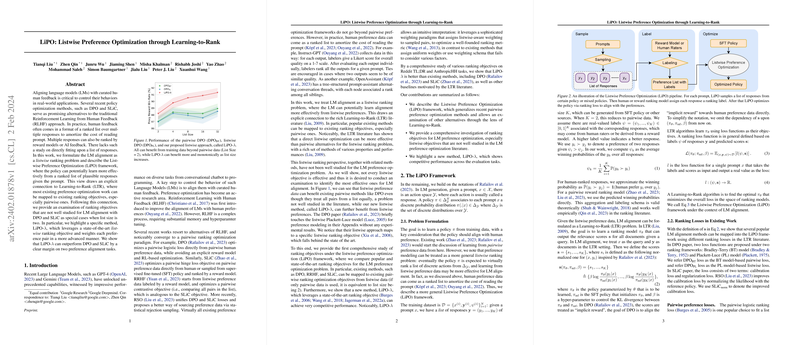

The authors observe that prevalent preference optimization methods rarely go beyond pairwise comparisons, which may be inadequate given that human feedback often takes the form of a ranked list of responses. In response, they devise the Listwise Preference Optimization (LiPO) framework, which poses LM alignment as a listwise ranking problem. The framework not only generalizes existing methods but also allows for the exploration of further listwise objectives.

Under LiPO, previous alignment methods can be understood as special cases of ranking optimization. For instance, earlier methods such as DPO and SLiC reduce to pairwise ranking objectives, whereas LiPO admits listwise objectives that could better capture the structure of human rankings. Particularly noteworthy is the introduction of LiPO-λ, a new method built on a theoretically grounded listwise ranking objective (LambdaLoss), which improved on its counterparts across the evaluation tasks.
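To make the "special case" reading concrete, here is a minimal sketch of the two pairwise objectives written as ranking losses over per-response scores. It assumes the score for a response is the scaled log-ratio between the policy and a reference model, as in DPO. The function names, the `beta` default, and the hinge margin of 1 are illustrative choices, not values taken from the paper.

```python
import numpy as np

def policy_scores(logp_policy, logp_ref, beta=0.1):
    """Score each response as beta * (log pi_theta - log pi_ref).

    The inputs are sequence log-probabilities; beta is a hypothetical default.
    """
    logp_policy = np.asarray(logp_policy, dtype=float)
    logp_ref = np.asarray(logp_ref, dtype=float)
    return beta * (logp_policy - logp_ref)

def pairwise_logistic_loss(s_win, s_lose):
    """DPO-style loss: -log(sigmoid(s_win - s_lose)), computed stably."""
    return np.logaddexp(0.0, -(s_win - s_lose))

def pairwise_hinge_loss(s_win, s_lose, margin=1.0):
    """SLiC-style loss: penalize pairs whose score gap is below the margin."""
    return np.maximum(0.0, margin - (s_win - s_lose))

# Two responses to one prompt; the first is the preferred one.
scores = policy_scores(logp_policy=[-12.0, -15.0], logp_ref=[-13.0, -14.0], beta=0.5)
logistic = pairwise_logistic_loss(scores[0], scores[1])
hinge = pairwise_hinge_loss(scores[0], scores[1])
```

Both losses depend only on the score difference within a single pair, which is what makes them pairwise ranking objectives in this view.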

Advantages of Listwise Ranking

LiPO's advantage lies in its listwise perspective. Where traditional methods consider response pairs in isolation, LiPO-λ learns from entire lists of responses, arguably a more holistic approach. LiPO-λ also incorporates label values into its optimization, a detail that earlier methods ignored. This lets it account for graded differences in quality rather than only which response of a pair is preferred. Empirically, in experiments on the Reddit TL;DR and AnthropicHH datasets, LiPO-λ outperformed existing methods such as DPO and SLiC by clear margins, and its benefits grew as the size of the response lists increased.
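The role of label values can be illustrated with a LambdaLoss-style objective. This is a sketch under stated assumptions, not the authors' implementation: each pair's logistic loss is weighted by how far apart the two labels are (through an exponential gain) and by how far apart the two responses currently sit in the score-induced ranking (through a log2 rank discount, the usual DCG convention). The function name and these specific gain and discount choices are assumptions made for illustration.

```python
import numpy as np

def lambda_weighted_loss(scores, labels):
    """Listwise loss over one prompt's responses (illustrative sketch).

    scores: model scores, one per response (higher = ranked earlier).
    labels: graded quality labels, one per response (higher = better).
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=float)
    n = len(scores)

    # Rank position of each response under the current scores (1 = top).
    order = np.argsort(-scores, kind="stable")
    ranks = np.empty(n, dtype=float)
    ranks[order] = np.arange(1, n + 1)

    gains = 2.0 ** labels - 1.0               # assumed exponential gain
    inv_discount = 1.0 / np.log2(1.0 + ranks)  # assumed DCG-style discount

    # Pair weight: |gain difference| * |inverse-discount difference|.
    delta = (np.abs(gains[:, None] - gains[None, :])
             * np.abs(inv_discount[:, None] - inv_discount[None, :]))

    # Logistic loss on the score difference for every ordered pair (i, j).
    pair_loss = np.logaddexp(0.0, -(scores[:, None] - scores[None, :]))

    # Only pairs where response i has a strictly higher label than j count.
    mask = labels[:, None] > labels[None, :]
    return float(np.sum(delta * pair_loss * mask))

labels = [1.0, 0.5, 0.0]
loss_good = lambda_weighted_loss([2.0, 1.0, 0.0], labels)  # scores agree with labels
loss_bad = lambda_weighted_loss([0.0, 1.0, 2.0], labels)   # scores reverse the labels
```

Under these assumptions, pairs with a large label gap and a large rank gap dominate the loss, while pairs with equal labels contribute nothing. A purely pairwise objective would instead treat every preferred-versus-rejected pair identically, regardless of how much better one response is.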

Evaluation and Applications

The evaluations use three distinct approaches (Proxy Reward Model, AutoSxS, and Human Evaluation), all of which affirm LiPO-λ's strengths. The Proxy Reward Model, for instance, rated LiPO-λ's generated responses more favorably relative to the SFT target than those of the other methods it was compared against. Moreover, the method's scalability to larger LM policies suggests wider applicability across natural language processing tasks.

Concluding Remarks

Listwise Preference Optimization (LiPO) brings a nuanced approach to aligning LMs with human preferences. Its use of Learning-to-Rank techniques both simplifies and improves the alignment process. The superior results of LiPO-λ support its potential as a powerful tool for refining LLMs for real-world deployment, ushering in a new, more efficient phase for model alignment techniques. Future work could range from in-depth theoretical analysis of why LambdaLoss is effective for LM alignment to online learning strategies that further reduce distribution shift.