LLMs for Time Series: An Academic Overview

The paper "LLMs for Time Series: A Survey" presents a systematic review of methodologies and techniques for applying large language models (LLMs) to time series analysis. This emerging field studies how to adapt LLMs, which were designed for natural language processing (NLP), to process and interpret numerical time series. Because text and numerical time series differ fundamentally, the paper introduces a comprehensive taxonomy of approaches for transferring LLM capabilities to time series applications in domains such as climate, IoT, healthcare, traffic, audio, and finance.

Core Methodologies

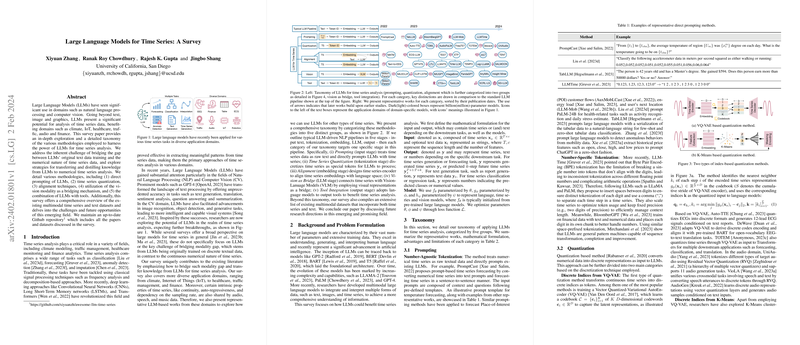

The survey organizes the primary methodologies into five categories, each representing a distinct way of leveraging LLMs for time series:

- Direct Prompting: This approach treats time series data as text and feeds it directly to an LLM with little or no preprocessing. While straightforward, it risks losing essential numerical semantics, particularly for high-precision data, because the tokenizer may split numbers into inconsistent subword pieces.

- Time Series Quantization: Quantization methods convert time series into discrete tokens or categories, bridging the modality gap between continuous signals and the LLM's discrete vocabulary. Techniques explored include VQ-VAE and K-Means clustering. This approach is flexible but may require complex multi-stage training.

- Alignment Techniques: These methods encode time series into representations compatible with the LLM's semantic space. Strategies include similarity matching, in which time series and text embeddings are aligned through contrastive or other loss functions, and feeding time series embeddings directly into an LLM backbone. While these methods integrate seamlessly with existing LLM infrastructure, they require carefully designed alignment mechanisms.

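- Vision as a Bridge: This strategy leverages vision-language models by converting time series into visual representations. Such representations are semantically closer to text than raw numbers are, because these models are already trained to align images with language. The approach is particularly effective when time series can be naturally rendered as images or are paired with visual or other multimedia data.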

- Tool Integration: Here the LLM acts as an intermediary that generates auxiliary tools, such as code or API calls, to carry out time series tasks rather than processing the data itself. This category highlights the potential of indirect, tool-based LLM applications, though such pipelines pose optimization challenges.
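To make Direct Prompting concrete, here is a minimal sketch of serializing a series into a prompt string and parsing numbers back out. The format is an illustrative assumption in the spirit of published direct-prompting schemes, not the survey's prescribed method. It uses a fixed decimal precision, drops the decimal point, separates digits with spaces, and separates time steps with commas. `serialize` and `deserialize` are hypothetical helper names.

```python
def serialize(series, precision=2):
    """Turn a numeric series into a prompt string (hypothetical format).

    Each value is rounded to `precision` decimals, the decimal point is
    dropped, and digits are space-separated so a tokenizer is less likely
    to merge them into arbitrary multi-digit chunks.
    """
    tokens = []
    for x in series:
        sign = "-" if x < 0 else ""
        digits = f"{abs(x):.{precision}f}".replace(".", "")
        tokens.append(sign + " ".join(digits))
    return " , ".join(tokens)


def deserialize(text, precision=2):
    """Invert `serialize`, e.g. to parse numbers an LLM generated."""
    values = []
    for chunk in text.split(","):
        chunk = chunk.strip().replace(" ", "")
        if not chunk:
            continue  # tolerate a trailing comma
        values.append(int(chunk) / 10 ** precision)
    return values


# Example prompt built from a short history (wording is illustrative).
prompt = f"Continue the sequence: {serialize([1.5, -0.25, 12.0])} ,"
```

The round trip `deserialize(serialize([3.14159]))` yields `[3.14]`. This illustrates the precision loss noted in the Direct Prompting item: whatever is rounded away before prompting is invisible to the model.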
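For the similarity-matching flavor of Alignment Techniques, one common contrastive objective is an InfoNCE-style loss, as popularized by CLIP. This sketch assumes the paired embeddings already exist. In practice they might come from a trainable time series encoder and an LLM's text embeddings; here they are plain Python lists. `info_nce` is a hypothetical name.

```python
import math


def cosine(a, b):
    """Cosine similarity of two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def info_nce(ts_emb, text_emb, temperature=0.1):
    """Average InfoNCE loss that pulls ts_emb[i] toward text_emb[i]
    and pushes it away from every other text_emb[j] in the batch."""
    n = len(ts_emb)
    total = 0.0
    for i in range(n):
        logits = [cosine(ts_emb[i], text_emb[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]  # -log softmax probability of the true pair
    return total / n


# Toy embeddings standing in for encoder and LLM outputs (hypothetical values).
ts = [[1.0, 0.0], [0.0, 1.0]]
text_matched = [[0.9, 0.1], [0.1, 0.9]]
text_swapped = [[0.1, 0.9], [0.9, 0.1]]
```

Matched pairs produce a lower loss than mismatched ones. Minimizing the loss during training therefore pulls each time series embedding toward its paired text embedding in the LLM's semantic space.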
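One way to realize Vision as a Bridge is to encode the series as an image. This sketch uses the Gramian Angular Summation Field, a known time-series-to-image encoding chosen here purely for illustration. Rendering an ordinary line plot is another option. The resulting matrix would then be scaled to pixel intensities and passed to a vision-language model; that step is not shown.

```python
import math


def gramian_angular_field(series):
    """Encode a 1-D series as an n x n matrix (Gramian Angular Summation Field)."""
    lo, hi = min(series), max(series)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant series
    # Rescale to [-1, 1] so each value is a valid cosine.
    scaled = [2 * (x - lo) / span - 1 for x in series]
    # Clamp against floating-point drift before taking arccos.
    phi = [math.acos(max(-1.0, min(1.0, x))) for x in scaled]
    # Entry (i, j) is cos(phi_i + phi_j).
    return [[math.cos(a + b) for b in phi] for a in phi]


image = gramian_angular_field([0, 1, 2, 3])
# Map [-1, 1] to 8-bit grayscale, a typical (assumed) input format for an image model.
pixels = [[round((v + 1) * 127.5) for v in row] for row in image]
```

Because each entry is `cos(phi_i + phi_j)`, the image is symmetric and encodes a relation between every pair of time steps. These are the kind of 2-D patterns an image model can pick up.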
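Tool Integration can be illustrated with a small dispatcher: the LLM emits a structured tool call, and ordinary code executes it on the data. The JSON schema, the tool names, and the hard-coded "LLM output" strings are all hypothetical. A real system would obtain the call from a model and would typically restrict it to a whitelist, as done here with `TOOLS`.

```python
import json


def moving_average(series, window):
    """Trailing moving average; the first window-1 points produce no output."""
    return [sum(series[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(series))]


def linear_trend(series):
    """Least-squares slope of the series against its index (needs >= 2 points)."""
    n = len(series)
    mean_t = (n - 1) / 2
    mean_y = sum(series) / n
    num = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(series))
    den = sum((t - mean_t) ** 2 for t in range(n))
    return num / den


# Hypothetical whitelist of tools the LLM is allowed to call.
TOOLS = {"moving_average": moving_average, "linear_trend": linear_trend}


def run_tool_call(llm_output, series):
    """Parse a JSON tool call emitted by an LLM and execute it on `series`."""
    call = json.loads(llm_output)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](series, **call.get("args", {}))


data = [1.0, 2.0, 3.0, 4.0, 5.0]
# Stand-in for text a model might generate (hypothetical).
smoothed = run_tool_call('{"tool": "moving_average", "args": {"window": 3}}', data)
```

Here `smoothed` is `[2.0, 3.0, 4.0]`. Keeping the numerical work in deterministic tools means raw numbers never pass through the tokenizer. The LLM's contribution is limited to choosing and parameterizing the tool.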

Implications and Prospects

The survey offers key insights into the practical and theoretical implications of adopting LLMs for time series analysis. The methodologies it discusses extend LLMs beyond text and image processing to the challenges of numerical data. Practically, these approaches could substantially benefit sectors that depend on time series analysis by combining LLM reasoning with numerical data. Theoretically, they offer fertile ground for further research into the generality and adaptability of LLM architectures.

Looking forward, the survey identifies several avenues for future work. These include improving the theoretical understanding of how LLMs operate on non-linguistic data, developing multimodal and multitask systems, and incorporating domain-specific knowledge into LLM-powered time series analysis. Handling large-scale data efficiently and addressing customization and privacy concerns are also essential to the development of this nascent field.

Conclusion

This survey provides a structured framework for analyzing how LLMs can contribute to time series analysis, highlighting significant prior contributions and identifying critical areas for future exploration. By systematically cataloging existing methodologies and examining both open challenges and future directions, it lays a foundation for continued research and development. It also advocates a nuanced understanding of how LLMs handle diverse data modalities. As the field progresses, these insights may drive innovation in machine learning applications across multidisciplinary domains.