Health-LLM: Personalized Retrieval-Augmented Disease Prediction System

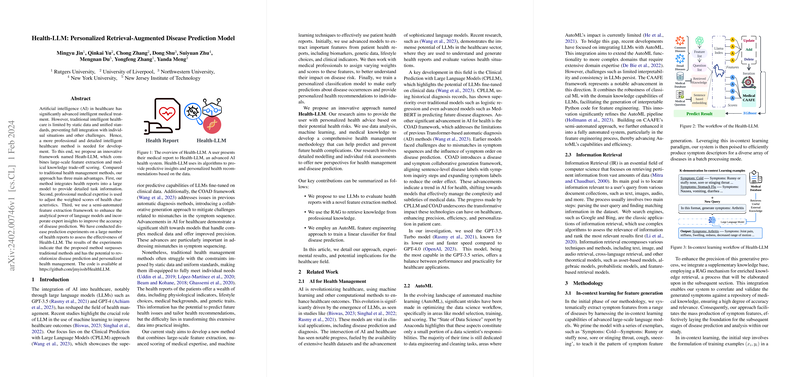

The paper presents Health-LLM, a personalized disease prediction system that combines large language models (LLMs) with retrieval-augmented generation (RAG). This combination targets two persistent challenges in healthcare AI: processing large volumes of patient data and coping with inconsistent standards for describing symptoms. The paper's main contribution is a framework that extends conventional health management applications by integrating data analytics, machine learning, and medical knowledge.

The system's core innovation combines large-scale feature extraction with medical knowledge trade-off scoring, a method that balances the general capabilities of an LLM against domain-specific medical expertise. Using in-context learning, Health-LLM extracts symptom features from detailed health reports so they can be used for prediction. It then processes patient data through the LlamaIndex framework, which brings in professional medical knowledge via RAG and assigns each feature a score relevant to disease prediction.
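The in-context learning step can be illustrated with a minimal sketch, assuming the LLM is shown a few worked examples (a report plus its feature scores) and asked to score a new report in the same format. The prompt template, the `Example` type, and the `name=score` response format are hypothetical choices for illustration; the paper's actual prompts are not reproduced, and no LLM is called here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Example:
    """A worked demonstration: a free-text report and its feature scores (hypothetical format)."""
    report: str
    features: Dict[str, float]


def build_icl_prompt(examples: List[Example], new_report: str, feature_names: List[str]) -> str:
    """Assemble a few-shot prompt asking an LLM to score symptom features in [0, 1]."""
    header = (
        "Score each feature from 0 to 1 by how strongly the report supports it.\n"
        f"Features: {', '.join(feature_names)}\n\n"
    )
    shots = []
    for ex in examples:
        # Render each demonstration in the same "name=score" format we expect back.
        scored = "; ".join(f"{name}={ex.features.get(name, 0.0):.1f}" for name in feature_names)
        shots.append(f"Report: {ex.report}\nScores: {scored}\n")
    # The new report ends with an open "Scores:" slot for the model to complete.
    return header + "\n".join(shots) + f"\nReport: {new_report}\nScores:"


def parse_scores(completion: str, feature_names: List[str]) -> Dict[str, float]:
    """Parse 'name=score; ...' output, clamping to [0, 1] and defaulting missing features to 0."""
    scores: Dict[str, float] = {}
    for part in completion.split(";"):
        name, sep, value = part.partition("=")
        name = name.strip()
        if not sep or name not in feature_names:
            continue
        try:
            scores[name] = min(max(float(value), 0.0), 1.0)
        except ValueError:
            continue  # skip values the model did not format as numbers
    return {name: scores.get(name, 0.0) for name in feature_names}
```

Clamping scores and defaulting missing features to 0.0 keeps the downstream scoring robust to malformed model output; this handling is an assumption of the sketch rather than a documented part of Health-LLM.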

In the reported evaluation, Health-LLM outperforms existing models, including GPT-3.5 and GPT-4, reaching an accuracy of 0.833 and an F1 score of 0.762. It also outperforms conventional methods such as Pretrain-BERT, TextCNN, and Hierarchical Attention, as well as more recent fine-tuned LLMs such as LLaMA-2. These experimental results suggest that the system could substantially improve personalized health management by providing precise disease predictions and tailored health recommendations based on patient data.
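Accuracy and F1 can diverge, as the reported 0.833 versus 0.762 shows. The sketch below computes both on toy labels, assuming a multi-class setting with macro-averaged F1 (the unweighted mean of per-class F1 scores). The averaging scheme used in the paper is an assumption here, and the labels are invented for illustration.

```python
def accuracy(y_true, y_pred) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred) -> float:
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    f1_scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1_scores.append(f1)
    return sum(f1_scores) / len(f1_scores)


# Toy labels (invented): three diagnosis classes, two of six predictions wrong.
y_true = ["diabetes", "diabetes", "anemia", "anemia", "hypertension", "hypertension"]
y_pred = ["diabetes", "diabetes", "anemia", "hypertension", "hypertension", "diabetes"]
print(f"accuracy={accuracy(y_true, y_pred):.3f}  macro_f1={macro_f1(y_true, y_pred):.3f}")
```

On this toy data accuracy is about 0.667 while macro-F1 is about 0.656: the hypertension class has precision and recall of 0.5, so its F1 of 0.5 pulls the average below the accuracy.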

The RAG mechanism is central to the system's feature extraction. By retrieving the most relevant passages from large medical knowledge bases, Health-LLM grounds its diagnostic reasoning in established domain knowledge. Pairing this retrieval step with the LLM's generative capabilities helps keep the system's outputs accurate and relevant to the medical domain, which is essential in clinical applications.
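The retrieval step and the knowledge trade-off score can be sketched as follows. This is a minimal stand-in under explicit assumptions: bag-of-words cosine similarity replaces the embedding-based retrieval a LlamaIndex pipeline would normally use, and `tradeoff_score` is a hypothetical rule that blends the LLM's feature score with the strongest retrieved evidence through a weight `alpha`. Neither the similarity measure nor the blending formula is taken from the paper.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lower-case bag of alphabetic words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, knowledge_base: list, k: int = 2) -> list:
    """Return the k passages most similar to the query."""
    q = tokenize(query)
    return sorted(knowledge_base, key=lambda doc: cosine(q, tokenize(doc)), reverse=True)[:k]


def tradeoff_score(llm_score: float, query: str, knowledge_base: list,
                   alpha: float = 0.5, k: int = 2) -> float:
    """Hypothetical trade-off: alpha * LLM score + (1 - alpha) * best retrieval support."""
    q = tokenize(query)
    passages = retrieve(query, knowledge_base, k)
    support = max((cosine(q, tokenize(doc)) for doc in passages), default=0.0)
    return alpha * llm_score + (1 - alpha) * support


# Invented mini knowledge base for illustration.
knowledge_base = [
    "Polydipsia, or excessive thirst, is a common symptom of diabetes.",
    "Chronic fatigue can accompany anemia and thyroid disorders.",
    "Hypertension is often asymptomatic.",
]
print(retrieve("excessive thirst", knowledge_base, k=1))
print(tradeoff_score(0.7, "excessive thirst", knowledge_base, alpha=0.6))
```

Setting `alpha` closer to 1 trusts the LLM's general judgment, while values closer to 0 lean on retrieved medical knowledge; this is one possible reading of the balance the trade-off scoring aims for.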

On the implementation side, Health-LLM uses XGBoost for the final disease prediction, training a classification model on the feature scores produced through LlamaIndex. The pipeline generates symptom features through in-context learning, enriches them with retrieved medical knowledge, and iteratively refines the model's predictions using automated feature engineering frameworks. Together, these steps let Health-LLM produce health insights that adapt to each patient's data, strengthening the personalization of care.
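The final classifier can be illustrated with a small, dependency-free sketch of gradient boosting in the style of XGBoost: depth-one trees (stumps) fit to the first and second derivatives of the logistic loss, with leaf weights -G/(H + lambda) as in XGBoost's regularized objective. This is a swapped-in teaching implementation, not the XGBoost library; in practice one would use the library directly (for example `xgboost.XGBClassifier`). It assumes binary labels (disease present or absent) and a matrix of per-feature scores of the kind the scoring step produces; the data below is invented.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(X, grad, hess, lam):
    """Find the split that most improves the regularized second-order objective."""
    G, H = sum(grad), sum(hess)
    base = G * G / (H + lam)
    best = None
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            gl = sum(g for row, g in zip(X, grad) if row[j] <= threshold)
            hl = sum(h for row, h in zip(X, hess) if row[j] <= threshold)
            gr, hr = G - gl, H - hl
            gain = gl * gl / (hl + lam) + gr * gr / (hr + lam) - base
            if best is None or gain > best[0]:
                # Optimal leaf weights: -G / (H + lambda) for each side.
                best = (gain, j, threshold, -gl / (hl + lam), -gr / (hr + lam))
    _, j, threshold, w_left, w_right = best
    return j, threshold, w_left, w_right


def train_boosted_stumps(X, y, rounds=20, learning_rate=0.3, lam=1.0):
    """Boost stumps on log loss; each stump stores already-shrunk leaf weights."""
    margins = [0.0] * len(X)
    stumps = []
    for _ in range(rounds):
        probs = [sigmoid(m) for m in margins]
        grad = [p - t for p, t in zip(probs, y)]   # first derivative of log loss
        hess = [p * (1 - p) for p in probs]         # second derivative of log loss
        j, thr, wl, wr = fit_stump(X, grad, hess, lam)
        wl, wr = learning_rate * wl, learning_rate * wr
        stumps.append((j, thr, wl, wr))
        for i, row in enumerate(X):
            margins[i] += wl if row[j] <= thr else wr
    return stumps


def predict_proba(stumps, row) -> float:
    """Sum the stump outputs into a margin and map it to a probability."""
    margin = sum(wl if row[j] <= thr else wr for j, thr, wl, wr in stumps)
    return sigmoid(margin)


# Invented feature scores: [thirst_score, fatigue_score]; label 1 = disease present.
X = [[0.9, 0.2], [0.8, 0.7], [0.85, 0.1], [0.1, 0.9], [0.2, 0.3], [0.15, 0.8]]
y = [1, 1, 1, 0, 0, 0]
stumps = train_boosted_stumps(X, y)
print(round(predict_proba(stumps, [0.9, 0.5]), 3), round(predict_proba(stumps, [0.1, 0.5]), 3))
```

Using second-order (gradient and Hessian) statistics with an L2 penalty `lam` mirrors how XGBoost chooses splits and leaf values; the real library adds deeper trees, column sampling, and many other refinements not shown here.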

Health-LLM has several practical implications for future AI-driven healthcare solutions. Future versions could incorporate multimodal inputs alongside text-based features to improve prediction accuracy and broaden the range of diseases the system can predict. Larger, more comprehensive datasets and more sophisticated model architectures could also help address current limitations in data consistency and model robustness.

In conclusion, Health-LLM represents a significant advance in personalized health management, offering a promising approach to handling complex healthcare data. By combining LLM capabilities with retrieval-augmented techniques, the paper shows how AI can be adapted to the nuanced needs of individual patients, contributing to ongoing progress in healthcare AI. Further work on multimodal integration and refined prediction algorithms could strengthen Health-LLM's standing as a transformative tool for personalized healthcare.