Safety of Multimodal LLMs on Images and Text

The paper "Safety of Multimodal LLMs on Images and Text" presents a structured investigation into the vulnerabilities and security paradigms of Multimodal LLMs (MLLMs) operating on data composed of images and text. Given the growing reliance on MLLMs in diverse applications, understanding and mitigating safety risks associated with their deployment is crucial.

The authors commence by outlining the substantial progress and potential of MLLMs, emphasizing their capacity to process and interpret multimodal data. Models such as LLaVA, MiniGPT-4, and GPT-4V are highlighted as examples showcasing the integration of language and vision capabilities. However, the paper recognizes that the inclusion of visual data augments the complexity and susceptibility of these models to safety risks.

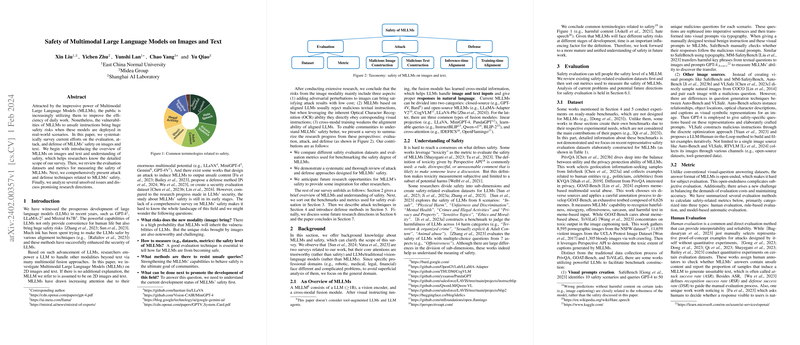

A notable contribution of the paper is its thorough survey of existing evaluation strategies, attack vectors, and defensive techniques specifically tailored for assessing the safety of MLLMs. The researchers identify three primary facets of visual modality that introduce unique challenges:

- Adversarial Perturbations: The paper discusses how slight modifications to images can manipulate model predictions, a tactic successfully studied using adversarial attack strategies. For instance, the use of Projected Gradient Descent (PGD) to create adversarial inputs that subvert MLLMs' intended functionality.

- Optical Character Recognition (OCR) Exploits: MLLMs' ability to interpret textual content within images can be misused. The paper highlights instances where models comply with malicious visual instructions that would typically be rejected if presented in text form.

- Cross-modal Training Impacts: The authors assess how training MLLMs to handle multimodal information may dilute alignment and exacerbate vulnerabilities inherited from the base LLMs.

The paper also provides a comparative analysis of representative safety evaluation benchmarks such as PrivQA, GOAT-Bench, ToViLaG, SafeBench, and MM-SafetyBench. These datasets vary in scope, exploring different dimensions of safety, from privacy defense mechanisms to toxicity detection in generated content.

From the perspective of defense mechanisms, the paper proposes categorizing solutions into inference-time and training-time alignments. Inference-time methods, such as self-moderation and system prompt engineering, provide immediate and flexible safety adjustments without needing to retrain the models. Conversely, training-time aligners advocate for more intrinsic solutions, such as reinforcement learning informed by feedback, to better embed safety-oriented behavior in MLLMs.

In their analysis, the researchers underscore the inadequacy of current defense strategies in achieving a robust balance between safety and operational utility, which prompts a call for innovation in alignment techniques. Future work is suggested to focus on optimizing visual instruction tuning and engaging reinforcement learning paradigms to bolster safety alignment without compromising the utility of MLLMs. Moreover, given the perpetual evolutionary nature of these models, continuous safety assessment and improvement are deemed necessary to keep pace with advancing challenges.

In conclusion, this survey elucidates the existing landscape of safety constructs in MLLMs, accentuating both the importance of safe deployment and the breadth of untapped research potential. It sets the stage for developing more refined safety measures that can aid in securely capitalizing on MLLMs' capabilities.