A Survey on Hallucination in Large Vision-Language Models

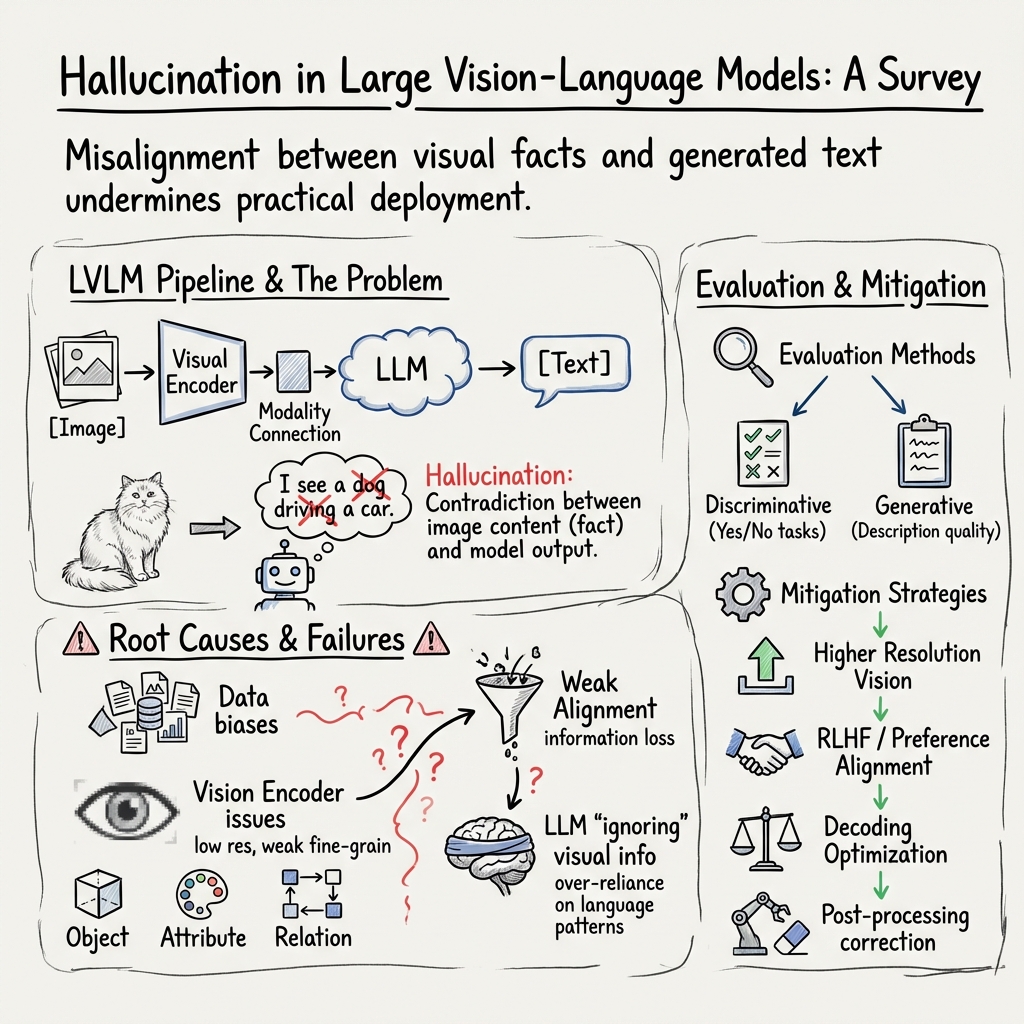

Abstract: The recent development of Large Vision-Language Models (LVLMs) has attracted growing attention within the AI landscape for its practical implementation potential. However, “hallucination”, or more specifically, the misalignment between factual visual content and corresponding textual generation, poses a significant challenge to the practical use of LVLMs. In this comprehensive survey, we dissect LVLM-related hallucinations in an attempt to establish an overview and facilitate future mitigation. Our scrutiny starts with a clarification of the concept of hallucinations in LVLMs, presenting a variety of hallucination symptoms and highlighting the unique challenges inherent in LVLM hallucinations. Subsequently, we outline the benchmarks and methodologies tailored specifically for evaluating hallucinations unique to LVLMs. Additionally, we delve into an investigation of the root causes of these hallucinations, encompassing insights from the training data and model components. We also critically review existing methods for mitigating hallucinations. The open questions and future directions pertaining to hallucinations within LVLMs are discussed to conclude this survey.



Explain it Like I'm 14

A simple guide to “A Survey on Hallucination in Large Vision-Language Models”

What this paper is about

This paper looks at a problem called “hallucination” in large vision-language models (LVLMs). LVLMs are AI systems that can look at pictures (vision) and talk about them (language). Think of them as a combination of “eyes” (the vision part) and a “talking brain” (the language part). A hallucination happens when the AI says something about an image that isn’t true, for example claiming there’s a cat in a photo that shows only birds. The paper doesn’t run new experiments; instead, it surveys (summarizes) what researchers already know: how hallucinations show up, how to test for them, why they happen, and how to reduce them.

The key questions this paper explores

- What does “hallucination” mean for models that see and talk?

- How can we measure and score hallucinations fairly?

- Why do these mistakes happen in the first place?

- What are the best ideas so far to reduce hallucinations?

- What should researchers do next to make these systems more trustworthy?

How the authors approached the problem (in simple terms)

This is a survey paper. That means the authors:

- Explain how LVLMs are built: a vision encoder (the “eyes”), a connection module (the “translator” that links vision to text), and a large language model, or LLM (the “talking brain”).

- Organize and explain the different types of hallucinations (like making up objects, getting attributes wrong, or mixing up relationships between things in the image).

- Review ways to test models for hallucinations:

  - “Generation” tests: Have the model describe an image and then check how much of that description is wrong.

  - “Discrimination” tests: Ask yes/no questions like “Is there a dog in the image?” and check if the model answers correctly.

- Gather and group the causes of hallucinations (data problems, vision limits, weak cross-modal alignment, and LLM habits).

- Summarize methods that try to fix hallucinations (better data, better visual detail, better alignment, better decoding, and post-processing fact-checkers).

- Point out gaps and future directions.
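To make the “eyes, translator, brain” pipeline concrete, here is a toy sketch. Everything in it is an illustrative assumption rather than any real model’s code: `toy_vision_encoder` is a hypothetical stand-in that summarizes each image patch with two numbers, and the weight matrices are made up. It only shows the data flow: patches become feature vectors, a connection module (a single linear layer or a small MLP) maps them into the LLM’s embedding space, and the resulting visual tokens are placed in front of the text tokens.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def toy_vision_encoder(image: Matrix, patch: int) -> List[Vector]:
    """Hypothetical stand-in for a real vision encoder (such as a ViT).

    Splits a grayscale image into non-overlapping patch x patch blocks and
    describes each block by its mean and max intensity.
    Assumes the image height and width are multiples of `patch`.
    """
    features = []
    for r in range(0, len(image), patch):
        for c in range(0, len(image[0]), patch):
            block = [image[i][j]
                     for i in range(r, r + patch)
                     for j in range(c, c + patch)]
            features.append([sum(block) / len(block), float(max(block))])
    return features


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply an (out_dim x in_dim) matrix by a length-in_dim vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def linear_connector(features: List[Vector], w: Matrix) -> List[Vector]:
    """Connection module as one linear layer: one 'visual token' per patch."""
    return [matvec(w, f) for f in features]


def mlp_connector(features: List[Vector], w1: Matrix, w2: Matrix) -> List[Vector]:
    """Connection module as a two-layer MLP (linear -> ReLU -> linear).
    ReLU is chosen here for simplicity; real models may use other activations."""
    return [matvec(w2, [max(0.0, h) for h in matvec(w1, f)]) for f in features]


def build_llm_input(visual_tokens: List[Vector], text_tokens: List[Vector]) -> List[Vector]:
    """The LLM receives the visual tokens followed by the embedded text prompt."""
    return visual_tokens + text_tokens


image = [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]
features = toy_vision_encoder(image, patch=2)   # 4 patches, 2 numbers each
W = [[1, 0], [0, 1], [1, 1]]                     # made-up 2 -> 3 projection
visual_tokens = linear_connector(features, W)
sequence = build_llm_input(visual_tokens, [[0.1, 0.2, 0.3]])  # 4 visual + 1 text token
```

Real connection modules are learned during training; the contrast between `linear_connector` and `mlp_connector` mirrors the survey’s point that a single linear layer may carry less visual detail into the LLM than a deeper module.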

Technical terms in everyday language:

- Vision encoder: the part that turns an image into numbers the computer can understand (like how your eyes send signals to your brain).

- Connection/alignment module: a translator that helps image information make sense to the LLM.

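- Instruction tuning: training a model on example instructions paired with good answers, so it learns to follow requests.

- RLHF (reinforcement learning from human feedback) and DPO (direct preference optimization): ways to teach models which answers people prefer, like giving feedback that helps them stop making things up.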

- Tokens: tiny pieces of information the model uses to represent words and parts of images.
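RLHF usually trains a separate reward model and then optimizes the LVLM against it with reinforcement learning; DPO skips the reward model and learns directly from pairs of answers where one is preferred. Below is a minimal sketch of the standard per-pair DPO loss, assuming you already have each answer’s total log-probability under the model being trained and under a frozen reference copy. The numbers are hypothetical; in the hallucination setting, the “chosen” answer would be a faithful description and the “rejected” one a hallucinated description of the same image.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is a total log-probability log p(answer | image, prompt),
    either under the model being trained (policy) or a frozen reference copy.
    beta = 0.1 is an illustrative value, not a recommended setting.
    """
    chosen_gain = policy_chosen - ref_chosen        # policy vs. reference on the faithful answer
    rejected_gain = policy_rejected - ref_rejected  # policy vs. reference on the hallucinated answer
    margin = beta * (chosen_gain - rejected_gain)
    return softplus(-margin)                        # equals -log(sigmoid(margin))


untrained = dpo_loss(-12.0, -13.0, -12.0, -13.0)  # policy == reference, so loss = log 2
improved = dpo_loss(-10.0, -15.0, -12.0, -13.0)   # faithful answer up, hallucinated answer down
```

The loss drops below log 2 only when training improves the faithful answer’s log-probability (relative to the reference) by more than the hallucinated answer’s; `beta` controls how strongly the model is kept close to the reference.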

What the paper found (and why it matters)

1) Types of hallucinations

- Judgment mistakes: answering a yes/no question incorrectly (e.g., “Yes, there’s a cat” when there isn’t).

- Description mistakes: making up or misreporting details when describing an image.

- By meaning (semantics), errors fall into:

  - Objects: inventing things that aren’t there (“a laptop” on an empty table).

  - Attributes: wrong details (wrong color, count, or size, such as calling short hair “long”).

  - Relations: wrong relationships (e.g., “the bike is in front of the man” when it’s behind).
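One way to see the three semantic types is to check extracted claims against a ground-truth annotation of the image. The sketch below assumes the claims have already been pulled out of the model’s answer and that a hypothetical `Scene` annotation lists the objects, their attributes, and their pairwise relations; neither structure comes from the paper. It reports which kind of hallucination, if any, each claim contains, checking object existence first because a wrong attribute or relation only makes sense for an object that is actually there.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass(frozen=True)
class Claim:
    """One atomic statement from a model's answer (extraction assumed done)."""
    kind: str                     # "object", "attribute", or "relation"
    subject: str                  # object the claim is about, e.g. "bike"
    value: Optional[str] = None   # attribute value ("red") or relation predicate ("behind")
    target: Optional[str] = None  # second object, for relation claims only


@dataclass
class Scene:
    """Hypothetical ground-truth annotation of one image."""
    objects: Set[str]
    attributes: Dict[str, Set[str]] = field(default_factory=dict)
    relations: Set[Tuple[str, str, str]] = field(default_factory=set)


def hallucination_type(claim: Claim, scene: Scene) -> Optional[str]:
    """Return 'object', 'attribute', or 'relation' if the claim is hallucinated, else None."""
    mentioned = [claim.subject] + ([claim.target] if claim.target else [])
    if any(obj not in scene.objects for obj in mentioned):
        return "object"      # mentions something that is not in the image
    if claim.kind == "attribute" and claim.value not in scene.attributes.get(claim.subject, set()):
        return "attribute"   # the object exists, but the detail is wrong
    if claim.kind == "relation" and (claim.subject, claim.value, claim.target) not in scene.relations:
        return "relation"    # both objects exist, but their relationship is wrong
    return None


scene = Scene(objects={"man", "bike", "table"},
              attributes={"bike": {"red"}},
              relations={("bike", "behind", "man")})
print(hallucination_type(Claim("object", "laptop"), scene))                        # object
print(hallucination_type(Claim("attribute", "bike", "blue"), scene))               # attribute
print(hallucination_type(Claim("relation", "bike", "in front of", "man"), scene))  # relation
print(hallucination_type(Claim("relation", "bike", "behind", "man"), scene))       # None
```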

Why this matters: LVLMs are used for assistance, education, accessibility (like helping describe images to people with low vision), and safety-critical tasks. False statements can confuse or mislead users.

2) How researchers test for hallucinations

Two main styles of evaluation:

- Non-hallucinatory generation (free descriptions):

  - Handcrafted pipelines: break the model’s sentence into simple facts and compare each fact to what’s in the image. These methods are clear but can struggle with many object types and open-ended language.

  - Model-based end-to-end scoring: use strong LLMs (like GPT-4) or trained classifiers to judge if a response hallucinates. These methods are flexible but depend on the judge’s own accuracy.

- Hallucination discrimination (yes/no questions):

  - Ask questions like “Is there a person?” about objects that are present and objects that are absent, using different strategies to pick trickier absent objects (for example, objects that often appear together with the ones actually in the image). Score how accurately the model answers.
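The handcrafted style can be sketched with a CHAIR-like metric, one of the classic object-hallucination scores for captions: CHAIR_i is the fraction of mentioned objects that are not in the image, and CHAIR_s is the fraction of captions that contain at least one such object. In this sketch the synonym map is a tiny hypothetical vocabulary (the original metric uses a much larger list built around the MSCOCO object categories), the ground-truth objects per image are assumed to be given, and each object category is counted once per caption.

```python
import re
from typing import List, Set, Tuple

# Hypothetical synonym map: surface word -> canonical object category.
SYNONYMS = {
    "man": "person", "woman": "person", "person": "person",
    "dog": "dog", "puppy": "dog", "cat": "cat", "kitten": "cat",
    "bird": "bird", "birds": "bird",
    "table": "dining table", "laptop": "laptop",
}


def mentioned_objects(caption: str) -> Set[str]:
    """Map words in the caption to object categories (each counted once)."""
    words = re.findall(r"[a-z]+", caption.lower())
    return {SYNONYMS[w] for w in words if w in SYNONYMS}


def chair(captions: List[str], gt_objects: List[Set[str]]) -> Tuple[float, float]:
    """Return (CHAIR_i, CHAIR_s) for captions and their ground-truth object sets."""
    n_mentioned = n_hallucinated = n_bad_captions = 0
    for caption, truth in zip(captions, gt_objects):
        mentioned = mentioned_objects(caption)
        hallucinated = mentioned - truth          # mentioned but not in the image
        n_mentioned += len(mentioned)
        n_hallucinated += len(hallucinated)
        n_bad_captions += 1 if hallucinated else 0
    chair_i = n_hallucinated / n_mentioned if n_mentioned else 0.0
    chair_s = n_bad_captions / len(captions) if captions else 0.0
    return chair_i, chair_s


captions = ["A man sits at a table with a laptop.", "Two birds sit on a branch."]
truth = [{"person", "dining table"}, {"bird"}]
print(chair(captions, truth))   # (0.25, 0.5)
```

Both “bird” and “birds” had to be listed in the map by hand, which illustrates why handcrafted pipelines struggle with many object types and open-ended wording.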

Takeaway: Both styles are useful. Generative tests look at richer language (including attributes and relations), while discrimination tests are simpler and focus mainly on object presence.
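For the yes/no style, scoring reduces to a binary classification report with “yes” (object present) as the positive class. The sketch below, with made-up answers, computes accuracy, precision, recall, F1, and the share of “yes” answers; benchmarks such as POPE report this set of numbers, though the parsing rule here (an answer counts as “yes” if it starts with “yes”) is a simplifying assumption. A yes-ratio well above the true share of “yes” labels is a quick sign of the answer bias discussed under the causes of hallucination.

```python
from typing import Dict, List


def yes_no_metrics(answers: List[str], labels: List[str]) -> Dict[str, float]:
    """Score yes/no answers, treating "yes" (object present) as the positive class.

    Simplifying assumption: an answer counts as "yes" if it starts with "yes".
    """
    pred = [a.strip().lower().startswith("yes") for a in answers]
    gold = [lab == "yes" for lab in labels]
    tp = sum(p and g for p, g in zip(pred, gold))
    fp = sum(p and not g for p, g in zip(pred, gold))
    tn = sum(not p and not g for p, g in zip(pred, gold))
    fn = sum(not p and g for p, g in zip(pred, gold))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(gold),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "yes_ratio": sum(pred) / len(pred),  # share of questions answered "yes"
    }


# A yes-biased model: it says "yes" to one absent object.
labels = ["yes", "no", "yes", "no"]
answers = ["Yes, there is.", "Yes.", "Yes", "No, there isn't."]
report = yes_no_metrics(answers, labels)
```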

3) Why hallucinations happen

Hallucinations are caused by multiple, intertwined factors—like a team problem where eyes, translator, and brain each contribute:

- Data problems:

  - Bias in training: too many “Yes” answers in datasets make models say “Yes” too often.

  - Low-quality or mismatched labels: auto-generated instructions may mention things not actually in the image.

  - Lack of variety: limited training on fine details or local relationships leads to generic or wrong guesses.

- Vision encoder limits (the “eyes”):

  - Low image resolution misses small or subtle details.

  - The encoder tends to focus on only the most obvious objects and struggles with counting, tiny items, or precise spatial relations.

- Weak alignment (the “translator”):

  - Simple connection layers may not transfer detailed visual info into the LLM’s space.

  - Using only a small number of visual tokens can leave out important information.

- LLM habits (the “talking brain”):

  - Not attending to the image enough and relying on language patterns to sound fluent.

  - Randomness in text generation can increase made-up content.

  - Being pushed to do tasks beyond what the model truly “knows.”
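The point about randomness can be illustrated with temperature sampling. Dividing the next-token scores (logits) by a temperature above 1 flattens the probability distribution, so unlikely tokens get sampled more often. The logits below are invented for illustration: imagine the model is continuing “On the table there is a” for an image that shows a cup and a plate but no laptop, so “laptop” is the token that would introduce a hallucinated object.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Next-token probabilities after dividing logits by `temperature` (> 0)."""
    scaled = {tok: v / temperature for tok, v in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


# Invented logits; by assumption the image shows a cup and a plate, no laptop.
logits = {"cup": 4.0, "plate": 3.0, "laptop": 1.0}

for t in (0.5, 1.0, 1.5):
    p = softmax_with_temperature(logits, t)
    print(f"T={t}: P(laptop) = {p['laptop']:.3f}")  # grows as T grows
```

Lower temperatures (or greedy decoding) do not remove hallucinations the model already rates as likely; they only reduce the extra ones that sampling introduces.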

4) Ways to reduce hallucinations

The survey groups fixes by where the problem occurs:

- Better data:

  - Add balanced yes/no questions and include “negative” examples (where the right answer is “No”).

  - Create richer, fine-grained datasets that explicitly label objects, attributes, and relations.

- Better “eyes” (vision):

  - Use higher-resolution images or split images into tiles to capture more detail.

  - Add extra perception signals (like segmentation maps or depth) to improve spatial understanding.

- Better “translator” (alignment):

  - Use stronger connection modules (e.g., multi-layer networks instead of a single linear layer).

  - Improve training objectives that explicitly bring vision and language features closer together.

- Better “brain” behavior (LLM):

  - Smarter decoding that pushes the model to pay attention to image tokens and not over-trust its own summaries.

  - Train with human preferences so non-hallucinated answers are favored (e.g., RLHF, DPO).

- Post-processing “fact-checkers”:

  - After the model answers, run a second pass that extracts key claims, checks them against visual evidence, and fixes mistakes, like a built-in editor.
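As one concrete example of “smarter decoding”, visual contrastive decoding (VCD), a method cited in the survey, compares the model’s next-token scores for the real image with its scores for a distorted (for example, heavily noised) copy. Tokens that score well even without a clear image are likely driven by language habits, so they are pushed down: the combined logits are (1 + α)·logit(real) − α·logit(distorted), restricted to tokens that are reasonably probable under the real image. The sketch assumes both sets of logits are already available; α, β, and the toy numbers are illustrative choices.

```python
import math
from typing import Dict


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Convert logits to probabilities; tokens with logit -inf get probability 0."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


def contrastive_probs(logits_real: Dict[str, float],
                      logits_distorted: Dict[str, float],
                      alpha: float = 1.0, beta: float = 0.1) -> Dict[str, float]:
    """VCD-style next-token distribution (a sketch, not a reference implementation)."""
    p_real = softmax(logits_real)
    cutoff = beta * max(p_real.values())  # plausibility constraint
    combined = {}
    for tok, logit in logits_real.items():
        if p_real[tok] >= cutoff:
            # reward what the real image supports, subtract what survives without it
            combined[tok] = (1 + alpha) * logit - alpha * logits_distorted[tok]
        else:
            combined[tok] = -math.inf     # implausible under the real image: never chosen
    return softmax(combined)


# Toy logits for the word after "On the table there is a" (made up).
real = {"cup": 2.8, "laptop": 3.0, "cloud": 0.0}
distorted = {"cup": 0.5, "laptop": 3.2, "cloud": 0.0}

print(max(real, key=real.get))  # laptop (greedy pick without contrast)
vcd = contrastive_probs(real, distorted)
print(max(vcd, key=vcd.get))    # cup (greedy pick with contrast)
```

“Laptop” scores almost as high with the distorted image as with the real one, which marks it as a language-prior guess; subtracting that score lets “cup” win, while the plausibility cutoff keeps “cloud” out entirely.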

5) Unique challenges

- Detecting errors is harder with both pictures and words, especially for attributes and relations.

- The causes are tangled: a mistake may be part vision, part language, part alignment.

- Some fixes (like ultra-high-resolution vision encoders) are expensive to train and run.
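A bit of arithmetic shows why higher resolution is expensive. The sketch assumes a hypothetical encoder that takes 336×336-pixel inputs and cuts them into 14×14-pixel patches, giving (336/14)² = 576 visual tokens per view, and a tiling scheme that adds one downscaled global view; real tiling schemes differ in their details. Because standard self-attention cost grows with the square of the sequence length, the last function estimates how much more attention work the visual tokens alone would need compared with a single view.

```python
import math

TILE = 336   # hypothetical encoder input size in pixels
PATCH = 14   # hypothetical patch size in pixels


def tokens_per_view(tile: int = TILE, patch: int = PATCH) -> int:
    """Visual tokens produced for one tile-sized view: one per patch."""
    return (tile // patch) ** 2


def visual_token_count(width: int, height: int, add_global_view: bool = True) -> int:
    """Tokens for an image split into TILE x TILE tiles (partial tiles rounded up),
    plus an optional downscaled view of the whole image."""
    n_tiles = math.ceil(width / TILE) * math.ceil(height / TILE)
    n_views = n_tiles + (1 if add_global_view else 0)
    return n_views * tokens_per_view()


def relative_attention_cost(n_tokens: int, baseline: int = tokens_per_view()) -> float:
    """Self-attention cost relative to one view, assuming cost ~ length**2
    and ignoring the text tokens."""
    return (n_tokens / baseline) ** 2


for side in (336, 672, 1344):
    n = visual_token_count(side, side, add_global_view=(side > TILE))
    print(side, n, relative_attention_cost(n))
```

In this setup, quadrupling the image’s side length (336 to 1344 pixels) multiplies the visual tokens by 17 and the attention cost over them by 289.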

What this means for the future

The paper suggests several directions that could make AI systems more trustworthy and useful:

- Better training goals: teach models to understand spatial details, count accurately, and ground words in specific parts of an image.

- More modalities: add signals like audio, video, or depth to strengthen understanding.

- LVLMs as “agents”: let the model call specialized tools (like an object detector or OCR) when it needs precise facts.

- Deeper interpretability: understand exactly when and why the model starts to “make things up,” so we can fix the root causes.
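The “agent” idea can be sketched as the model handing a factual sub-question to a tool and checking its own answer against the result. Below, `detect` stands for any object detector the system might call; here it is a hypothetical stub backed by a dictionary, and the claimed counts are assumed to have been extracted from a caption already. Each claim is marked as supported, as a likely hallucination, or as a count mismatch that a correction step could then fix.

```python
from typing import Callable, Dict

# Hypothetical tool interface: object name -> number of instances found in the image.
Detector = Callable[[str], int]


def verify_counts(claimed: Dict[str, int], detect: Detector) -> Dict[str, str]:
    """Check each claimed object count against a detector tool."""
    report = {}
    for name, n_claimed in claimed.items():
        n_found = detect(name)  # the "tool call"
        if n_found == 0:
            report[name] = "not detected (possible hallucination)"
        elif n_found != n_claimed:
            report[name] = f"count mismatch: claimed {n_claimed}, detected {n_found}"
        else:
            report[name] = "supported"
    return report


# Stub detector standing in for a real model; its outputs are made up.
FAKE_DETECTIONS = {"bird": 3, "branch": 1}


def fake_detect(name: str) -> int:
    return FAKE_DETECTIONS.get(name, 0)


# Claims extracted (by assumption) from "Three birds and a cat sit on two branches."
claims = {"bird": 3, "cat": 1, "branch": 2}
for obj, verdict in verify_counts(claims, fake_detect).items():
    print(f"{obj}: {verdict}")
```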

In short, if we want AI that sees and speaks reliably, we need better data, sharper “eyes,” smarter translation between vision and language, careful training with human feedback, and solid fact-checking. This survey maps the current landscape and points the way toward building LVLMs that you can trust to tell you what’s really in the picture.
