Overview of KVQuant: Enhancing Large Context Length Inference with KV Cache Quantization

The paper "KVQuant: Towards 10 Million Context Length LLM Inference with KV Cache Quantization" by Hooper et al. addresses the memory bottleneck that large language models (LLMs) face at long context lengths. LLMs are increasingly used for tasks that need large context windows, such as long-document summarization and multi-turn conversational systems. In these settings, the Key-Value (KV) cache that stores past activations grows linearly with context length and becomes the dominant contributor to memory consumption during inference. The paper introduces KVQuant, a quantization method that compresses KV cache activations to sub-4-bit precision with minimal accuracy degradation.

Methodological Advances

KVQuant combines several techniques that improve the accuracy of quantized KV cache activations:

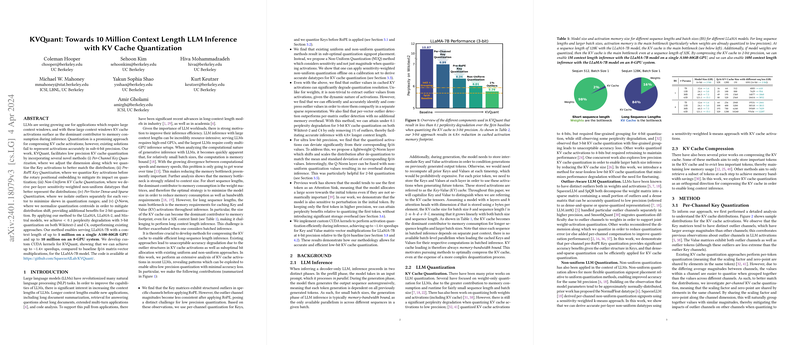

- Per-Channel Key Quantization: Key activations contain outlier channels whose magnitudes are consistently larger than those of the other channels. KVQuant quantizes Keys per channel, with one set of quantization parameters per channel computed across tokens, rather than per token. This confines each outlier channel to its own quantization range, so it no longer inflates the quantization error of the remaining channels. Value activations do not show this channel structure and are quantized per token.

- Pre-RoPE Key Quantization: Keys are quantized before the rotary positional embedding (RoPE) is applied. RoPE rotates pairs of channels by position-dependent angles, which mixes each outlier channel with its partner and obscures the per-channel outlier structure. Post-RoPE Keys are therefore harder to quantize per channel. KVQuant stores pre-RoPE Keys and applies RoPE on the fly after dequantization.

- Non-Uniform KV Cache Quantization: Instead of evenly spaced quantization levels, KVQuant places its quantization signposts with a sensitivity-weighted k-means procedure. Levels are concentrated where activation values are dense and where errors matter most to the model's output, which fits the non-uniform distribution of activations better than a uniform grid. These per-layer datatypes are derived offline from calibration data, so they add little computational cost at inference time.

- Per-Vector Dense-and-Sparse Quantization: In each vector (each Key channel or Value token), a small fraction of numerical outliers is stored separately in a sparse, higher-precision format. With these outliers removed, the remaining dense values span a much narrower range. The quantization levels can then be spaced more finely, which preserves more of the information in the KV cache.

- Q-Norm: At ultra-low precision, especially 2 bits, quantization can shift the distribution of the reconstructed values. Q-Norm counters this shift by normalizing the quantization centroids so that the dequantized values match the mean and standard deviation of the original distribution.
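The effect of the quantization axis on Keys can be illustrated with a minimal NumPy sketch.

- It builds synthetic Keys with two hypothetical outlier channels. A constant offset of ±30 stands in for real Key activations, which is an assumption.
- It applies plain asymmetric uniform 3-bit quantization along each axis and compares reconstruction error.
- It uses uniform quantization only, not KVQuant's non-uniform datatype.

```python
import numpy as np

def quantize_uniform(x: np.ndarray, bits: int, axis: int) -> np.ndarray:
    """Asymmetric uniform fake-quantization. Min/max are reduced along `axis`,
    so every slice along the other axis gets its own scale and offset."""
    levels = 2**bits - 1
    lo = x.min(axis=axis, keepdims=True)
    hi = x.max(axis=axis, keepdims=True)
    scale = np.maximum(hi - lo, 1e-8) / levels
    q = np.clip(np.round((x - lo) / scale), 0, levels)
    return q * scale + lo  # dequantize so the error can be measured in float

rng = np.random.default_rng(0)
keys = rng.standard_normal((256, 64))  # (tokens, channels); synthetic stand-in
keys[:, 3] += 30.0                     # hypothetical outlier channels with a
keys[:, 17] -= 30.0                    # consistently large magnitude

per_channel = quantize_uniform(keys, bits=3, axis=0)  # stats over tokens: one range per channel
per_token = quantize_uniform(keys, bits=3, axis=1)    # stats over channels: one range per token

mse_channel = np.mean((keys - per_channel) ** 2)
mse_token = np.mean((keys - per_token) ** 2)
print(f"per-channel MSE: {mse_channel:.4f}  per-token MSE: {mse_token:.4f}")
```

In the per-token case, every token's range must stretch from about -30 to +30 to cover both outlier channels. That leaves only coarse levels for the 62 ordinary channels.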
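A similar NumPy sketch shows why quantizing before RoPE helps. It makes three assumptions:

- It uses an interleaved-pair RoPE convention, where channels 2i and 2i+1 are rotated together. Some implementations pair channel i with channel i + d/2 instead.
- It uses synthetic Keys with hypothetical outlier channels.
- It uses plain uniform per-channel quantization.

The post-RoPE path quantizes the rotated Keys. The pre-RoPE path quantizes the raw Keys and applies RoPE after dequantization.

```python
import numpy as np

def quantize_per_channel(x: np.ndarray, bits: int) -> np.ndarray:
    """Uniform fake-quantization with one min/max range per channel (column)."""
    levels = 2**bits - 1
    lo = x.min(axis=0, keepdims=True)
    hi = x.max(axis=0, keepdims=True)
    scale = np.maximum(hi - lo, 1e-8) / levels
    return np.clip(np.round((x - lo) / scale), 0, levels) * scale + lo

def apply_rope(x: np.ndarray, base: float = 10000.0) -> np.ndarray:
    """Interleaved-pair RoPE: channels (2i, 2i+1) rotate by pos * base**(-2i/d)."""
    n, d = x.shape
    pos = np.arange(n)[:, None]
    freqs = base ** (-np.arange(0, d, 2) / d)
    angles = pos * freqs[None, :]  # (n, d/2) rotation angle per token and pair
    cos, sin = np.cos(angles), np.sin(angles)
    even, odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = even * cos - odd * sin
    out[:, 1::2] = even * sin + odd * cos
    return out

rng = np.random.default_rng(0)
keys = rng.standard_normal((256, 64))  # synthetic pre-RoPE Keys
keys[:, 3] += 30.0                     # hypothetical outlier channels
keys[:, 17] -= 30.0

ref = apply_rope(keys)                                   # exact post-RoPE Keys
post = quantize_per_channel(ref, bits=3)                 # quantize after RoPE
pre = apply_rope(quantize_per_channel(keys, bits=3))     # quantize, dequantize, then rotate

mse_post = np.mean((ref - post) ** 2)
mse_pre = np.mean((ref - pre) ** 2)
print(f"post-RoPE MSE: {mse_post:.4f}  pre-RoPE MSE: {mse_pre:.4f}")
```

After rotation, channels 2, 3, 16 and 17 swing between roughly -30 and +30 across positions, so their per-channel ranges widen. RoPE is a rotation, so applying it after dequantization leaves each token's error norm unchanged. The pre-RoPE path therefore keeps the low error of quantizing the unrotated Keys.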
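The signpost search can be sketched as a weighted one-dimensional k-means (Lloyd's algorithm) in NumPy. The following are assumptions chosen for illustration, not KVQuant's calibration pipeline:

- The per-value sensitivity weights are random placeholders. In KVQuant they come from a sensitivity estimate on calibration data.
- The inputs are Laplace-distributed.
- The setting is 3 bits (8 centroids).

```python
import numpy as np

def weighted_kmeans_1d(values, weights, n_centroids, iters=50):
    """Weighted Lloyd iterations: each centroid moves to the weighted mean
    of the values currently assigned to it."""
    centroids = np.quantile(values, np.linspace(0.0, 1.0, n_centroids))  # spread initial guesses
    for _ in range(iters):
        assign = np.argmin(np.abs(values[:, None] - centroids[None, :]), axis=1)
        for c in range(n_centroids):
            mask = assign == c
            w = weights[mask]
            if w.sum() > 0:  # leave an empty cluster's centroid where it is
                centroids[c] = np.sum(w * values[mask]) / w.sum()
    return np.sort(centroids)

def quantize_to_centroids(x, centroids):
    """Replace each value by its nearest centroid (the dequantized value)."""
    return centroids[np.argmin(np.abs(x[:, None] - centroids[None, :]), axis=1)]

rng = np.random.default_rng(0)
acts = rng.laplace(size=4096)                  # heavy-tailed stand-in for activations
sens = rng.standard_normal(4096) ** 2 + 1e-3   # placeholder sensitivity weights

nuq = weighted_kmeans_1d(acts, sens, n_centroids=8)
uniform = np.linspace(acts.min(), acts.max(), 8)  # evenly spaced 3-bit grid over the full range

err_nuq = np.sum(sens * (acts - quantize_to_centroids(acts, nuq)) ** 2) / sens.sum()
err_uniform = np.sum(sens * (acts - quantize_to_centroids(acts, uniform)) ** 2) / sens.sum()
print(f"weighted MSE  non-uniform: {err_nuq:.4f}  uniform: {err_uniform:.4f}")
```

Because the centroids are computed once and only looked up afterwards, the cost is paid offline. Inference only needs a table lookup per code.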
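A per-vector dense-and-sparse split can be sketched for a single vector as follows. The following choices are assumptions for illustration:

- a 1% outlier fraction with symmetric percentile thresholds;
- Student-t test data;
- a uniform dense quantizer.

In KVQuant the dense part would use the non-uniform datatype. The split is applied per channel for Keys and per token for Values.

```python
import numpy as np

def uniform_quantize(v, bits, lo, hi):
    """Clip to [lo, hi] and fake-quantize with 2**bits evenly spaced levels."""
    levels = 2**bits - 1
    scale = max(hi - lo, 1e-8) / levels
    return np.round((np.clip(v, lo, hi) - lo) / scale) * scale + lo

def dense_and_sparse_quantize(v, bits, outlier_frac=0.01):
    """Keep entries outside per-vector percentile thresholds as a sparse
    (index, value) list in full precision; quantize the rest over the narrow range."""
    lo_t, hi_t = np.quantile(v, [outlier_frac / 2, 1 - outlier_frac / 2])
    sparse_idx = np.flatnonzero((v < lo_t) | (v > hi_t))
    sparse_val = v[sparse_idx].copy()
    deq = uniform_quantize(v, bits, lo_t, hi_t)  # dense part
    deq[sparse_idx] = sparse_val                 # scatter the sparse outliers back
    return deq, sparse_idx, sparse_val

rng = np.random.default_rng(0)
v = rng.standard_t(df=2, size=4096)  # heavy-tailed stand-in for one KV vector

plain = uniform_quantize(v, 3, v.min(), v.max())  # range stretched by outliers
ds, idx, vals = dense_and_sparse_quantize(v, 3)

print(f"outliers kept: {idx.size}")
print(f"MSE plain: {np.mean((v - plain) ** 2):.4f}  dense-and-sparse: {np.mean((v - ds) ** 2):.4f}")
```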
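The centroid adjustment that Q-Norm describes amounts to an affine rescaling. The NumPy sketch below assumes a hypothetical fixed 2-bit codebook and Gaussian calibration data. It computes the mean and standard deviation of the dequantized values, then maps the centroids so those statistics match the original data. The function name `q_norm` is illustrative only.

```python
import numpy as np

def q_norm(centroids, original, codes):
    """Affine-rescale centroids so that centroids[codes] has the same mean and
    standard deviation as `original`. Codes are assigned before rescaling."""
    deq = centroids[codes]
    mu_q, sd_q = deq.mean(), deq.std()
    mu_x, sd_x = original.mean(), original.std()
    return (centroids - mu_q) / sd_q * sd_x + mu_x

rng = np.random.default_rng(0)
x = rng.standard_normal(10_000)                 # calibration data stand-in
centroids = np.array([-1.5, -0.5, 0.5, 1.5])    # hypothetical 2-bit codebook
codes = np.argmin(np.abs(x[:, None] - centroids[None, :]), axis=1)

normed = q_norm(centroids, x, codes)
before, after = centroids[codes], normed[codes]
print(f"std original: {x.std():.4f}  before: {before.std():.4f}  after: {after.std():.4f}")
```

In this sketch only the four codebook entries change, and the stored codes stay the same. The correction therefore adds no per-element work when dequantizing.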

Empirical Validation and Impact

Experiments on LLaMA, LLaMA-2, and Mistral models show that KVQuant incurs less than 0.1 perplexity degradation with 3-bit quantization on both Wikitext-2 and C4. KVQuant also enables serving LLaMA-7B with context lengths of up to 1 million tokens on a single A100-80GB GPU and up to 10 million tokens on an 8-GPU system. Its custom CUDA kernels deliver up to 1.4x speedups over baseline fp16 matrix-vector multiplications.
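Simple arithmetic shows how much memory a 10-million-token context demands. The sketch below assumes LLaMA-7B's configuration: 32 layers, hidden size 4096, and full multi-head attention, so Keys and Values each hold 4096 values per layer per token. It ignores sparse outliers, quantization metadata, and model weights, so it is a lower bound rather than a reproduction of the paper's measurements.

```python
def kv_cache_bytes(n_tokens: int, n_layers: int = 32, hidden: int = 4096, bits: int = 16) -> int:
    """Bytes for the K and V caches: 2 tensors x layers x hidden values per token."""
    return 2 * n_layers * hidden * n_tokens * bits // 8

for bits in (16, 4, 3, 2):
    gb = kv_cache_bytes(10_000_000, bits=bits) / 1e9
    print(f"{bits:>2}-bit KV cache at 10M tokens: {gb:,.0f} GB")
```

Under these assumptions, each token costs 0.5 MiB of KV cache in fp16. At 10 million tokens this is about 5.2 TB, compared with roughly 0.98 TB at 3 bits.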

Implications and Future Directions

These techniques matter for deploying LLMs in memory-constrained environments, particularly in domains that rely on long contexts, such as law, scientific research, and technical documentation processing. Future work could push quantization to even lower bit widths, further trading memory footprint against model accuracy. It could also extend these techniques to a broader range of models and tasks, which would strengthen the case for KV cache quantization as a standard part of LLM inference.

In conclusion, KVQuant represents a significant stride forward in the efficient use of memory for LLMs, promising enhanced performance in applications with large context length requirements. Its combination of technical innovation and empirical rigor makes it a compelling contribution to the field of AI and machine learning.