Assessing LLMs' Efficacy in Software Pentesting Through Prompt Engineering

Introduction

The field of cybersecurity has increasingly turned to artificial intelligence to bolster defenses against pervasive threats. This interest is especially strong in software pentesting, where identifying vulnerabilities in code is paramount and large language models (LLMs) could automate parts of the work. Responding to this emerging frontier, researchers at the University of South Florida evaluated how well LLMs identify software security vulnerabilities. Their hypothesis was that LLM-based AI agents can progressively improve at specific security tasks through interactive prompt engineering with human operators.

Background and Motivation

Software pentesting is an essential part of secure software development, aimed at uncovering vulnerabilities before attackers can exploit them. Traditional pentesting tools, such as static application security testing (SAST) tools, often return a high volume of false positives, which leads to pentester fatigue. LLMs, by contrast, hold promise because of their capacity for nuanced reasoning. Their behavior can also be adjusted on the fly by changing the prompt, without retraining the underlying model. The paper is motivated by the expectation that LLMs, given iteratively refined prompts, could become progressively better at pentesting tasks, offering a sustainable, dynamic approach to software security analysis.

Methodology

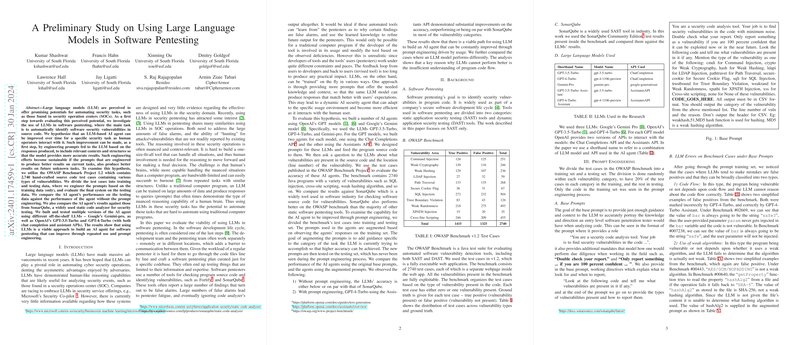

The study used the OWASP Benchmark Project 1.2, a suite of 2,740 Java test cases, each labeled as either containing a real vulnerability or not. These cases were used to train and evaluate several AI agents built on different off-the-shelf LLMs, including Google's Gemini-pro, OpenAI's GPT-3.5-Turbo, and GPT-4-Turbo. Each agent's performance, with and without prompt engineering, was compared against SonarQube, a widely used SAST tool. The benchmark's test cases were divided into a training set and a testing set. The engineered prompts were refined based on the agents' performance on the training set and then evaluated on the unseen testing set.
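The train/test protocol described above can be made concrete with a minimal sketch. It assumes each benchmark test case is reduced to a hypothetical `BenchmarkCase` record with a name, a vulnerability category, and a ground-truth label. The 50/50 per-category split and the fixed seed are illustrative choices, not the paper's documented setup. Predictions are scored as true positive rate minus false positive rate (TPR - FPR), the accuracy score the OWASP Benchmark scorecards report. Whether the paper used exactly this metric is an assumption.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkCase:
    """Hypothetical record for one labeled benchmark test case."""
    name: str            # test case identifier
    category: str        # vulnerability category, e.g. "sqli" or "hash"
    is_vulnerable: bool  # ground truth: real vulnerability or false-positive trap


def stratified_split(cases, train_fraction=0.5, seed=0):
    """Shuffle each category separately and split it into train/test parts."""
    rng = random.Random(seed)
    by_category = defaultdict(list)
    for case in cases:
        by_category[case.category].append(case)
    train, test = [], []
    for category in sorted(by_category):
        group = list(by_category[category])
        rng.shuffle(group)
        cut = round(len(group) * train_fraction)
        train.extend(group[:cut])
        test.extend(group[cut:])
    return train, test


def score(cases, predictions):
    """Return (TPR, FPR, TPR - FPR); `predictions` maps name -> flagged as vulnerable."""
    tp = fn = fp = tn = 0
    for case in cases:
        flagged = predictions[case.name]
        if case.is_vulnerable and flagged:
            tp += 1
        elif case.is_vulnerable:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    tpr = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return tpr, fpr, tpr - fpr


def score_by_category(cases, predictions):
    """Apply `score` separately to each vulnerability category."""
    by_category = defaultdict(list)
    for case in cases:
        by_category[case.category].append(case)
    return {cat: score(group, predictions) for cat, group in by_category.items()}


# Toy usage: 12 synthetic cases in two categories.
cases = [
    BenchmarkCase(f"T{i:02d}", "sqli" if i % 2 else "hash", i % 3 == 0)
    for i in range(12)
]
train_cases, eval_cases = stratified_split(cases)
perfect = {c.name: c.is_vulnerable for c in cases}
flag_all = {c.name: True for c in cases}
print(score(cases, perfect))   # (1.0, 0.0, 1.0)
print(score(cases, flag_all))  # (1.0, 1.0, 0.0)
```

Subtracting FPR is what penalizes a noisy tool. An agent that flags everything reaches a perfect TPR but scores 0.0, which mirrors the false-positive problem with SAST tools noted earlier. Per-category scores from `score_by_category` are the natural granularity for comparing an agent against SonarQube.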

Results

The results of this exploratory study present a nuanced picture:

- AI agents leveraging LLMs exhibited potential for improving their accuracy in identifying software vulnerabilities through prompt engineering.

- In particular, the GPT-4-Turbo agent built on OpenAI's Assistants API improved notably after prompt engineering, performing on par with or better than SonarQube in most vulnerability categories.

- The study identified two main sources of error under the base prompts: misinterpreting code flow and misidentifying weak algorithms. Prompt engineering substantially reduced both kinds of error.
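The iterative prompt refinement described in these results can be pictured as a greedy search over prompt additions, judged only on the training split. This is a toy sketch under loudly stated assumptions. `stub_classifier` is a hypothetical stand-in for an LLM-based agent, not any vendor's API. The two guidance strings, aimed at the code-flow and weak-algorithm mistakes, are invented for illustration. The accept-if-better rule and the use of plain accuracy instead of TPR - FPR are simplifications, not the paper's documented procedure.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, bool]               # (code snippet, ground-truth is_vulnerable)
Classifier = Callable[[str, str], bool]  # (prompt, code) -> flagged as vulnerable


def accuracy(prompt: str, examples: Sequence[Example], classify: Classifier) -> float:
    """Fraction of examples the agent labels correctly under `prompt`."""
    correct = sum(classify(prompt, code) == label for code, label in examples)
    return correct / len(examples)


def refine_prompt(base_prompt: str, candidates: Sequence[str],
                  train: Sequence[Example], classify: Classifier) -> Tuple[str, float]:
    """Greedily append each candidate instruction that raises training accuracy."""
    prompt = base_prompt
    best = accuracy(prompt, train, classify)
    for guidance in candidates:
        trial = prompt + "\n" + guidance
        trial_acc = accuracy(trial, train, classify)
        if trial_acc > best:  # keep an addition only if it strictly helps
            prompt, best = trial, trial_acc
    return prompt, best


def stub_classifier(prompt: str, code: str) -> bool:
    """Hypothetical toy stand-in for an LLM agent; each 'skill' switches on with a prompt hint."""
    if "MD5" in code:          # weak-algorithm case
        return "MD5" in prompt
    if "if (false)" in code:   # code-flow case: the sink is unreachable
        return "never execute" not in prompt
    return "executeQuery" in code  # ordinary input-reaches-query case


BASE = "Does the following Java code contain a security vulnerability? Answer yes or no."
CANDIDATES = [  # hypothetical guidance strings
    "Consider code flow: statements in branches that never execute cannot reach a sink.",
    "Treat MD5, SHA-1, and DES as weak algorithms when used for security purposes.",
]
TRAIN = [  # toy labeled snippets, not real benchmark cases
    ('MessageDigest.getInstance("MD5");', True),
    ("if (false) { stmt.executeQuery(userInput); }", False),
    ("stmt.executeQuery(userInput);", True),
    ('MessageDigest.getInstance("SHA-256");', False),
]

final_prompt, train_acc = refine_prompt(BASE, CANDIDATES, TRAIN, stub_classifier)
print(train_acc)  # 1.0 (the base prompt alone scores 0.5)
# The refined prompt would then be scored once on the held-out test split.
```

Keeping refinement confined to the training examples is what makes the final held-out evaluation meaningful. Candidates that look helpful but do not raise training accuracy are simply dropped.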

Implications and Future Directions

This investigation suggests a viable path for LLMs in automating aspects of software pentesting, with prompt engineering as a critical mechanism for adapting these models to the nuances of security analysis. The findings underscore the importance of prompt engineering strategies tailored to the specific strengths and weaknesses of each LLM. Looking ahead, the paper lays the groundwork for deeper exploration of the limits of LLM capabilities in security-centric applications. This includes the potential for real-time learning and adaptation as software development practices and threat landscapes evolve.

Conclusion

This preliminary study from the University of South Florida offers a promising outlook on integrating LLMs into software pentesting. It highlights the role of prompt engineering in improving the accuracy and adaptability of AI agents. As cybersecurity moves increasingly toward automation, its insights could inform the development of more effective, dynamic tools for securing software.

This research was partially supported by the National Science Foundation and the Office of Naval Research, reflecting a broad interest in advancing the frontiers of cybersecurity through the integration of cutting-edge artificial intelligence technologies.