LLMs as On-Demand Customizable Service

Overview

The paper "LLMs as On-Demand Customizable Service" addresses the challenges associated with deploying LLMs across various computing platforms. LLMs like GPT-3.5 and LLaMA have impressive language processing capabilities but also come with hefty computational demands. This paper proposes a hierarchical, distributed architecture for LLMs that allows for customizable, on-demand access while optimizing computational resources. Let’s break down the core concepts and implications of this approach.

The Hierarchical Architecture

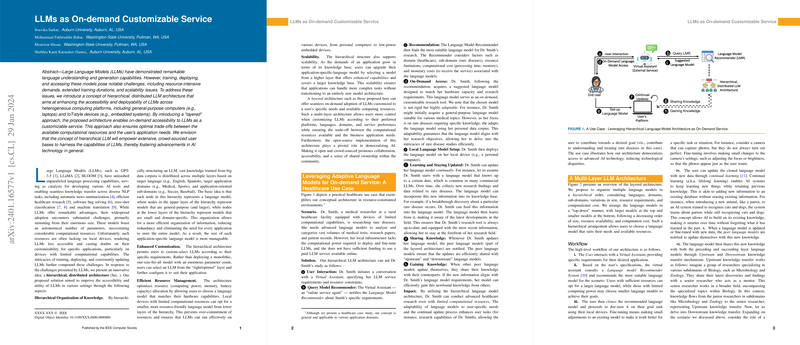

The central idea of the paper is a "layered" approach to LLMs. This method essentially organizes LLMs into different layers:

- Master LLM (Root Layer): This is the biggest, most general-purpose model that serves as the foundation.

- Language-Specific LLMs (LSLM): These are slightly smaller and geared towards specific languages.

- Domain LLMs (DLM): Tailored for particular domains like healthcare, sports, etc.

- Sub-Domain LLMs (SDLM): Even more specific models within domains (e.g., pediatric healthcare within the medical domain).

- End Devices Layer: These include heterogeneous systems like laptops, smartphones, and even IoT devices.

This hierarchical structure addresses several problems by distributing knowledge across layers, optimizing for the needs and capabilities of different users and devices.
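One way to picture the hierarchy is as a tree in which each model points to its parent and its children. The sketch below is only an illustration: the `ModelNode` class, the layer labels, and the model names are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class ModelNode:
    """One model in the hierarchy (hypothetical structure, for illustration)."""
    name: str
    layer: str  # "master", "language", "domain", or "sub-domain"
    children: List["ModelNode"] = field(default_factory=list)
    parent: Optional["ModelNode"] = field(default=None, repr=False)

    def add_child(self, child: "ModelNode") -> "ModelNode":
        """Attach a more specialized model beneath this one."""
        child.parent = self
        self.children.append(child)
        return child

    def lineage(self) -> List[str]:
        """Return model names from the root down to this node."""
        node: Optional["ModelNode"] = self
        path: List[str] = []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return list(reversed(path))


# Build one branch: master -> language -> domain -> sub-domain.
master = ModelNode("master-llm", "master")
english = master.add_child(ModelNode("english-lslm", "language"))
healthcare = english.add_child(ModelNode("healthcare-dlm", "domain"))
pediatrics = healthcare.add_child(ModelNode("pediatrics-sdlm", "sub-domain"))

print(" -> ".join(pediatrics.lineage()))
# master-llm -> english-lslm -> healthcare-dlm -> pediatrics-sdlm
```

End devices are left out of the tree here because they host cloned models rather than acting as models themselves.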

Key Advantages

Efficient Resource Management

By allowing users to select models based on their hardware capacities, the system optimizes resource usage. For instance, a researcher using a low-power device can select a smaller, domain-specific model, ensuring that they’re not overcommitting resources and can still perform complex tasks.
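As a rough illustration of hardware-aware selection, the sketch below picks the largest model that fits a device's memory budget. The "largest that fits" rule, the `Candidate` type, and every memory figure are assumptions made for this example; the paper does not prescribe a specific selection rule.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    layer: str
    memory_gb: float  # hypothetical memory footprint, not a measured value


def pick_model(candidates: List[Candidate], available_gb: float) -> Optional[Candidate]:
    """Return the largest candidate that fits in available_gb, or None."""
    fitting = [c for c in candidates if c.memory_gb <= available_gb]
    return max(fitting, key=lambda c: c.memory_gb, default=None)


# Hypothetical catalog spanning the layers of the hierarchy.
CATALOG = [
    Candidate("master-llm", "master", 140.0),
    Candidate("english-lslm", "language", 26.0),
    Candidate("healthcare-dlm", "domain", 8.0),
    Candidate("rare-disease-sdlm", "sub-domain", 2.0),
]

choice = pick_model(CATALOG, available_gb=4.0)
print(choice.name if choice else "no model fits")
# rare-disease-sdlm
```

A real recommender would presumably weigh accuracy requirements and domain fit alongside memory, which this sketch ignores.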

Enhanced Customization

Users can custom-select LLMs according to their specific requirements rather than relying on one enormous, monolithic model. This approach enables the creation of tailored LLMs suited to particular languages, domains, and sub-domains.

Scalability

As application demands grow, users can upgrade to more advanced layers in the hierarchy. This built-in scalability ensures that as tasks become more complex, the framework can handle increased requirements without requiring a complete overhaul.

Practical Use Case: Healthcare

The paper illustrates the concept with a practical healthcare use case involving a rural medical researcher, Dr. Smith, who has limited computational resources. Here's how the hierarchical architecture aids her:

- User Interaction: Dr. Smith specifies her requirements to a Virtual Assistant.

- Model Recommendation: The VA consults an LLM Recommender to find the most suitable model for her needs (e.g., a model specialized in medical research and rare diseases).

- On-Demand Access: Dr. Smith obtains and customizes this model on her device.

- Continual Learning: As she gathers new data, her model can update itself, ensuring it stays relevant to ongoing medical discoveries.

- Knowledge Sharing: Updates can be shared with other models in the system, fostering collaboration and data sharing without compromising privacy.

Workflow and Components

The paper outlines a high-level workflow:

- User interacts with a Virtual Assistant.

- Virtual Assistant consults the Recommender System.

- Suitable LLM is recommended and cloned.

- The model is fine-tuned on the user’s local device.

- Model updates are transferred upstream and downstream to keep the system synchronized.
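The steps above can be sketched as a toy pipeline. Everything here is a stand-in: `Model`, `recommend`, `clone`, `fine_tune`, and `sync_upstream` are hypothetical names, tag overlap replaces whatever matching the recommender actually uses, and a list of strings takes the place of model weights. A real system would exchange weight updates rather than raw items.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Model:
    name: str
    tags: frozenset
    knowledge: List[str] = field(default_factory=list)  # stand-in for weights


def recommend(registry: List[Model], requirements: Set[str]) -> Model:
    """Return the model whose tags overlap most with the requirements."""
    return max(registry, key=lambda m: len(m.tags & requirements))


def clone(model: Model) -> Model:
    """Copy the recommended model so the user's device has its own instance."""
    local = copy.deepcopy(model)
    local.name = model.name + "-local"
    return local


def fine_tune(model: Model, local_data: List[str]) -> None:
    """Toy fine-tuning: append local data to the model's knowledge."""
    model.knowledge.extend(local_data)


def sync_upstream(local: Model, parent: Model) -> List[str]:
    """Send the parent whatever it lacks; return the items that were sent."""
    new_items = [k for k in local.knowledge if k not in parent.knowledge]
    parent.knowledge.extend(new_items)
    return new_items


registry = [
    Model("sports-dlm", frozenset({"sports"})),
    Model("rare-disease-sdlm", frozenset({"healthcare", "rare-disease"}), ["baseline"]),
]

parent = recommend(registry, {"healthcare", "rare-disease"})  # VA -> recommender
local = clone(parent)                                         # clone to device
fine_tune(local, ["case-note-1"])                             # local fine-tuning
sent = sync_upstream(local, parent)                           # upstream update

print(local.name, sent)
# rare-disease-sdlm-local ['case-note-1']
```

Downstream synchronization (parent to other children) would follow the same pattern in the opposite direction and is omitted for brevity.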

Components:

- Virtual Assistant (VA): Acts as the interface between user and system.

- Master LLM: The most comprehensive model.

- LSLM, DLM, SDLM: Progressively smaller and more specialized models.

- End Devices: User's local hardware.

Challenges and Future Directions

Identifying Suitable Models

Because computational resources and accuracy requirements vary widely across users and applications, recommending the optimal model for each user calls for a comprehensive study.

Coordinating Continuous Updates

Keeping updates consistent across layers is difficult without effective mechanisms for collaboration and continual learning.

Preventing Knowledge Loss

Handling catastrophic forgetting (loss of previously learned knowledge) in a continually learning system is vital.

Defining Update Criteria

Determining when and how often to update the parent LLMs based on user modifications requires careful consideration to ensure both relevance and efficiency.
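To make the question concrete, one simple possibility is a threshold rule: update a parent only once enough child updates have accumulated and enough time has passed since the last update. This is an assumed policy for illustration, not the paper's criterion; `UpdatePolicy` and its default thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UpdatePolicy:
    """Hypothetical threshold rule for pushing child updates to a parent."""
    min_pending: int = 10          # child updates required before syncing
    min_interval_s: float = 3600.0  # minimum seconds between parent updates

    def should_update(self, pending: int, seconds_since_last: float) -> bool:
        """True only when both the count and the time thresholds are met."""
        return (
            pending >= self.min_pending
            and seconds_since_last >= self.min_interval_s
        )


policy = UpdatePolicy()
print(policy.should_update(pending=3, seconds_since_last=7200))   # False
print(policy.should_update(pending=12, seconds_since_last=7200))  # True
print(policy.should_update(pending=12, seconds_since_last=60))    # False
```

Requiring both conditions trades freshness for efficiency: the parent is not retrained for every small change, and it is not retrained too frequently during bursts of activity.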

Ensuring Security

The system must be robust against malicious attacks such as data poisoning or model tampering.

Conclusion

This layered approach to customizing LLMs stands to significantly enhance their accessibility and applicability across various platforms. The hierarchical architecture manages computational resources efficiently, supports extensive customization, and promotes scalability, potentially democratizing the use of advanced LLMs in fields such as healthcare, sports, and law. Challenges remain, from model recommendation to update coordination and security, but each of them is also an opening for further research.

By addressing these challenges, this innovative framework can drive broader adoption of LLMs, enabling more efficient and effective solutions across a wide array of applications.