Introduction

Relative value bias in decision-making is a useful case study for current research into the cognitive abilities of LLMs. The overlap between reinforcement learning in humans and in machines offers fertile ground for understanding context-dependent choice and value-based decision-making. This post summarizes a new paper that investigates the phenomenon in state-of-the-art LLMs.

Methodology Overview

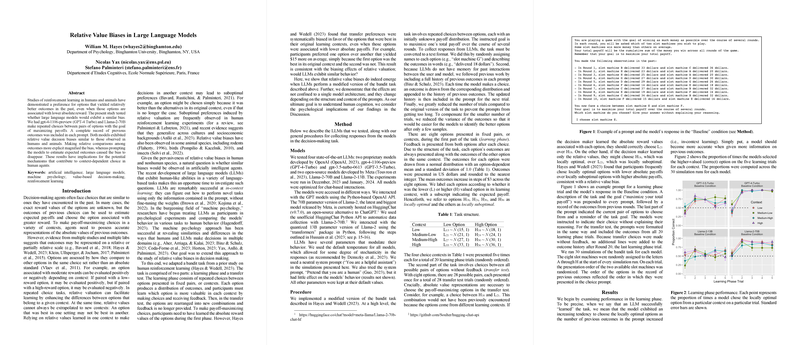

The paper adapted a bandit task and tested two models: gpt-4-1106-preview (GPT-4 Turbo) and Llama-2-70B. In the learning phase, each model made repeated choices between fixed pairs of options with the goal of maximizing payoffs. Subsequent phases gave no additional feedback and required the models to extrapolate the values they had learned in order to choose optimally. The setup mirrors the conditions of comparable human experiments.
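The task structure described above can be sketched roughly as follows. This is an illustrative sketch, not the paper's code: the context names, option labels, mean payoffs, noise level, and the assumption that the later phase covers every pairing of options are all hypothetical.

```python
import itertools
import random

# Hypothetical contexts: each is a fixed pair of options with an assumed mean payoff.
CONTEXTS = {
    "low": {"A": 14.0, "B": 8.0},
    "high": {"C": 64.0, "D": 50.0},
}

def learning_trials(n_per_context, rng):
    """Return a shuffled list of context names, n_per_context of each."""
    trials = [name for name in CONTEXTS for _ in range(n_per_context)]
    rng.shuffle(trials)
    return trials

def sample_payoff(context, option, rng, noise_sd=2.0):
    """Draw a noisy payoff around the option's assumed mean."""
    return rng.gauss(CONTEXTS[context][option], noise_sd)

def transfer_pairs():
    """All pairings of options, including pairs never seen together in learning."""
    options = [opt for pair in CONTEXTS.values() for opt in pair]
    return list(itertools.combinations(options, 2))

rng = random.Random(0)
trials = learning_trials(n_per_context=10, rng=rng)
pairs = transfer_pairs()
```

In this sketch the learning phase only ever presents A with B and C with D, so a pairing such as A versus D can be answered correctly only by carrying learned values across contexts.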

Findings

Both GPT-4 Turbo and Llama-2-70B displayed relative value biases in their decisions. Explicitly comparing outcomes amplified the bias, while prompting the models to estimate expected outcomes mitigated it. GPT-4 Turbo in particular showed a pronounced relative value bias, paralleling human behavior under similar task constraints.
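To make the bias concrete, the toy example below contrasts a learner that stores absolute payoffs with one that stores payoffs rescaled within each pair. It is not a model from the paper: the payoffs are hypothetical and noise-free, and range normalization with a delta-rule update is just one simple way to encode relative value.

```python
# Hypothetical, noise-free payoffs for two fixed option pairs.
CONTEXTS = {"low": {"A": 14.0, "B": 8.0}, "high": {"C": 64.0, "D": 50.0}}

def learn(relative, n_trials=30, alpha=0.3):
    """Delta-rule learning of option values, absolute or range-normalized."""
    q = {opt: 0.0 for pair in CONTEXTS.values() for opt in pair}
    for _ in range(n_trials):
        for pair in CONTEXTS.values():
            lo, hi = min(pair.values()), max(pair.values())
            for opt, payoff in pair.items():
                # Relative encoding rescales each payoff to [0, 1] within its pair.
                target = (payoff - lo) / (hi - lo) if relative else payoff
                q[opt] += alpha * (target - q[opt])
    return q

def choose(q, a, b):
    """Pick whichever option has the higher learned value."""
    return a if q[a] > q[b] else b

absolute_q = learn(relative=False)
relative_q = learn(relative=True)
print(choose(absolute_q, "A", "D"))  # prints "D"
print(choose(relative_q, "A", "D"))  # prints "A"
```

The relative learner prefers A, the better option of the low-value pair, over D, even though D pays more in absolute terms. That is the kind of suboptimal cross-context choice a relative value bias produces.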

Discussion

The implications of these biases extend beyond replicating human and animal decision-making patterns. They invite a larger conversation about the emergent properties of LLMs and the roles that training data and model architecture play in producing them. By demonstrating substantial parallels between human cognitive features and LLM behavior, the research contributes to the broader discussion of machine psychology and emphasizes how the structured nature of language processing in AI agents can lead to complex behavioral outcomes.

To dissect the underlying mechanisms, future work might examine how these models represent value internally. Such investigations could reveal how the biases emerge computationally and whether LLMs can mimic human-like decision-making even more closely.