Introduction

Large language models (LLMs) have become central to many natural language processing tasks because of their ability to understand and generate human-like text. Deploying them remains difficult, however, mainly because of their large memory footprint and computational cost. Inference systems commonly store weights in FP16, a wider data type than model quality requires, which compounds both problems. 6-bit floating-point quantization (FP6) has been gaining recognition as a promising alternative because it offers a good trade-off between inference cost and model quality.
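To make the memory argument concrete, here is a rough back-of-the-envelope estimate of weight storage at different bit-widths. It is only a sketch: the 70-billion parameter count is an assumed round figure for a 70B-class model, the helper name `weight_memory_gb` is hypothetical, and the estimate ignores activations, the KV cache, and any per-group scaling metadata that a real quantized model would also store.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Weight-only footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: a round 70e9 parameters for a 70B-class model.
NUM_PARAMS = 70e9

fp16_gb = weight_memory_gb(NUM_PARAMS, 16)  # 140.0 GB
fp6_gb = weight_memory_gb(NUM_PARAMS, 6)    # 52.5 GB

print(f"FP16 weights: {fp16_gb:.1f} GB")
print(f"FP6 weights:  {fp6_gb:.1f} GB")
print(f"Reduction:    {fp16_gb / fp6_gb:.2f}x")
```

Under these assumptions the weights shrink from about 140 GB to about 52.5 GB, which is the difference between needing several accelerators and fitting the weights on one large-memory device.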

6-bit Quantization Challenges and FP6-Centric Solution

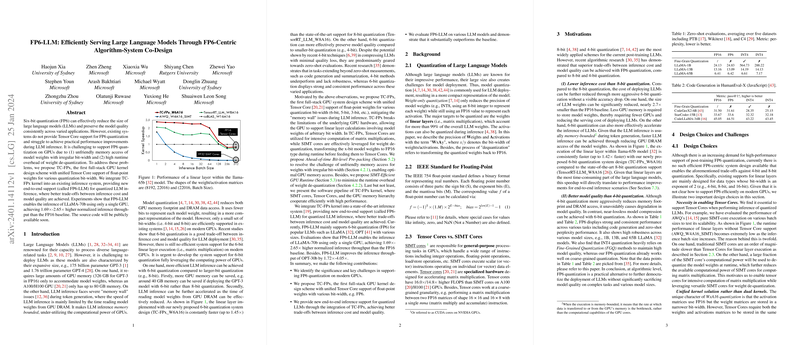

The paper argues that existing systems lack support for the FP6 data type and struggle to turn it into practical performance gains. Two main hurdles stand out: the irregular bit-width of the model weights leads to unfriendly memory access patterns, and de-quantizing the weights adds runtime overhead. To address both, the authors propose TC-FPx, a full-stack GPU kernel design scheme that they describe as the first to provide unified support across various quantization bit-widths. By integrating TC-FPx into their inference system, FP6-LLM, the authors open a path for quantized LLM inference that promises a better trade-off between inference cost and model quality.
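The "irregular bit-width" problem is easier to see with a small example. The sketch below packs 6-bit codes densely into bytes: four codes fill exactly three bytes, so most codes straddle a byte boundary and cannot be fetched with a simple aligned load. This is not the TC-FPx memory layout, which the summary above does not describe; `pack_6bit` and `unpack_6bit` are hypothetical helpers that use a plain most-significant-bit-first layout chosen only for illustration.

```python
def pack_6bit(codes):
    """Densely pack 6-bit codes (0..63) into bytes, MSB first.

    Four 6-bit codes occupy 24 bits, i.e. exactly 3 bytes.
    """
    if len(codes) % 4 != 0:
        raise ValueError("expected a multiple of 4 codes (4 codes fill 3 bytes)")
    out = bytearray()
    for i in range(0, len(codes), 4):
        group = codes[i:i + 4]
        if any(not 0 <= c < 64 for c in group):
            raise ValueError("each code must fit in 6 bits")
        a, b, c, d = group
        word = (a << 18) | (b << 12) | (c << 6) | d  # 24-bit group
        out += word.to_bytes(3, "big")
    return bytes(out)


def unpack_6bit(packed):
    """Inverse of pack_6bit: recover the list of 6-bit codes."""
    if len(packed) % 3 != 0:
        raise ValueError("expected a multiple of 3 bytes")
    codes = []
    for i in range(0, len(packed), 3):
        word = int.from_bytes(packed[i:i + 3], "big")
        codes.extend((word >> shift) & 0x3F for shift in (18, 12, 6, 0))
    return codes


codes = [1, 2, 3, 63, 0, 32, 17, 5]
packed = pack_6bit(codes)
print(len(codes), "codes ->", len(packed), "bytes")
print(unpack_6bit(packed) == codes)
```

In this layout the second code of every group starts at bit 6 of the first byte and ends at bit 3 of the second byte, which is the kind of misalignment that makes naive per-weight loads inefficient.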

FP6-LLM Empirical Advantages

Benchmarks show that FP6-LLM, built on the TC-FPx design, can serve models such as LLaMA-70b on a single GPU, with normalized inference throughput up to 2.65x higher than the FP16 baseline. These gains stem from design choices such as ahead-of-time bit-level pre-packing, which sidesteps the GPU memory access problem, and a SIMT-efficient de-quantization runtime, which reduces the de-quantization overhead during inference.
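To show what de-quantization has to compute for each weight, here is a minimal numeric sketch that decodes a single 6-bit floating-point code into an ordinary float. Several things here are assumptions rather than details taken from the summary above: the bit layout (1 sign bit, 3 exponent bits, 2 mantissa bits), the exponent bias of 3, the absence of infinity and NaN encodings, and the per-tensor `scale` argument. The function names are hypothetical, and a real GPU kernel would do this with bitwise operations on packed registers rather than one value at a time in Python.

```python
EXP_BITS = 3   # assumed exponent width
MAN_BITS = 2   # assumed mantissa width
BIAS = 3       # assumed exponent bias


def fp6_to_float(code: int) -> float:
    """Decode one 6-bit code under the assumed sign/exponent/mantissa layout."""
    if not 0 <= code < 64:
        raise ValueError("code must fit in 6 bits")
    sign = -1.0 if (code >> (EXP_BITS + MAN_BITS)) & 0x1 else 1.0
    exp = (code >> MAN_BITS) & ((1 << EXP_BITS) - 1)
    man = code & ((1 << MAN_BITS) - 1)
    frac = man / (1 << MAN_BITS)
    if exp == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - BIAS.
        return sign * frac * 2.0 ** (1 - BIAS)
    # Normal: implicit leading 1.
    return sign * (1.0 + frac) * 2.0 ** (exp - BIAS)


def dequantize(codes, scale: float):
    """Decode a list of 6-bit codes and apply an assumed per-tensor scale."""
    return [fp6_to_float(c) * scale for c in codes]


print(dequantize([0b001100, 0b011111, 0b111111, 0b000001], scale=0.5))
```

Under these assumptions the representable magnitudes range from 0.0625 (the smallest subnormal) to 28.0, so a scale factor is what maps the small FP6 grid onto the actual range of a weight tensor.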

Conclusion

The paper concludes that FP6-LLM makes LLM inference more efficient through algorithm-system co-design. By adding FP6 support at the GPU kernel level, it opens the door to wider adoption of quantization strategies. FP6-LLM is therefore a promising option for deploying large, computationally demanding LLMs more broadly, making them more practical and accessible.