Methodology

The paper focuses on CompactifAI, a novel method for compressing large language models (LLMs) with quantum-inspired Tensor Networks (TNs). It departs from traditional compression methods such as pruning, distillation, quantization, and low-rank approximation, which typically truncate the number of effective neurons or reduce the numerical precision of the weights.

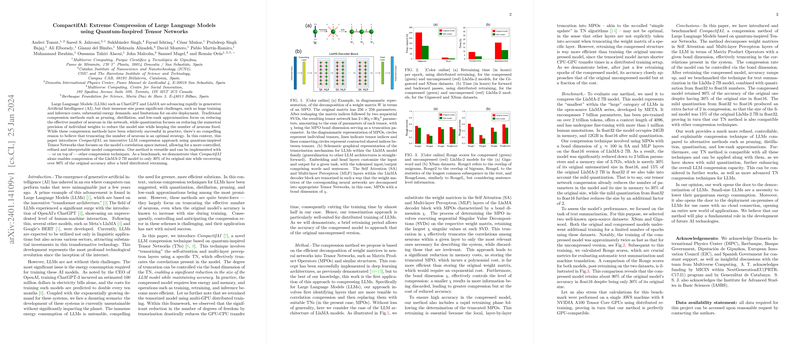

Instead, CompactifAI targets the correlation space within the model, which allows a more refined and controlled compression. It is also versatile: it can be applied alongside existing compression techniques to improve model efficiency further. The authors show that even after heavy compression, the model recovers over 90% of its original accuracy after a brief period of distributed retraining.

Implementation

The authors describe a compression pipeline in which the weight matrices of the network are decomposed into Tensor Networks such as Matrix Product Operators (MPOs). Controlling the bond dimension of the TN sets how much of the correlation within each layer is kept, which makes it possible to truncate the self-attention and multi-layer perceptron (MLP) layers. A key benefit of this method is its efficiency, with significantly lower energy and memory requirements. In the LlaMA models studied, the weight matrices are reshaped and decomposed in this way, which substantially reduces the parameter count. The paper then shows that retraining the tensorized model with distributed training brings the compressed version close to the original accuracy, underlining the method's suitability for LLM fine-tuning.
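To make the role of the bond dimension concrete, here is a minimal NumPy sketch that decomposes a weight matrix into an MPO by a sequence of truncated SVDs (the standard tensor-train construction), keeping at most `chi` singular values at each cut. The function names `matrix_to_mpo` and `mpo_to_matrix`, the factorization of the matrix dimensions into `in_dims`/`out_dims`, and the toy 64×64 matrix are illustrative assumptions; the paper's exact reshaping, choice of layers, and bond dimensions are not reproduced here.

```python
import numpy as np


def matrix_to_mpo(W, in_dims, out_dims, chi):
    """Split W (prod(out_dims) x prod(in_dims)) into MPO cores via truncated SVDs.

    Core k has shape (left_bond, out_dims[k], in_dims[k], right_bond);
    every internal bond is capped at chi (hypothetical helper, for illustration).
    """
    n = len(in_dims)
    assert W.shape == (int(np.prod(out_dims)), int(np.prod(in_dims)))
    # Reshape to (o1..on, i1..in), then interleave axes to (o1, i1, o2, i2, ...).
    T = W.reshape(*out_dims, *in_dims)
    T = T.transpose([ax for k in range(n) for ax in (k, n + k)])
    cores = []
    left = 1
    rest = T.reshape(left * out_dims[0] * in_dims[0], -1)
    for k in range(n - 1):
        U, S, Vt = np.linalg.svd(rest, full_matrices=False)
        r = min(chi, S.size)  # truncate the bond: keep the r largest singular values
        cores.append(U[:, :r].reshape(left, out_dims[k], in_dims[k], r))
        rest = S[:r, None] * Vt[:r]  # carry the remainder to the next site
        left = r
        rest = rest.reshape(left * out_dims[k + 1] * in_dims[k + 1], -1)
    cores.append(rest.reshape(left, out_dims[-1], in_dims[-1], 1))
    return cores


def mpo_to_matrix(cores):
    """Contract the MPO cores back into a dense matrix."""
    T = cores[0]
    for c in cores[1:]:
        T = np.tensordot(T, c, axes=([-1], [0]))  # contract the shared bond
    T = T[0, ..., 0]  # drop the trivial outer bonds of size 1
    n = len(cores)
    T = T.transpose(list(range(0, 2 * n, 2)) + list(range(1, 2 * n, 2)))
    out_dim = int(np.prod([c.shape[1] for c in cores]))
    in_dim = int(np.prod([c.shape[2] for c in cores]))
    return T.reshape(out_dim, in_dim)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.standard_normal((64, 64))  # toy stand-in for a layer's weight matrix
    for chi in (2, 4, 8, 16):
        cores = matrix_to_mpo(W, in_dims=(4, 4, 4), out_dims=(4, 4, 4), chi=chi)
        W_hat = mpo_to_matrix(cores)
        n_params = sum(c.size for c in cores)
        rel_err = np.linalg.norm(W - W_hat) / np.linalg.norm(W)
        print(f"chi={chi:2d}  params={n_params:5d} / {W.size}  rel_err={rel_err:.3f}")
```

The parameter count grows from 128 at `chi=2` to 4608 at `chi=16`. At `chi=16` the decomposition is exact but larger than the dense 4096-entry matrix, which shows why the bond dimension must be truncated to obtain any compression. A random Gaussian matrix has no correlation structure to exploit, so the errors printed here are only illustrative.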

Results

The CompactifAI methodology was benchmarked on the LlaMA-2 7B model, part of Meta's LlaMA series. The authors first used quantization from float32 to float16 to halve the memory requirement, and then applied Tensor Network compression to reduce the model to 30% of its float16 size. After additional retraining on text summarization tasks using the XSum and Gigaword datasets, the compressed model achieved nearly 90% of the accuracy of the original model.
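The combined effect of the two steps can be checked with back-of-the-envelope arithmetic. This sketch assumes a nominal 7 × 10^9 parameters (the exact parameter count of LlaMA-2 7B differs slightly), counts only weight storage (no activations, optimizer state, or runtime overhead), and treats the 30% figure as applying to the whole float16 model. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 7e9  # nominal "7B"; assumption, the real count is slightly lower

fp32 = weight_memory_gb(N_PARAMS, 4)  # float32: 4 bytes per weight
fp16 = weight_memory_gb(N_PARAMS, 2)  # float16: 2 bytes per weight (halved)
compressed = 0.30 * fp16              # TN compression to 30% of the float16 size

print(f"float32: {fp32:.1f} GB")       # float32: 28.0 GB
print(f"float16: {fp16:.1f} GB")       # float16: 14.0 GB
print(f"compressed: {compressed:.1f} GB ({compressed / fp32:.0%} of float32)")
# compressed: 4.2 GB (15% of float32)
```

Under these assumptions, quantization followed by TN compression leaves roughly 15% of the original float32 weight memory.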

Conclusions & Prospects

CompactifAI represents a significant step toward energy-efficient and more accessible LLMs. It allows large reductions in model size with minimal accuracy loss, offering a more refined alternative to existing compression techniques. This work could pave the way for on-premises deployment of LLMs, extending their use to settings without cloud connectivity. Its compatibility with other compression methods further strengthens the case for CompactifAI as a versatile and powerful tool in AI development, potentially helping to democratize AI technologies and reduce their environmental footprint.