Introduction

The paper introduces a training-free text-to-image generation and editing framework called Recaption, Plan and Generate (RPG), which uses the chain-of-thought (CoT) reasoning ability of multimodal large language models (MLLMs) to improve the compositionality of diffusion models. RPG employs an MLLM as a global planner that decomposes a complex image-generation task into simpler sub-tasks, each assigned to a distinct subregion of the image. It introduces complementary regional diffusion to enable region-wise compositional generation, and it offers a unified, closed-loop approach that handles both image generation and image editing. Experiments show that RPG outperforms state-of-the-art models such as DALL-E 3 and SDXL, particularly on complex prompts that involve multi-category object composition and demand close text-image semantic alignment.

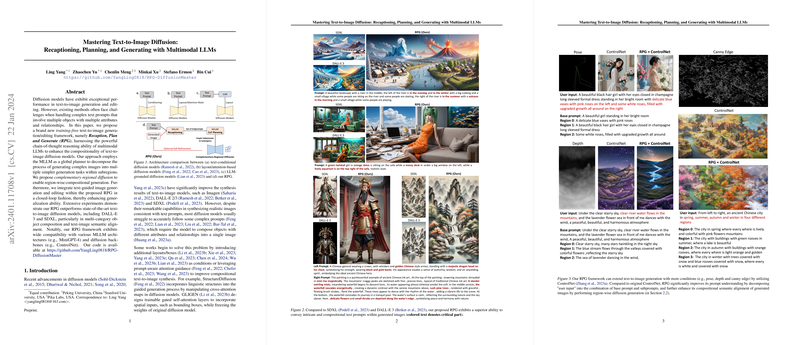

Methodology Overview

The RPG framework requires no additional training and follows a three-step strategy: Multimodal Recaptioning, Chain-of-Thought Planning, and Complementary Regional Diffusion. In the recaptioning step, the MLLM rewrites the text prompt into highly descriptive subprompts, which supply finer detail and improve semantic alignment during diffusion. In the planning step, the MLLM reasons step by step to partition the image into complementary subregions and assigns each subprompt to one of them, turning a single complex generation task into a set of simpler ones. Complementary Regional Diffusion then generates content region by region and merges the regions spatially, which sidesteps the content conflicts that arise when image components overlap.
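The spatial-merging step of Complementary Regional Diffusion can be sketched roughly as below. This is a minimal NumPy illustration of one simplified reading, not the paper's implementation, and it rests on several assumptions: the layout is a hypothetical list of `(row_weight, [column_weights])` pairs standing in for the MLLM's regional plan, each subprompt yields a full-size latent prediction that is cropped to its own box, and the stitched result is blended with a base-prompt prediction through a hypothetical `base_ratio` weight to soften region boundaries. The helper names `split_sizes`, `region_boxes`, and `complementary_merge` are illustrative only.

```python
import numpy as np


def split_sizes(total, weights):
    """Divide `total` cells into integer spans proportional to `weights`."""
    w = np.asarray(weights, dtype=float)
    edges = np.round(np.cumsum(w) / w.sum() * total).astype(int)
    return np.diff(np.concatenate(([0], edges))).tolist()


def region_boxes(height, width, layout):
    """Map a hypothetical layout [(row_weight, [col_weights]), ...] to
    (top, bottom, left, right) boxes in row-major order."""
    row_heights = split_sizes(height, [r for r, _ in layout])
    boxes, top = [], 0
    for h, (_, cols) in zip(row_heights, layout):
        left = 0
        for w in split_sizes(width, cols):
            boxes.append((top, top + h, left, left + w))
            left += w
        top += h
    return boxes


def complementary_merge(base, regional, layout, base_ratio=0.3):
    """Crop each subprompt's prediction to its box, stitch the crops into
    one canvas, then blend with the base-prompt prediction (assumed scheme)."""
    _, height, width = base.shape
    boxes = region_boxes(height, width, layout)
    assert len(boxes) == len(regional), "need one prediction per subregion"
    stitched = np.empty_like(base)
    for (t, b, l, r), pred in zip(boxes, regional):
        stitched[:, t:b, l:r] = pred[:, t:b, l:r]
    return base_ratio * base + (1.0 - base_ratio) * stitched


# Usage: one row split into left and right halves, 4-channel 8x8 latents.
layout = [(1, [1, 1])]
base = np.zeros((4, 8, 8))
left, right = np.ones((4, 8, 8)), np.full((4, 8, 8), 2.0)
out = complementary_merge(base, [left, right], layout, base_ratio=0.5)
print(out[0, 0, 0], out[0, 0, 7])  # 0.5 1.0
```

In this sketch, pushing `base_ratio` toward 1 favors global coherence from the base prompt, while values near 0 keep each region more faithful to its own subprompt.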

Compositional Generation and Editing

The RPG framework handles both generation and editing. For editing, it uses the MLLM to provide feedback that identifies semantic discrepancies between a generated image and the target prompt, applies CoT planning to turn that feedback into concrete editing instructions, and uses contour-based regional diffusion to modify only the affected regions. Because each edited result can be fed back to the MLLM, the framework runs as a closed loop that refines the image iteratively, with each round informed by the feedback from earlier rounds.
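The closed-loop editing procedure can be outlined as a simple control loop. In this hedged sketch, `generate`, `critique`, `plan`, and `edit` are hypothetical callables standing in for the diffusion backbone, the MLLM's feedback step, CoT edit planning, and contour-based regional editing respectively; the `Feedback` structure and the `max_rounds` stopping limit are assumptions rather than details from the paper. The toy stand-ins treat an "image" as a set of object names purely so the loop can run end to end.

```python
from dataclasses import dataclass, field


@dataclass
class Feedback:
    """MLLM verdict on one image (hypothetical structure)."""
    aligned: bool
    discrepancies: list = field(default_factory=list)


def closed_loop_refine(prompt, generate, critique, plan, edit, max_rounds=3):
    """Generate once, then repeat critique -> plan -> edit until the
    critique reports alignment or the round budget is used up."""
    image = generate(prompt)
    history = []  # feedback from every round, visible to the planner
    for _ in range(max_rounds):
        fb = critique(image, prompt)
        history.append(fb)
        if fb.aligned:
            break
        for instruction in plan(fb, history):
            image = edit(image, instruction)
    return image, history


# Toy stand-ins: an "image" is just a set of object names.
def toy_generate(prompt):
    return {"red apple"}  # first pass misses some requested objects


def toy_critique(image, prompt):
    missing = sorted(prompt - image)
    return Feedback(aligned=not missing, discrepancies=missing)


def toy_plan(feedback, history):
    return [("add", obj) for obj in feedback.discrepancies]


def toy_edit(image, instruction):
    op, obj = instruction
    return image | {obj} if op == "add" else image


target = {"red apple", "blue cup", "cat"}
image, history = closed_loop_refine(target, toy_generate, toy_critique, toy_plan, toy_edit)
print(sorted(image), len(history))  # ['blue cup', 'cat', 'red apple'] 2
```

Passing the full `history` to the planner mirrors the idea that later rounds can take earlier feedback into account, although the toy planner here only looks at the latest verdict.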

Experiments and Findings

RPG is evaluated extensively, and the paper's qualitative comparisons show it aligning generated images with complex text prompts more faithfully than competing methods. Its performance is assessed on several benchmarks, including T2I-CompBench. RPG also adapts to different MLLM architectures and diffusion backbones, which demonstrates its flexibility and potential for broad application. In image-editing comparisons, RPG outperforms other state-of-the-art methods, producing edits that are more precise and better aligned with the target semantics. Iterative refinement further improves this alignment.

Conclusion and Outlook

The RPG framework sets a new standard for complex, compositional text-to-image tasks by using the reasoning capabilities of MLLMs to plan image compositions for diffusion models. It is training-free, versatile, and compatible with a range of MLLM architectures and diffusion backbones. Future work aims to extend RPG to more complex modalities and to a broader range of practical scenarios, strengthening the role of text-to-image generation in creative and design applications.