Introduction

Large language models (LLMs) represent a significant advance in artificial intelligence, and their text-generation capabilities are now applied across many sectors. Yet aligning their response styles with human expectations remains a challenge. Alignment conventionally depends on laborious human annotation, which is hard to scale and gives limited control over the direction of the resulting behaviour. Pretraining and instruction tuning establish the foundational capabilities for text generation, but they often fall short at the alignment stage. As a result, an additional training pass on human preference data is typically needed to refine context-specific outputs.

Background and Related Work

Reinforcement learning from human feedback (RLHF), in which the LLM acts as a policy model that generates content, has been a focal point of alignment research. Despite its success, RLHF suffers from training instability and high memory demands, which motivated the development of Direct Preference Optimization (DPO). DPO removes the need for an explicit reward model and instead optimizes the LLM directly on preference pairs with a maximum-likelihood objective. It therefore offers a simpler alternative to RLHF that reduces complexity while retaining alignment performance. Related work includes reinforcement learning from AI feedback (RLAIF), which reduces dependence on human feedback by using existing LLMs to provide preference labels, and Constitutional AI, which emphasizes AI self-improvement guided by a set of principles.
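To make the DPO objective concrete, here is a minimal sketch of the standard per-pair DPO loss. It assumes that sequence log-probabilities (token log-probabilities summed over each response) have already been computed under the trainable policy and under a frozen reference model. The function names are our own, and `beta`, which limits how far the policy may drift from the reference, is set to 0.1 purely as an illustrative default.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative default, not taken from the source
) -> float:
    """DPO loss for one preference pair: -log sigmoid(beta * margin).

    Each argument is a summed sequence log-probability of the chosen
    (preferred) or rejected response under the policy or reference model.
    No explicit reward model is needed: the implicit reward is the
    policy/reference log-ratio.
    """
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    return -log_sigmoid(margin)


# Policy identical to the reference: margin is 0, so the loss is log 2.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))  # ≈ 0.6931
# Policy now favours the chosen response more than the reference does.
print(dpo_loss(-9.0, -12.0, -10.0, -12.0))
```

Minimizing this loss raises the policy's probability of the preferred response relative to the rejected one, measured against the reference model. In practice the loss is averaged over a batch of pairs and backpropagated through the policy's log-probabilities.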

Method

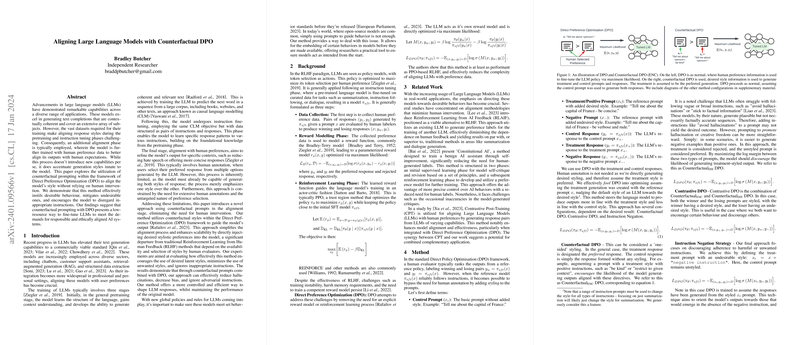

The core contribution of this research is to combine counterfactual prompting with the DPO framework in order to steer the style of LLM outputs. Controlled prompts elicit responses in both desired and undesired styles, so preference data can be built without explicit human annotation. Two prompt types are central to this strategy: a Control Prompt, which is the plain, unstyled instruction, and a Treatment Prompt, which adds the desired styling. To align models with human preferences without human supervision, four training configurations were used: Counterfactual DPO ENC, Counterfactual DPO DIS, Contrastive DPO, and Instruction Negation. Together, these configurations tuned models toward preferred latent styles, discouraged unwanted styles, and trained models to ignore inappropriate instructions.
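The sketch below shows one way the four configurations could turn Control and Treatment Prompts into DPO preference pairs. It rests on assumptions that should be checked against the original method. ENC is read as "encourage" and DIS as "discourage". A style instruction is simply appended to the control prompt to form the treatment prompt. Training uses the control prompt as input, so the model learns the style without being asked for it. The `generate` callable stands in for sampling from the model before alignment. All function names here are hypothetical.

```python
from collections.abc import Callable
from dataclasses import dataclass

# Hypothetical stand-in for sampling a response from the model being aligned.
Generate = Callable[[str], str]


@dataclass(frozen=True)
class PreferencePair:
    prompt: str    # input the model is fine-tuned on
    chosen: str    # preferred response
    rejected: str  # dispreferred response


def treat(control: str, style: str) -> str:
    """Assumed treatment prompt: control prompt plus a style instruction."""
    return f"{control}\n{style}"


def counterfactual_enc(control: str, desired: str, generate: Generate) -> PreferencePair:
    # Encourage a style: prefer the styled response, but train on the plain prompt.
    return PreferencePair(control, generate(treat(control, desired)), generate(control))


def counterfactual_dis(control: str, undesired: str, generate: Generate) -> PreferencePair:
    # Discourage a style: prefer the plain response over the undesired-style one.
    return PreferencePair(control, generate(control), generate(treat(control, undesired)))


def contrastive(control: str, desired: str, undesired: str, generate: Generate) -> PreferencePair:
    # Combine both: desired-style response is chosen, undesired-style is rejected.
    return PreferencePair(
        control,
        generate(treat(control, desired)),
        generate(treat(control, undesired)),
    )


def instruction_negation(control: str, unwanted: str, generate: Generate) -> PreferencePair:
    # Ignore an instruction: the prompt contains it, but the preferred response
    # is the one produced without it.
    treated = treat(control, unwanted)
    return PreferencePair(treated, generate(control), generate(treated))


def echo_generate(prompt: str) -> str:
    # Toy generator so the pairs can be inspected without a real model.
    return f"[response to: {prompt}]"


pair = counterfactual_enc("Describe the water cycle.", "Respond in a formal tone.", echo_generate)
print(pair.prompt)
print(pair.chosen)
print(pair.rejected)
```

Each resulting `PreferencePair` can be scored with a DPO loss as in standard preference tuning. Because the styled responses come from the model itself, no human labels are needed. The prompt-engineering choices in `treat` are the main thing a real implementation would tune.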

Experiments and Discussion

Experiments on the Mistral-7B-Instruct-v0.2 model demonstrated the effectiveness of the counterfactual and contrastive DPO methods. Contrasting desired and undesired prompting showed that these methods can reduce biases and hallucinations in model outputs and can also teach models to ignore certain instructions, adding a layer of safety and ethical compliance. Contrastive DPO, which combines the two Counterfactual DPO methods, was particularly noteworthy: it proved robust across the range of settings tested.

Beyond presenting a new alignment technique, this research raises further questions about its scalability, its adaptability across contexts, and the iterative integration of multiple styles. These methods offer a path toward aligning LLMs with ethical standards before they are widely deployed, reflecting the close relationship between advances in AI and human-centric values.