Evaluating Multimodal LLMs on Image Aesthetics Perception: The AesBench Framework

The paper "AesBench: An Expert Benchmark for Multimodal LLMs on Image Aesthetics Perception" introduces a structured approach to evaluating how well multimodal LLMs (MLLMs) perceive image aesthetics. Because the performance of MLLMs such as ChatGPT and LLaVA on aesthetic perception tasks has remained unclear, the authors propose the AesBench benchmark to fill this evaluation gap.

Expert-Labeled Aesthetics Perception Database (EAPD)

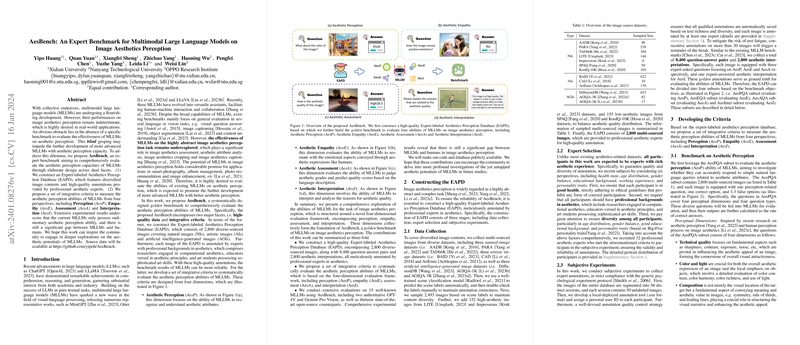

At the core of AesBench is the Expert-labeled Aesthetics Perception Database (EAPD), a collection of 2,800 images spanning three categories: natural images (NIs), artistic images (AIs), and AI-generated images (AGIs). The images are annotated by professionals, including computational aesthetics researchers and art students, to provide high-quality ground truth for evaluating MLLMs.

Evaluation Framework

AesBench assesses MLLMs through a four-dimensional framework:

- Aesthetic Perception (AesP): This dimension evaluates the ability of models to recognize and comprehend various aesthetic attributes across images. The paper introduces an AesPQA subset with a focus on technical quality, color and light, composition, and content.

- Aesthetic Empathy (AesE): The AesE dimension measures an MLLM's ability to resonate with the emotional essence conveyed through images, focusing on emotion, interest, uniqueness, and vibe.

- Aesthetic Assessment (AesA): This dimension asks models to assign aesthetic ratings to images by categorizing them as having high, medium, or low visual appeal.

- Aesthetic Interpretation (AesI): This dimension evaluates how effectively MLLMs can articulate reasons for the aesthetic quality of images, requiring nuanced language generation capabilities.
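To make the scoring of the dimensions with discrete answers (AesP, AesE, and AesA) more concrete, the following is a minimal sketch of per-dimension accuracy. It assumes each question has a single expert-labeled correct option and that the model's reply has already been reduced to one option string. The `ChoiceItem` class, its field names, and the sample items are hypothetical illustrations, not the benchmark's actual data schema or results.

```python
from dataclasses import dataclass


@dataclass
class ChoiceItem:
    """One benchmark question (hypothetical schema, not the official format)."""
    dimension: str   # "AesP", "AesE", or "AesA"
    source: str      # "NI", "AI", or "AGI"
    answer: str      # expert-labeled correct option
    prediction: str  # option extracted from the model's reply


def accuracy_by_dimension(items):
    """Return {dimension: fraction of items answered correctly}."""
    totals, correct = {}, {}
    for item in items:
        totals[item.dimension] = totals.get(item.dimension, 0) + 1
        # Compare case-insensitively and ignore surrounding whitespace.
        if item.prediction.strip().lower() == item.answer.strip().lower():
            correct[item.dimension] = correct.get(item.dimension, 0) + 1
    return {dim: correct.get(dim, 0) / n for dim, n in totals.items()}


# Made-up items for illustration only.
items = [
    ChoiceItem("AesP", "NI", "B", "B"),
    ChoiceItem("AesP", "AI", "A", "C"),
    ChoiceItem("AesE", "AGI", "D", "d"),
    ChoiceItem("AesA", "NI", "high", "medium"),
    ChoiceItem("AesA", "AI", "low", "low"),
]

print(accuracy_by_dimension(items))
# {'AesP': 0.5, 'AesE': 1.0, 'AesA': 0.5}
```

AesI is left out of this sketch on purpose: it requires judging free-form generated text, which exact-match accuracy cannot capture.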

Experimental Insights and Implications

The researchers evaluated 15 MLLMs, including well-known models such as GPT-4V and Gemini Pro Vision. The results show significant variability in performance: Q-Instruct and GPT-4V are the top performers across several tasks, yet even their accuracy falls short of human-level performance. This underscores the substantial gap between current MLLM capabilities and human-like aesthetic perception.

Particularly notable is the varied performance of MLLMs across image sources, with models generally performing better on natural images than on artistic or AI-generated images. Additionally, precision in aesthetic interpretation emerged as a critical challenge, suggesting that hallucination is prevalent when MLLMs generate language-based aesthetic analyses.
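A per-source breakdown like the one described above can be computed by grouping graded answers by image category. The sketch below assumes each graded answer is available as a `(source, is_correct)` pair; the function names and the toy records are hypothetical and do not reproduce any numbers from the paper.

```python
from collections import defaultdict


def accuracy_by_source(records):
    """Group (source, is_correct) pairs and return {source: accuracy}."""
    counts = defaultdict(lambda: [0, 0])  # source -> [num_correct, num_total]
    for source, is_correct in records:
        counts[source][0] += int(is_correct)
        counts[source][1] += 1
    return {src: hit / total for src, (hit, total) in counts.items()}


def largest_gap(accuracies):
    """Return (best_source, worst_source, accuracy difference)."""
    best = max(accuracies, key=accuracies.get)
    worst = min(accuracies, key=accuracies.get)
    return best, worst, accuracies[best] - accuracies[worst]


# Made-up graded answers for illustration only.
toy_records = [
    ("NI", True), ("NI", True), ("NI", False), ("NI", True),
    ("AI", True), ("AI", False),
    ("AGI", False), ("AGI", True), ("AGI", False), ("AGI", False),
]

per_source = accuracy_by_source(toy_records)
print(per_source)
print(largest_gap(per_source))
```

Reporting the gap between the best and worst source alongside overall accuracy makes it easier to see whether a model's aggregate score hides weak performance on artistic or AI-generated images.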

Future Directions

The insights from AesBench suggest that future MLLMs could greatly benefit from developing more robust mechanisms to understand and assess aesthetic attributes. The implications of such advancements are significant for real-world applications like smart photography, image enhancement, and personalized content curation, all of which require sophisticated aesthetic perception abilities.

Overall, AesBench is an important step towards systematically evaluating and improving the aesthetic perception capabilities of MLLMs. As these models evolve, further refinement of benchmarks like AesBench will be essential to guide research and application development in image aesthetics perception.