Introduction to Pragmatics in LLMs

The field of natural language processing (NLP) has been transformed by large language models (LLMs) that perform a wide range of language tasks with increasing competence. A key part of language understanding is pragmatics: interpreting language in light of context, speaker intentions, presuppositions, and implied meaning. While LLMs handle semantics well, their grasp of pragmatics has received far less study. A recent research effort addresses this gap with a new benchmark, the Pragmatics Understanding Benchmark (PUB).

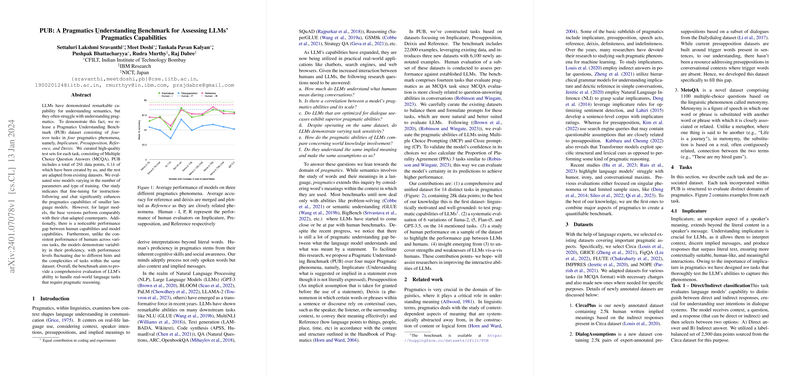

Evaluating LLMs with PUB

PUB consists of 28,000 data points curated for 14 tasks spanning four pragmatic phenomena: implicature, presupposition, reference, and deixis. The tasks are framed as multiple-choice question answering (MCQA) and are designed to simulate real-world language use. The paper evaluates a wide range of models, including base and chat-adapted versions that vary in size and training approach. Its results show that fine-tuning smaller models for instruction following and chat improves their pragmatic understanding.
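To make the MCQA framing concrete, here is a minimal Python sketch of how such an evaluation might be wired up. Everything in it is a hypothetical illustration: the record layout (`McqaItem` and its fields), the prompt template, and the letter-parsing heuristic are not PUB's released format or the paper's evaluation code. Accuracy is simply grouped by phenomenon or by task.

```python
from __future__ import annotations

import re
from collections import defaultdict
from dataclasses import dataclass

# Option labels; items with more than len(LETTERS) choices are not supported here.
LETTERS = "ABCDEFGH"


@dataclass
class McqaItem:
    # Hypothetical record layout for illustration; PUB's actual data format may differ.
    phenomenon: str     # e.g. "implicature", "presupposition", "reference", "deixis"
    task: str           # hypothetical task identifier
    context: str        # dialogue or passage the question is about
    question: str
    options: list[str]  # answer choices, labelled A, B, C, ... in order
    answer: int         # index of the correct option


def format_prompt(item: McqaItem) -> str:
    """Render one item as a plain-text multiple-choice prompt (illustrative template)."""
    lines = [item.context, "", item.question]
    for letter, option in zip(LETTERS, item.options):
        lines.append(f"{letter}. {option}")
    lines.append("Answer with the letter of the correct option.")
    return "\n".join(lines)


def parse_choice(reply: str, n_options: int) -> int | None:
    """Heuristic: return the index of the first standalone capital option letter, else None."""
    valid = LETTERS[:n_options]
    match = re.search(rf"\b([{valid}])\b", reply)
    return valid.index(match.group(1)) if match else None


def accuracy_by(
    items: list[McqaItem], predictions: list[int | None], key: str = "phenomenon"
) -> dict[str, float]:
    """Accuracy grouped by an item attribute ("phenomenon" or "task"); None counts as wrong."""
    if len(items) != len(predictions):
        raise ValueError("items and predictions must have the same length")
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for item, pred in zip(items, predictions):
        group = getattr(item, key)
        total[group] += 1
        correct[group] += int(pred == item.answer)
    return {group: correct[group] / total[group] for group in total}
```

Counting unparseable replies as wrong is one defensible choice; an evaluation could instead score each option by model likelihood, which avoids parsing free-form text altogether.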

Interpretation of Pragmatic Phenomena

The benchmark covers distinguishing direct from indirect responses, classifying responses, recovering implicatures in dialogue, and several other tasks, including understanding figurative language, detecting sarcasm, and detecting agreement. The paper finds that instruction-tuned and chat-optimized LLMs show stronger pragmatic capabilities than their base counterparts. The advantage is less consistent at scale, however: some large base models perform comparably to their chat-adapted equivalents.

Insights and Future Directions

Despite this progress, LLMs have yet to reach human-level pragmatic understanding. Human performance is consistent across tasks, whereas model proficiency varies from task to task, leaving clear room for improvement. One clear takeaway is that LLMs need stronger context-based understanding to produce more nuanced, human-like interactions. PUB documents specific gaps in LLMs' pragmatic competence and is expected to steer further research toward refining their interactive abilities and moving closer to genuine conversational understanding.
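The contrast between consistent human performance and uneven model performance can be made measurable by summarizing each system's per-task accuracies. The helper below is a hypothetical sketch (`consistency_summary` does not come from the paper), and the choice of mean, population standard deviation, and best-to-worst range is an assumption rather than PUB's reported metric. It takes a mapping from task name to accuracy.

```python
from __future__ import annotations

from statistics import mean, pstdev


def consistency_summary(per_task_accuracy: dict[str, float]) -> dict[str, float]:
    """Summarize how evenly a system performs across tasks.

    Returns the mean accuracy, the population standard deviation, and the
    gap between the best and worst task. Lower spread and range indicate
    more uniform performance across tasks.
    """
    scores = list(per_task_accuracy.values())
    if not scores:
        raise ValueError("need at least one task score")
    return {
        "mean": mean(scores),
        "stdev": pstdev(scores),
        "range": max(scores) - min(scores),
    }
```

Comparing these summaries for human annotators and for a model would show the pattern described above as a smaller standard deviation and range on the human side.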