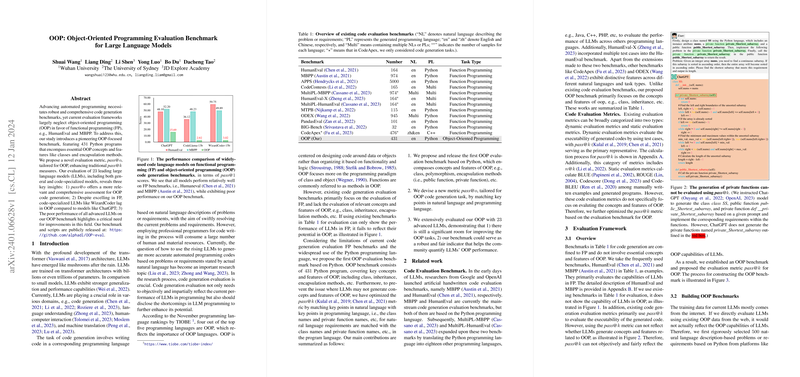

Overview of the Object-Oriented Programming Benchmark

Large language models (LLMs) have performed strongly across many fields, notably automated code generation. However, evaluation of these models has centered predominantly on functional programming tasks, leaving a significant gap in assessing their capabilities in object-oriented programming (OOP). To address this gap, a new paper introduces an OOP benchmark for Python that gauges LLM performance on class-based design, encapsulation, and other core OOP concepts.

Evolving Assessment Metrics

Traditional metrics such as pass@k are limited for OOP evaluation: because they measure only whether generated code passes its tests, they can overlook whether the model actually implemented the requested OOP concepts. To address this, the paper proposes a new metric, pass@o, which compares the key concepts expressed in the model's output with those specified in the benchmark, giving a more rigorous and targeted assessment.
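To make the difference between the two metrics concrete, the sketch below pairs the standard unbiased pass@k estimator with a simple concept check. It is an illustration under stated assumptions, not the paper's implementation: the helper `defines_concepts`, the `required` mapping, and the idea of matching concepts by class and method name are all hypothetical, and the pass@o-style score here simply reuses the pass@k formula while counting only samples that both pass their tests and define the required concepts.

```python
import ast
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate: 1 - C(n - c, k) / C(n, k).

    n = samples generated, c = samples counted as correct, k = budget.
    """
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def defines_concepts(code: str, required: dict[str, set[str]]) -> bool:
    """Hypothetical concept check: does `code` define each required class
    together with each of its required method names?"""
    try:
        tree = ast.parse(code)
    except SyntaxError:
        return False
    found: dict[str, set[str]] = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.ClassDef):
            found[node.name] = {
                item.name
                for item in node.body
                if isinstance(item, (ast.FunctionDef, ast.AsyncFunctionDef))
            }
    return all(
        cls in found and methods <= found[cls]
        for cls, methods in required.items()
    )


# Toy data (assumed): each sample is (generated code, passed unit tests?).
samples = [
    ("class Counter:\n    def increment(self):\n        pass\n", True),
    ("def increment(x):\n    return x + 1\n", True),  # passes tests, no class
    ("class Counter:\n    def add(self):\n        pass\n", False),
]
required = {"Counter": {"increment"}}

n = len(samples)
c = sum(passed for _, passed in samples)  # test-passing samples
o = sum(passed and defines_concepts(code, required) for code, passed in samples)

score_k = pass_at_k(n, c, 1)  # counts the function-only solution as correct
score_o = pass_at_k(n, o, 1)  # assumed pass@o-style variant: stricter count
```

In this toy data the second sample passes its tests without defining any class, so it raises the pass@k-style score but not the concept-aware one. That is the kind of gap the stricter metric is meant to expose.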

Insights from LLM Evaluations

An extensive evaluation of leading LLMs using the new OOP benchmark and the pass@o metric yielded three primary findings. First, while some LLMs excelled at functional programming, their performance lagged on complex OOP tasks. Second, models built specifically for code generation, such as WizardCoder, did not outperform more general models like ChatGPT on OOP tasks. Third, performance was lackluster across all models, indicating substantial room for improvement in LLMs' understanding and execution of OOP principles.

Characteristics of the OOP Benchmark

The benchmark contains 431 Python programs that exercise a range of OOP principles. The authors selected and adapted these programs to provide a challenging yet fair evaluation for LLMs. The benchmark spans several difficulty levels: simple tasks test knowledge of classes and public methods, while higher tiers progressively introduce advanced concepts such as inheritance and polymorphism.
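As a rough illustration of how the difficulty tiers differ, the sketch below shows the kind of code a simple task and a harder task might call for. These classes are invented for this example and are not taken from the benchmark; the names `Account`, `Shape`, `Rectangle`, `Square`, and `total_area` are all hypothetical.

```python
class Account:
    """Simple tier (illustrative): one class with public methods."""

    def __init__(self, balance: float = 0.0) -> None:
        self.balance = balance

    def deposit(self, amount: float) -> float:
        self.balance += amount
        return self.balance


class Shape:
    """Harder tier (illustrative): a base class meant to be overridden."""

    def area(self) -> float:
        raise NotImplementedError


class Rectangle(Shape):
    """Inheritance: Rectangle specializes Shape and overrides area()."""

    def __init__(self, width: float, height: float) -> None:
        self.width = width
        self.height = height

    def area(self) -> float:
        return self.width * self.height


class Square(Rectangle):
    """A second level of inheritance that reuses Rectangle's behavior."""

    def __init__(self, side: float) -> None:
        super().__init__(side, side)


def total_area(shapes: list[Shape]) -> float:
    """Polymorphism: each object supplies its own area() implementation."""
    return sum(shape.area() for shape in shapes)
```

A functionally equivalent solution could compute the same areas with plain functions and no classes at all, which is why a test-only metric cannot tell whether inheritance or polymorphism was actually used.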

Implications and Future Directions

The research exposes a gap in the capabilities of current LLMs: relative mastery of functional programming alongside underdeveloped handling of object-oriented constructs. This finding motivates refining these models, with particular emphasis on strengthening OOP during LLM training. The paper also opens the way for further work on benchmark design, both for other programming paradigms and for additional programming languages.