Introduction to Multimodal LLMs

The domain of artificial intelligence has seen a noteworthy leap forward with the integration of visual data into LLMs, leading to the creation of Multimodal LLMs (MLLMs). These advanced systems have demonstrated their efficacy in understanding images, answering visual questions, and following instructions that involve visual content. Among the latest innovative models is GPT-4V(ision) which has established a new benchmark in the field.

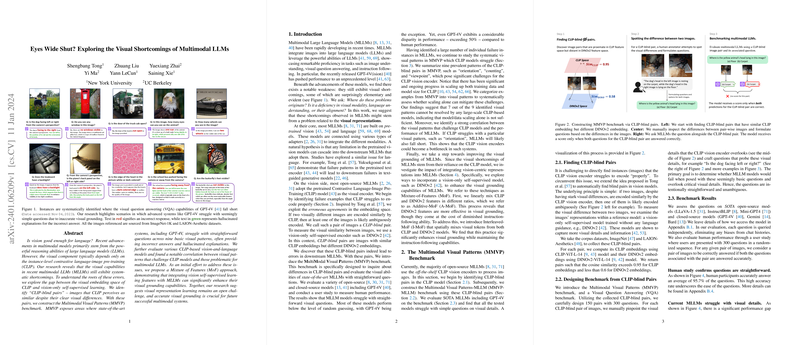

Exploring Visual Limitations

Despite these strides, a critical examination suggests that MLLMs are still hindered by essential visual understanding issues. This paper explores the possibility that these challenges may originate from the visual representations learned during the training process, utilizing a methodology based on Contrastive Language-Image Pre-Training (CLIP) vision encoders. The paper purposefully identifies pairs of visually distinct images that the CLIP model erroneously perceives as similar. Termed as "CLIP-blind pairs", they serve as a benchmark called the Multimodal Visual Patterns (MMVP) to evaluate the visual aptitude of MLLMs. When examined against this benchmark, it was found that the models, including GPT-4V, often struggled with basic visual queries, suggesting that visual representation learning models might not yet be sufficiently developed for robust multimodal understanding.

Visual Patterns Challenging MLLMs

Further investigation identified nine specific visual patterns that persisted in tripping up CLIP-based MLLMs, such as orientation, counting, and viewpoint. Observing that not all limitations were resolved by scaling up models, the research brought to light a significant correlation: difficulties faced by the CLIP model in recognizing certain visual patterns were often reflected in the overall performance of the MLLMs. This correlation hints that constraints inherent to the design of the vision components in these models might be limiting their capabilities.

Improving Visual Grounding in MLLMs

The paper didn’t stop at merely identifying the deficiencies; it also proposed a path towards enhancement. A novel approach named Mixture-of-Features (MoF) indicates a way to improve the visual grounding of MLLMs. By incorporating features from vision-only self-supervised learning models into the CLIP features, it is plausible to strengthen the visual representation without negatively impacting the models' language instruction-following prowess.

Conclusion and Future Directions

This paper shines a light on a pertinent issue in today's advanced MLLMs: their visual understanding is not as profound as might be assumed. Even models celebrated for their complexity and range, such as GPT-4V, are prone to making elementary mistakes when decoding visual information. This research suggests that an effective way forward may include improved or alternative visual representation methods, in conjunction with more diversified evaluation benchmarks. By addressing these visual shortcomings and laying the groundwork for future innovations, the advancement of MLLMs can continue in a direction that is truly representative of both the literal and figurative 'big picture.'