Introduction

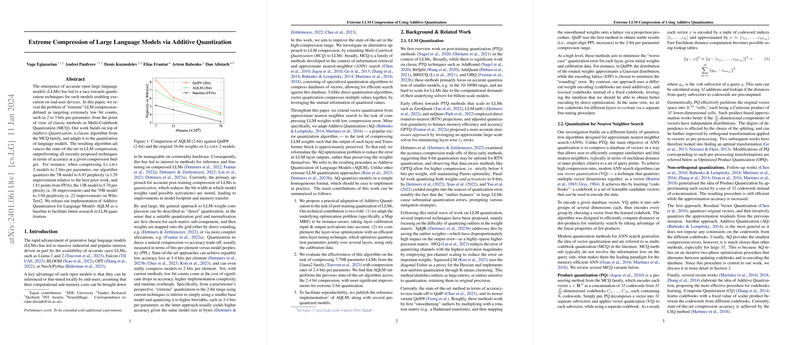

Large language models (LLMs) have advanced significantly, attracting industrial and popular interest because of their accuracy and the potential to run locally on user devices. Compressing these models is vital for deploying them on hardware with limited compute and memory. Quantization, the primary approach to post-training compression, reduces the bit-width of model parameters and thereby shrinks the memory footprint and improves computational efficiency. However, pushing compression further introduces a trade-off: extreme quantization leads to accuracy loss. This paper presents a novel approach to LLM compression based on Additive Quantization (AQ) that advances the state of the art in preserving accuracy under tight compression budgets.
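To make the link between bit-width and memory footprint concrete, the short sketch below estimates weight storage at different bit-widths. The 7-billion-parameter count and the function name `weight_memory_gb` are illustrative assumptions, and the estimate ignores activations, caches, and any quantization metadata such as codebooks or scales.

```python
def weight_memory_gb(num_params: int, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Counts only the bits used to store the weights; real formats add
    some overhead that is not modeled here.
    """
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical model size, chosen only for illustration.
NUM_PARAMS = 7_000_000_000

fp16_gb = weight_memory_gb(NUM_PARAMS, 16)  # 16-bit baseline
two_bit_gb = weight_memory_gb(NUM_PARAMS, 2)  # extreme 2-bit setting

print(f"16-bit: {fp16_gb:.2f} GB, 2-bit: {two_bit_gb:.2f} GB")
```

Under these assumptions, moving from 16 bits to 2 bits per parameter cuts weight storage by a factor of eight, which is why the accuracy cost of such extreme settings matters.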

Methodology

The paper details a modified version of AQ, a classic algorithm from the multi-codebook quantization (MCQ) family, adapted to compress LLM weights while preserving model behavior. The new approach, named Additive Quantization for LLMs (AQLM), reformulates the standard AQ optimization problem to minimize the error in each LLM layer's outputs rather than in the weights themselves. By making the algorithm instance-aware and incorporating layer calibration, AQLM achieves a homogeneous and simple quantization format that maintains high accuracy even at extreme compression levels such as 2 bits per parameter.
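The core idea can be sketched in a few lines: each small group of weights is stored as one index per codebook and reconstructed as the sum of the selected codewords, and quality is judged by the error in the layer output on calibration inputs. The sketch below is a toy illustration, not the paper's implementation. All names (`decode_group`, `output_error`, `code_bits_per_weight`) and the tiny hand-written codebooks are assumptions, and the search for good codes and codebooks, which is the hard part, is omitted entirely.

```python
def decode_group(codes, codebooks):
    """Reconstruct one weight group as the sum of one codeword per codebook."""
    group_size = len(codebooks[0][0])
    out = [0.0] * group_size
    for m, idx in enumerate(codes):
        for j, value in enumerate(codebooks[m][idx]):
            out[j] += value
    return out


def decode_row(row_codes, codebooks):
    """Concatenate decoded groups into one full weight row."""
    row = []
    for codes in row_codes:
        row.extend(decode_group(codes, codebooks))
    return row


def matmul(W, X):
    """Plain-Python product of W (d_out x d_in) and X (d_in x n)."""
    return [[sum(w * x for w, x in zip(row, col)) for col in zip(*X)] for row in W]


def output_error(W, W_hat, X):
    """Squared Frobenius norm of W X - W_hat X (layer-output error)."""
    Y, Y_hat = matmul(W, X), matmul(W_hat, X)
    return sum((a - b) ** 2 for ra, rb in zip(Y, Y_hat) for a, b in zip(ra, rb))


def code_bits_per_weight(num_codebooks, bits_per_code, group_size):
    """Bits spent on codes per weight, excluding codebook storage."""
    return num_codebooks * bits_per_code / group_size


# Toy setup: 2 codebooks, 4 codewords each (2-bit codes), groups of 2 weights.
codebooks = [
    [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.5, 0.5], [-0.5, 0.5], [0.25, -0.25]],
]
W = [[0.6, 0.4, 1.1, 0.9]]                      # one original weight row
W_hat = [decode_row([[1, 2], [3, 0]], codebooks)]  # hand-picked codes
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]  # toy calibration inputs

print(W_hat, output_error(W, W_hat, X))
```

Comparing `W X` with `W_hat X` rather than `W` with `W_hat` is what distinguishes the output-aware objective from plain weight reconstruction: errors on weights that the calibration inputs barely excite count for less. As a rough guide to the storage budget, `code_bits_per_weight(1, 16, 8)` evaluates to 2.0, meaning a single codebook with 16-bit codes over groups of 8 weights spends 2 bits per weight on codes.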

Results

In the results section, AQLM shows superior performance when compressing LLMs of various sizes, with significant improvements over existing methods across a range of bit-widths. The paper presents extensive evaluations on popular models such as the Llama 2 family, measuring both perplexity and zero-shot task accuracy. Notably, substantial improvements in perplexity are recorded, particularly at the extreme low end of 2 bits per parameter.
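For readers less familiar with the perplexity metric, the sketch below shows the standard definition: the exponential of the average negative log-likelihood per token, so lower is better. The function name and the toy log-probabilities are assumptions for illustration and are not taken from the paper's evaluation code.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from per-token natural-log probabilities.

    Defined as exp(mean negative log-likelihood); a model that assigns
    probability 1/k to every token has perplexity k.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Toy example: every token was predicted with probability 0.25.
toy_log_probs = [math.log(0.25)] * 4
print(perplexity(toy_log_probs))  # close to 4.0
```

A quantized model is compared against its uncompressed counterpart by computing this quantity on the same held-out text; a smaller increase in perplexity indicates less damage from compression.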

Conclusion

AQLM is a significant contribution to the field of LLM quantization, showing that it is possible to maintain high accuracy even at low bit counts. It is a critical step toward making complex LLMs usable in a wider range of environments, especially those with limited resources. The release of its implementation further supports ongoing research and development, providing a foundation for future exploration of efficient LLM deployment on consumer-grade devices. Future work aims to streamline AQLM's computational process and explore optimal parameter settings for model compression.