Overview of Temporal Knowledge Graph Completion

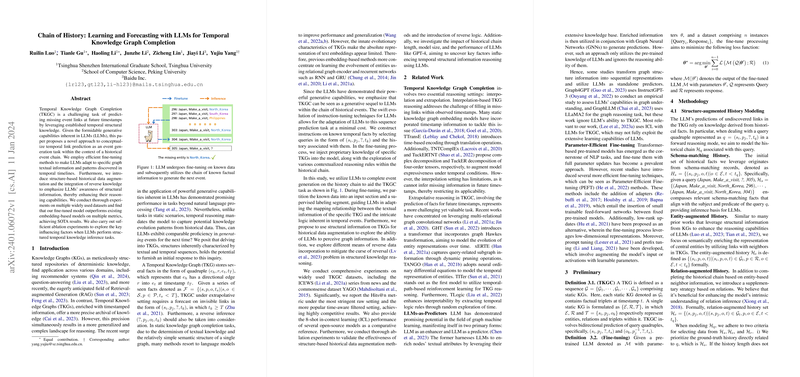

Temporal Knowledge Graphs (TKGs) record how entities and the relationships between them change over time. Predicting missing event links in a TKG, a task known as Temporal Knowledge Graph Completion (TKGC), is hard because the graph itself keeps changing: a link that holds at one timestamp may not hold at the next. This paper recasts TKGC as a sequence prediction problem that large language models (LLMs) can be fine-tuned to solve.

Methodology Employed in Research

The authors' method hinges on fine-tuning LLMs for the temporal sequence prediction task. The models are fine-tuned on known events from TKGs so that they generate plausible events more reliably. During fine-tuning, past events are presented to the model in a structured form, which helps it learn the relationships and patterns needed to forecast future events. The researchers also propose data augmentation techniques and the integration of "reverse logic" to make the LLMs better at picking up subtleties in the structural knowledge.
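To make the idea of structured history concrete, here is a minimal Python sketch of how past events might be turned into a fine-tuning prompt. It is an illustration under assumptions, not the authors' implementation: the `Quad` type, the prompt layout, and the helpers `add_reverse`, `select_history`, and `build_prompt` are all hypothetical, and reading "reverse logic" as adding inverse-direction copies of each event is only one plausible interpretation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quad:
    """One TKG event: (subject, relation, object) observed at a timestamp."""
    subject: str
    relation: str
    obj: str
    time: int


def add_reverse(quads):
    """Assumed augmentation: append an inverse-direction copy of every event.

    This is one possible reading of "reverse logic", not the paper's definition.
    """
    reversed_quads = [
        Quad(q.obj, f"inverse of {q.relation}", q.subject, q.time) for q in quads
    ]
    return list(quads) + reversed_quads


def select_history(quads, subject, query_time, max_events=3):
    """Return the most recent events about `subject` before `query_time`, oldest first."""
    past = [q for q in quads if q.subject == subject and q.time < query_time]
    past.sort(key=lambda q: q.time)
    return past[-max_events:]


def build_prompt(history, subject, relation, query_time):
    """Serialize the history as one line per event, ending with the open query."""
    lines = [f"{q.time}: [{q.subject}, {q.relation}, {q.obj}]" for q in history]
    lines.append(f"{query_time}: [{subject}, {relation},")
    return "\n".join(lines)


# Toy events with placeholder entity names.
events = [
    Quad("A", "visits", "B", 1),
    Quad("A", "negotiates with", "C", 2),
    Quad("B", "hosts", "A", 3),
]
augmented = add_reverse(events)
prompt = build_prompt(select_history(augmented, "A", 4), "A", "visits", 4)
print(prompt)
```

In this toy setup the reversed copy of the event about `B` at time 3 becomes part of the history for `A`, which shows how an augmentation of this kind could give the model more context per query. The fine-tuning target would be the missing object that completes the last line.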

Analysis and Experimental Results

The experiments compare LLMs fine-tuned with these techniques against existing TKGC methods. On several TKGC benchmarks, the fine-tuned models outperformed the existing methods, achieving state-of-the-art performance. The evaluation covers several metrics and examines how the amount of historical information available and the size of the LLM affect accuracy in predicting temporal graph links.
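The summary above does not name the metrics used. As an assumption, the sketch below shows Hits@k, a ranking metric commonly reported for link prediction: the fraction of queries whose correct entity appears among the model's top k candidates. The function name `hits_at_k` and the toy data are hypothetical.

```python
def hits_at_k(ranked_predictions, gold, k):
    """Fraction of queries whose gold answer is among the top-k ranked candidates."""
    if not gold:
        raise ValueError("gold must contain at least one answer")
    hits = sum(
        1 for preds, answer in zip(ranked_predictions, gold) if answer in preds[:k]
    )
    return hits / len(gold)


# Toy data: one ranked candidate list per query, best candidate first.
predictions = [
    ["B", "C", "D"],
    ["C", "A", "B"],
    ["D", "B", "A"],
]
gold = ["B", "A", "C"]

hits1 = hits_at_k(predictions, gold, 1)
hits3 = hits_at_k(predictions, gold, 3)
print(f"Hits@1 = {hits1:.3f}, Hits@3 = {hits3:.3f}")
```

Varying the number of history events in each prompt, or swapping in a larger model, and recomputing a metric like this is one way the sensitivity analyses described above could be run.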

Impact of Research and Future Work

The paper demonstrates the potential of using LLMs not just as tools for natural language processing but as predictive models for temporal reasoning in knowledge graphs. The findings suggest that the predictive capabilities of LLMs can be significantly enhanced when the models are given structured, temporal, historical data. This research opens avenues for applying LLMs to complex tasks in other domains and lays groundwork for future work on understanding and predicting the temporal dynamics of knowledge graphs.