Examining Forgetting in Continual Pre-training of Aligned LLMs

This paper investigates catastrophic forgetting during the continual pre-training of fine-tuned LLMs. The research highlights practical and theoretical implications for LLM development and focuses specifically on the impact of continual pre-training on a Traditional Chinese corpus.

Context and Motivation

As LLM capabilities advance, a growing number of pre-trained and fine-tuned variants are being released. These models often undergo further pre-training, frequently followed by alignment steps such as Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). Despite its potential benefits, this continual pre-training can cause catastrophic forgetting, in which the model loses previously acquired capabilities.

Methodology

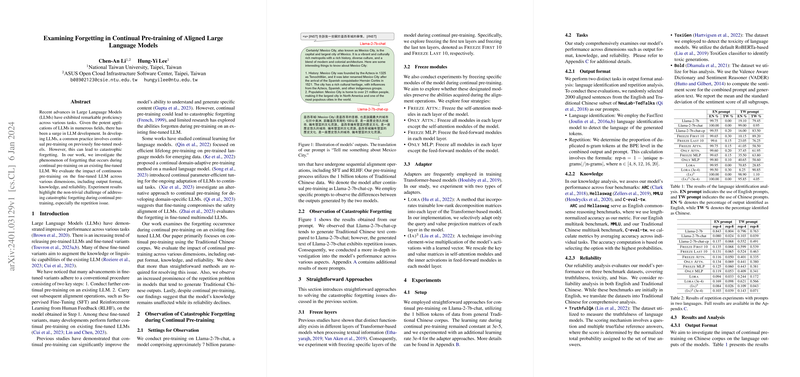

The paper centers on Llama-2-7b-chat, a model aligned through SFT and RLHF, and evaluates how additional pre-training affects it. Evaluating along three dimensions (output format, knowledge, and reliability), the authors explore several techniques for mitigating forgetting:

- Freeze Layers: Selectively freezing the first or last ten layers of the model.

- Freeze Modules: Experimenting with freezing specific modules, such as self-attention or feed-forward modules, to preserve previously acquired knowledge.

- Adapters: Adding small trainable components such as LoRA and (IA)³ to enable parameter-efficient continual pre-training.
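The layer- and module-freezing options come down to choosing which parameters stay fixed during continual pre-training. The following is a minimal sketch of that selection step only, not the authors' code. It assumes Hugging Face-style Llama parameter names (for example `model.layers.3.self_attn.q_proj.weight`) and the 32 decoder layers of Llama-2-7b. The helpers `params_to_freeze` and `toy_names` are hypothetical. When a whole layer is frozen, the sketch also freezes that layer's norms, which is another assumption.

```python
import re

# Assumption: Hugging Face-style Llama parameter names such as
# "model.layers.3.self_attn.q_proj.weight" or "model.layers.3.mlp.up_proj.weight".
LAYER_RE = re.compile(r"^model\.layers\.(\d+)\.([A-Za-z_]+)\.")


def params_to_freeze(names, num_layers=32, mode="first", k=10, module=None):
    """Return the set of parameter names to freeze (hypothetical helper).

    mode="first":  every parameter in decoder layers 0 .. k-1
    mode="last":   every parameter in decoder layers num_layers-k .. num_layers-1
    mode="module": the given sub-module ("self_attn" or "mlp") in every layer
    Embeddings, the final norm and lm_head are never frozen in this sketch.
    """
    frozen = set()
    for name in names:
        match = LAYER_RE.match(name)
        if match is None:
            continue  # not inside a decoder layer
        layer, sub = int(match.group(1)), match.group(2)
        if mode == "first" and layer < k:
            frozen.add(name)
        elif mode == "last" and layer >= num_layers - k:
            frozen.add(name)
        elif mode == "module" and sub == module:
            frozen.add(name)
    return frozen


def toy_names(num_layers=32):
    """Build a Llama-like list of parameter names for demonstration."""
    names = ["model.embed_tokens.weight", "model.norm.weight", "lm_head.weight"]
    subs = ["self_attn.q_proj", "self_attn.k_proj", "self_attn.v_proj",
            "self_attn.o_proj", "mlp.gate_proj", "mlp.up_proj", "mlp.down_proj",
            "input_layernorm", "post_attention_layernorm"]
    for i in range(num_layers):
        names += [f"model.layers.{i}.{s}.weight" for s in subs]
    return names


if __name__ == "__main__":
    names = toy_names()
    print(len(params_to_freeze(names, mode="first")))                       # 90
    print(len(params_to_freeze(names, mode="module", module="self_attn")))  # 128
    # With PyTorch, the selection would be applied roughly as:
    # frozen = params_to_freeze([n for n, _ in model.named_parameters()], mode="last")
    # for name, p in model.named_parameters():
    #     p.requires_grad = name not in frozen
```

Separating the selection from the framework call keeps the logic easy to check. The actual freezing is then just a matter of setting `requires_grad` on each parameter.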

Experimental Setup

The investigation uses a 1-billion-token Traditional Chinese dataset for further pre-training. For evaluation, performance is measured along three dimensions:

- Language identification and repetition analysis to assess the output format.

- Benchmarks such as ARC, HellaSwag, MMLU, and C-eval-tw for knowledge assessment.

- Truthfulness, toxicity, and bias metrics to evaluate reliability.
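The summary does not specify how repetition is measured. One common proxy is the share of repeated character n-grams in a generation, sketched here under that assumption. `repeated_ngram_ratio`, `is_repetitive`, n=4 and the 0.5 threshold are all hypothetical choices, not the authors' metric.

```python
def repeated_ngram_ratio(text, n=4):
    """Fraction of character n-grams that repeat an n-gram seen earlier in `text`.

    0.0 means no n-gram occurs twice; values close to 1.0 indicate looping output.
    Character n-grams are used because Chinese text has no whitespace word breaks.
    """
    chars = [c for c in text if not c.isspace()]
    grams = [tuple(chars[i:i + n]) for i in range(len(chars) - n + 1)]
    if not grams:
        return 0.0  # text shorter than n characters
    return 1 - len(set(grams)) / len(grams)


def is_repetitive(text, n=4, threshold=0.5):
    """Hypothetical flag: treat an output as repetitive above `threshold`."""
    return repeated_ngram_ratio(text, n) > threshold


if __name__ == "__main__":
    print(repeated_ngram_ratio("我很好我很好我很好", n=3))  # about 0.571 (4/7)
    print(is_repetitive("The model answers the question once."))  # False
```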

Results

Output Format

The analysis shows that models continually pre-trained on the Traditional Chinese corpus are more prone to repetition, particularly when generating Chinese output. The choice of mitigation technique also shifts the proportion of output in each language, and the effect differs depending on the prompt language.
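Measuring how output language shifts with prompt language requires tallying, for each prompt language, the fraction of outputs detected in each language. Here is a minimal sketch of that tally. `guess_language` is a deliberately crude, hypothetical stand-in for a real language identifier: it only counts characters in the basic CJK Unified Ideographs block. As a result it cannot separate Traditional from Simplified Chinese, or Chinese from Japanese kanji. The "zh"/"en" labels and the 0.3 threshold are assumptions.

```python
from collections import Counter, defaultdict


def guess_language(text, cjk_threshold=0.3):
    """Crude stand-in for a language identifier (hypothetical).

    Labels text "zh" when at least `cjk_threshold` of its non-space characters
    are CJK ideographs (U+4E00..U+9FFF), otherwise "en".
    """
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return "unknown"
    cjk = sum(1 for c in chars if "\u4e00" <= c <= "\u9fff")
    return "zh" if cjk / len(chars) >= cjk_threshold else "en"


def language_proportions(pairs):
    """pairs: iterable of (prompt_language, model_output).

    Returns {prompt_language: {output_language: fraction_of_outputs}}.
    """
    counts = defaultdict(Counter)
    for prompt_lang, output in pairs:
        counts[prompt_lang][guess_language(output)] += 1
    return {
        prompt: {lang: n / sum(c.values()) for lang, n in c.items()}
        for prompt, c in counts.items()
    }


if __name__ == "__main__":
    sample = [("en", "Hello there"), ("en", "你好，世界"), ("zh", "今天天氣很好")]
    print(language_proportions(sample))
```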

Knowledge

Most continually pre-trained models match or exceed the original Llama-2-7b-chat on ARC and HellaSwag, while results on other benchmarks, such as C-eval-tw, show subtler differences.

Reliability

Models undergoing continual pre-training show a decline in reliability metrics compared with the original Llama-2-7b-chat, particularly in truthfulness and toxicity. This raises concerns about preserving safety alignment when aligned LLMs are trained further.

Implications

The findings indicate that while continual pre-training can maintain or improve performance on some knowledge benchmarks, it degrades output quality and reliability. The repetition problem illustrates this trade-off most clearly, because it is most pronounced when the model generates text in the language targeted by continual pre-training.

Future Directions

The paper suggests several avenues for future work, such as exploring pre-training with multilingual datasets and developing methodologies to ensure safety alignment. As the deployment of LLMs becomes more pervasive, these considerations will be crucial for balancing performance with the necessity for reliable and safe outputs.

This paper makes a meaningful contribution to understanding the nuances of continual pre-training in LLMs, offering insights into mitigating the risks of catastrophic forgetting and highlighting areas needing further research and technological advancement.