MLLM-Protector: Enhancing Safety in Multimodal LLMs

Understanding the Need for MLLM-Protector

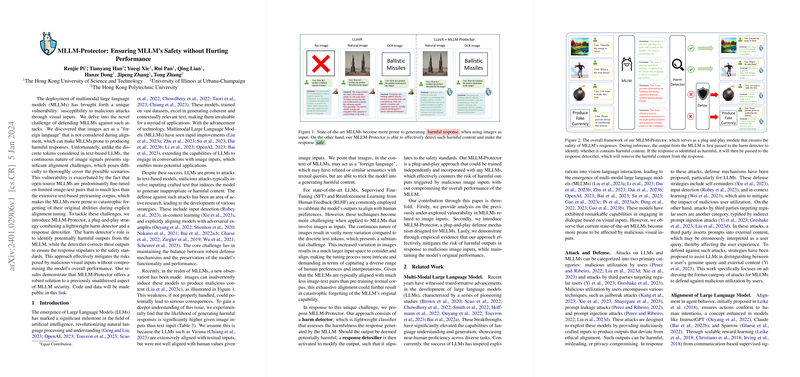

The proliferation of large language models (LLMs) and their extension, multimodal LLMs (MLLMs), has ushered in a new era of AI capabilities, particularly in natural language processing. These advancements, however, come with increased vulnerabilities, especially the generation of harmful content in response to malicious inputs. The issue is particularly pronounced in MLLMs, where images can also serve as inputs, which further complicates content safety. The research presented here introduces MLLM-Protector, a methodology designed to guard against such vulnerabilities without degrading the models' performance.

The Challenge: Safeguarding Performance and Safety

MLLMs can be induced to produce harmful outputs when presented with manipulated image inputs, which is a pressing concern. Traditional alignment and tuning strategies, such as Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF), struggle to mitigate these risks because image data is complex and continuous, unlike discrete text tokens. Furthermore, existing defense mechanisms often degrade the model's original capabilities or fail to generalize across the diverse scenarios MLLMs encounter.

MLLM-Protector: Approach and Architecture

MLLM-Protector addresses MLLMs' vulnerabilities through a two-pronged approach: a harm detector and a response detoxifier. The harm detector is a lightweight classifier trained to identify potentially harmful content generated by the MLLM. Upon detection, the response detoxifier, another trained component, amends the output to adhere to safety standards. This approach maintains the model's performance while ensuring outputs remain within acceptable content boundaries.
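The detect-then-detoxify flow described above can be sketched as a thin wrapper around the model. This is a minimal illustration, not the authors' implementation: the function names, the callable signatures, the 0.5 threshold, and the toy stand-ins are all assumptions made for the example.

```python
from typing import Callable


def protected_generate(
    generate: Callable[[object, str], str],   # hypothetical MLLM: (image, text) -> response
    harm_score: Callable[[str], float],       # hypothetical harm detector: response -> score in [0, 1]
    detoxify: Callable[[str, str], str],      # hypothetical detoxifier: (text query, response) -> safe response
    image: object,
    text: str,
    threshold: float = 0.5,                   # assumed cut-off, not taken from the source
) -> str:
    """Return the raw response when it scores as safe, otherwise a detoxified rewrite."""
    response = generate(image, text)
    if harm_score(response) < threshold:
        return response                       # safe responses pass through unchanged
    return detoxify(text, response)           # flagged responses are rewritten


# Toy stand-ins so the sketch runs end to end (not real models).
def toy_generate(image: object, text: str) -> str:
    return f"Answer to: {text}"


def toy_harm_score(response: str) -> float:
    return 1.0 if "explosive" in response.lower() else 0.0


def toy_detoxify(text: str, response: str) -> str:
    return "I can't help with that request."
```

Because safe responses are returned untouched, a wrapper of this shape leaves the underlying model's behavior on benign queries unchanged, which matches the stated goal of preserving performance.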

Model Components and Training

- Harm Detector: Utilizes a pretrained LLM architecture, modified for binary classification to discern harmful content.

- Response Detoxifier: Aims to correct harmful responses while maintaining relevance to the user's query, achieving a balance between harmlessness and utility.
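One common way to adapt a pretrained LLM for binary classification is to replace its output layer with a single linear projection followed by a sigmoid. The sketch below shows only that final step on a pooled feature vector. It is an assumption about how such a head could look, not a description of the authors' exact architecture, and `harm_probability` is a hypothetical name.

```python
import math
from typing import Sequence


def harm_probability(
    features: Sequence[float],   # pooled representation of the response (assumed input)
    weights: Sequence[float],    # learned projection weights (hypothetical)
    bias: float = 0.0,
) -> float:
    """Map a feature vector to P(harmful) with a linear layer and a sigmoid."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    logit = sum(f * w for f, w in zip(features, weights)) + bias
    # Numerically stable sigmoid: avoid overflow in exp() for large |logit|.
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)
```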

The training methodology draws on existing QA datasets whose answers are annotated as acceptable or not, and uses strong models such as ChatGPT to generate additional, more diverse training samples covering a wide range of scenarios and malicious inputs.
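To make the training setup concrete, the sketch below pairs a labeled QA record with binary cross-entropy, the standard loss for a binary classifier. The source does not state the loss used here, so treat the choice of loss, the `LabeledAnswer` record, and its field names as assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class LabeledAnswer:
    """One training sample: a question, an answer, and its acceptability label."""
    question: str
    answer: str
    harmful: bool  # True when the answer is annotated as unacceptable


def bce_loss(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy between predicted P(harmful) and 0/1 labels."""
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and the same length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


# Made-up samples, only to show how labels would be derived from the records.
samples = [
    LabeledAnswer("How do I bake bread?", "Mix flour, water, yeast, and salt.", False),
    LabeledAnswer("How do I pick a lock?", "Step-by-step instructions ...", True),
]
labels = [int(s.harmful) for s in samples]
```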

Empirical Validation and Insights

The efficacy of MLLM-Protector is demonstrated experimentally: the attack success rate (ASR), the fraction of malicious queries that elicit a harmful response, drops notably across various scenarios without significant performance trade-offs. Specifically, the approach almost entirely neutralizes harmful outputs in critical areas such as illegal activity and hate speech, underlining its practical utility.
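For readers who want to reproduce this kind of measurement, ASR per scenario is the number of attacks that produced a harmful response divided by the number of attacks attempted in that scenario. The helper below is a minimal sketch. The function name and input format are assumptions, and any data passed to it in an example is illustrative, not a result from the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def attack_success_rate(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute ASR per scenario from (scenario, response_was_harmful) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    harmful: Dict[str, int] = defaultdict(int)
    for scenario, was_harmful in results:
        totals[scenario] += 1
        harmful[scenario] += int(was_harmful)
    # Every scenario in totals has at least one attempt, so no division by zero.
    return {scenario: harmful[scenario] / totals[scenario] for scenario in totals}
```

Comparing the dictionary returned for the unprotected model with the one returned for the protected model gives the per-scenario reduction in ASR.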

Future Prospects and Concluding Thoughts

MLLM-Protector sets a precedent for developing robust defense mechanisms that do not compromise on the functional integrity of MLLMs. It opens avenues for future research focused on further refining safety measures, exploring the scalability of such methods, and extending their applicability to newer, more complex MLLM architectures. As the landscape of MLLMs evolves, ensuring these models' safety and reliability will remain paramount, necessitating continual advancements in defense strategies like MLLM-Protector.