Background

Large language models (LLMs) perform strongly across NLP tasks, particularly after instruction tuning. Even so, a model's fixed capacity can cap its performance when it has to handle many tasks at once. Scaling the model up in the usual way is resource-intensive and often impractical, so a method is needed that raises capacity without prohibitive cost.

Introducing Parameter-Efficient Sparsity Crafting

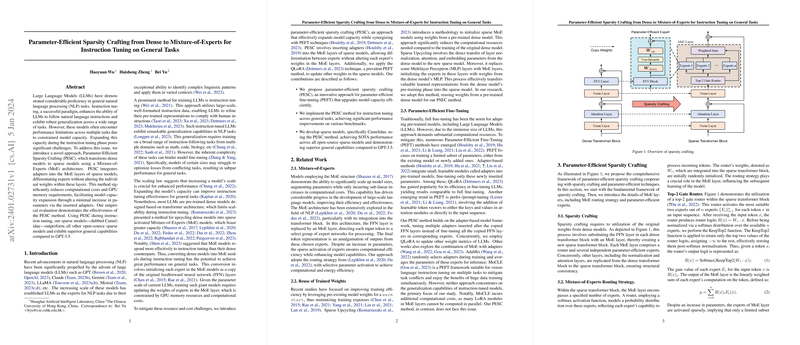

The paper presents Parameter-Efficient Sparsity Crafting (PESC), which addresses the tension between the need to scale and limited resources. PESC converts a dense LLM into a sparse model by using a Mixture-of-Experts (MoE) architecture. It inserts adapters (small tunable modules) into the MoE layers, which lets the model expand its capacity without modifying the existing weights. The approach is computationally efficient and adds only a small number of new parameters, avoiding the heavy resource demands usually associated with scaling a model.
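The idea of adapters inside an MoE layer can be illustrated with a small, dependency-free sketch. It is not the paper's implementation: the ReLU activations, the top-k routing with a softmax over the selected logits, the residual form of the adapter, and every dimension and name (`AdapterMoE`, `d_bottleneck`, and so on) are assumptions chosen for illustration. The point it shows is that one frozen feed-forward block is shared by all experts, while only the per-expert adapters and the router are new.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    """Small random matrix standing in for learned weights."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]


class AdapterMoE:
    """Hypothetical MoE layer: one frozen FFN plus one small adapter per expert."""

    def __init__(self, d_model, d_ff, d_bottleneck, n_experts, top_k, seed=0):
        rng = random.Random(seed)
        # Frozen weights of the original dense FFN, shared by every expert.
        self.W1 = rand_matrix(d_ff, d_model, rng)
        self.W2 = rand_matrix(d_model, d_ff, rng)
        # New (trainable) parameters: per-expert adapters and a router.
        self.adapters = [
            (rand_matrix(d_bottleneck, d_model, rng),   # down-projection
             rand_matrix(d_model, d_bottleneck, rng))   # up-projection
            for _ in range(n_experts)
        ]
        self.router = rand_matrix(n_experts, d_model, rng)
        self.top_k = top_k

    def shared_ffn(self, x):
        return matvec(self.W2, relu(matvec(self.W1, x)))

    def forward(self, x):
        h = self.shared_ffn(x)  # computed once, reused by all selected experts
        logits = matvec(self.router, x)
        # Keep the top_k experts with the largest router logits.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in chosen])
        out = [0.0] * len(h)
        for g, i in zip(gates, chosen):
            down, up = self.adapters[i]
            delta = matvec(up, relu(matvec(down, h)))
            for j in range(len(out)):
                out[j] += g * (h[j] + delta[j])  # residual adapter on the FFN output
        return out, chosen


layer = AdapterMoE(d_model=8, d_ff=16, d_bottleneck=2, n_experts=4, top_k=2)
y, experts_used = layer.forward([0.5, -1.0, 0.25, 2.0, -0.75, 1.5, 0.0, -0.1])
print(len(y), experts_used)
```

In this sketch the experts differ only through their adapters, so adding an expert costs two small matrices rather than a full copy of the feed-forward block.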

PESC's Empirical Validation

Empirically, the sparse models built with PESC, named Camelidae, outperform all other open-source sparse models in the reported evaluations. They also show stronger general capabilities than GPT-3.5 on several standard benchmarks. The paper documents how PESC is implemented and compares Camelidae against other models across a range of benchmarks, where it shows stronger performance on general tasks.

Advantages and Future Potential

PESC addresses the conflict between scaling model capacity and keeping the growth in parameters and compute manageable. The reported results suggest that PESC could influence how models are tuned in the future. They point toward LLMs that understand and generate human-like language on top of an architecture that is both powerful and efficient.
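A rough parameter count makes the "manageable increase" concrete. The sketch below compares two ways of turning one dense feed-forward block into an MoE layer: copying the whole block once per expert, or sharing it and adding one adapter per expert. All sizes are hypothetical and are not taken from the paper; the feed-forward block is simplified to two weight matrices, and biases are ignored.

```python
def ffn_params(d_model, d_ff):
    """Weights in a simplified two-matrix feed-forward block (no biases)."""
    return 2 * d_model * d_ff


def adapter_params(d_model, d_bottleneck):
    """Weights in one adapter: a down-projection plus an up-projection."""
    return 2 * d_model * d_bottleneck


def added_params(d_model, d_ff, d_bottleneck, n_experts):
    """New parameters per layer under each strategy, router included."""
    router = d_model * n_experts
    return {
        # The dense block already exists, so only n_experts - 1 copies are new.
        "copy_ffn": (n_experts - 1) * ffn_params(d_model, d_ff) + router,
        # The dense block is shared; every expert adds one adapter.
        "adapters": n_experts * adapter_params(d_model, d_bottleneck) + router,
    }


# Hypothetical sizes, chosen only to illustrate the order of magnitude.
counts = added_params(d_model=4096, d_ff=11008, d_bottleneck=64, n_experts=8)
print(counts)
print(round(counts["copy_ffn"] / counts["adapters"]))
```

Under these assumed sizes the adapter route adds about 4.2 million weights per layer against roughly 631 million for full copies, a difference of around two orders of magnitude.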

Overall, PESC is a notable advance in LLM fine-tuning. It yields models such as Camelidae that could set new standards on NLP tasks while keeping computational and resource overhead manageable.