GPT-4V(ision) is a Generalist Web Agent, if Grounded

The paper "GPT-4V(ision) is a Generalist Web Agent, if Grounded" by Boyuan Zheng et al. from The Ohio State University examines the potential of large multimodal models (LMMs) such as GPT-4V(ision) for web navigation. The researchers investigate how these models, when appropriately grounded, can act as generalist web agents capable of handling diverse tasks across various websites. Building on recent advances in LMMs, the paper develops such an agent with a specific focus on the grounding challenge.

Overview

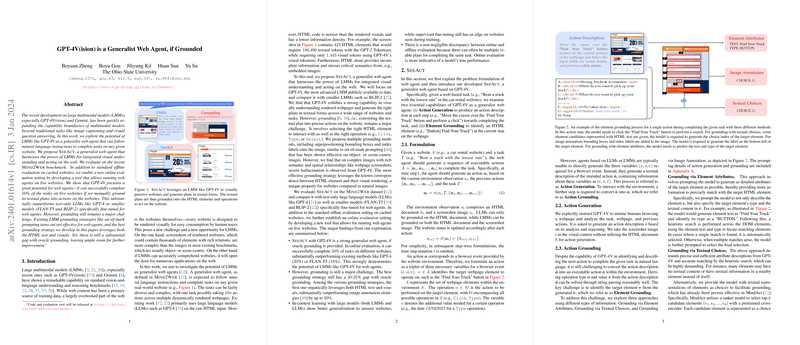

The authors introduce SeeAct, an approach that uses GPT-4V for web navigation by combining visual understanding with textual planning. The evaluation uses the Mind2Web benchmark, which provides a set of tasks on real-world websites. The experiments cover both offline evaluation on cached websites and online evaluation on live websites, giving a fuller assessment of the model's real-world applicability.

Methodology

SeeAct separates each step into action generation and action grounding:

- Action Generation: GPT-4V generates a textual plan based on the visual context of the webpage and the task requirements.

- Action Grounding: The textual plan is converted into executable actions. Three grounding strategies are explored:

  - Grounding via Element Attributes: The model describes the target element in detail, and a heuristic search uses that description to locate it on the page.

  - Grounding via Textual Choices: A candidate ranking model narrows down the page elements, which are then presented as textual choices for the model to select from.

  - Grounding via Image Annotation: Visual labels are added to elements in the screenshot, and the model must generate the label of the target element.
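Two of the grounding strategies above can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the `Element` type and the functions `format_choices`, `parse_choice`, and `ground_by_attributes` are hypothetical names, the `ELEMENT: <letter>` answer format is assumed for the example, and the model call is replaced by a hard-coded reply.

```python
import re
from dataclasses import dataclass
from string import ascii_uppercase
from typing import Optional


@dataclass
class Element:
    """Hypothetical stand-in for a candidate page element."""
    tag: str
    text: str


def format_choices(candidates: list[Element]) -> str:
    """Textual choices: render candidates as lettered options.

    Assumes at most 26 candidates (one letter each).
    """
    return "\n".join(
        f"{ascii_uppercase[i]}. <{el.tag}> {el.text}"
        for i, el in enumerate(candidates)
    )


def parse_choice(response: str, candidates: list[Element]) -> Optional[Element]:
    """Map an answer such as 'ELEMENT: B' back to a candidate (assumed format)."""
    match = re.search(r"ELEMENT:\s*([A-Z])\b", response)
    if match is None:
        return None
    index = ascii_uppercase.index(match.group(1))
    return candidates[index] if index < len(candidates) else None


def ground_by_attributes(
    description: str, tag: str, candidates: list[Element]
) -> Optional[Element]:
    """Element attributes: return the first candidate with a matching tag
    whose text contains the described text (a simple heuristic search)."""
    needle = description.strip().lower()
    for el in candidates:
        if el.tag == tag and needle in el.text.lower():
            return el
    return None


candidates = [
    Element("a", "Sign in"),
    Element("input", "Search flights"),
    Element("button", "Search"),
]
prompt_options = format_choices(candidates)

# Hard-coded stand-in for the model's reply; a real agent would query the LMM.
model_reply = "The next step is to press the search button.\nELEMENT: C"
chosen = parse_choice(model_reply, candidates)
```

In this sketch, a malformed or missing answer yields `None`, so the caller can decide whether to retry or skip the step rather than act on a wrong element.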

Key Findings

- Performance: SeeAct with GPT-4V shows strong potential, completing 51.1% of tasks on live websites when given oracle grounding. This substantially outperforms text-only models such as GPT-4, which completed 13.3% of tasks.

- Grounding Challenge: Despite the promise shown by LMMs, grounding remains a significant bottleneck. Among the grounding strategies, textual choices proved the most effective, while image annotation faced substantial issues with hallucinations and spatial linking errors.

- Evaluation Discrepancy: There is a notable discrepancy between offline and online evaluations, with online evaluations providing a more accurate measure of a model's performance due to the dynamic nature of the web and the presence of multiple viable task completion plans.
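A toy example may help show why offline scores can understate an agent when several plans are viable. The sketch below is not the benchmark's scoring code: the action strings and the functions `offline_step_score` and `online_task_score` are made up for illustration. It assumes that offline scoring compares each predicted step with a single recorded trajectory, while online scoring only checks whether the goal conditions hold at the end.

```python
def offline_step_score(predicted: list[str], reference: list[str]) -> float:
    """Fraction of steps that exactly match the single recorded trajectory."""
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


def online_task_score(final_state: set[str], goal: set[str]) -> bool:
    """The task counts as completed if every goal condition holds at the end."""
    return goal <= final_state


# Recorded trajectory reaches the flights page through the menu.
reference = ["click:menu", "click:flights", "type:from=NYC", "click:search"]
# The agent reaches the same page through the search box instead.
predicted = ["type:searchbox=flights", "click:flights", "type:from=NYC", "click:search"]

step_accuracy = offline_step_score(predicted, reference)
# Under a strict rule that every step must match, this run fails offline.
offline_success = step_accuracy == 1.0

goal = {"origin=NYC", "results_shown"}
task_done = online_task_score({"origin=NYC", "results_shown"}, goal)
```

Here the agent takes a different but valid first step, so it is penalized against the recorded trajectory even though the task itself ends in the desired state.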

Implications and Future Directions

The paper's implications span both research and practice:

- Web Accessibility and Automation: The potential of LMMs like GPT-4V as generalist web agents can significantly enhance web accessibility and automate complex sequences of actions on websites, aiding users with disabilities and streamlining routine tasks.

- Improvement in Grounding Techniques: The persistent gap between current grounding methods and oracle grounding highlights the need for further research. Better utilization of the unique properties of web environments, such as the correspondence between HTML and visual elements, could mitigate hallucinations and improve model accuracy.

- Evaluation Metrics: The difference between offline and online evaluations suggests that future models should be tested dynamically on live websites to ensure robust performance in real-world scenarios.

Conclusion

The research presents a thorough and insightful analysis of employing LMMs for web navigation tasks, emphasizing the critical role of grounding in converting multimodal model capabilities into practical, real-world applications. While GPT-4V and similar models hold substantial promise, addressing the grounding challenge remains pivotal for realizing their full potential as generalist web agents. Future work in this area should focus on refining grounding strategies and possibly developing new evaluation frameworks to better capture the dynamic and multifaceted nature of web tasks. This paper lays a strong foundation for subsequent advancements in the intersection of web automation and multimodal AI.