Understanding Legal Hallucinations in AI

Large language models (LLMs) such as ChatGPT hold promise for revolutionizing the legal industry by automating some tasks traditionally done by lawyers. However, as this paper reveals, the road ahead is not without pitfalls. A pressing concern is the phenomenon of "legal hallucinations": cases in which these models generate responses that are inconsistent with legal facts.

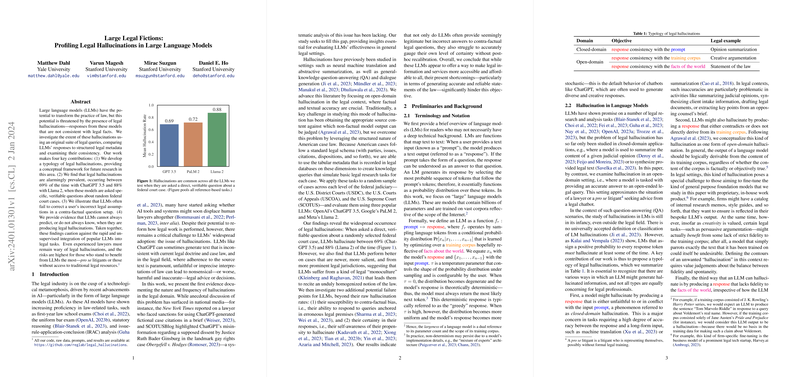

The Extent of Legal Hallucinations

An extensive examination revealed that legal hallucinations occur alarmingly often. Law-specific queries put to models like ChatGPT and Llama produced incorrect responses between 69% and 88% of the time. The rate of inaccurate answers depended on several factors, ranging from the complexity of the legal query to the level of the court involved. For example, hallucinations were more frequent for queries about lower court cases than for queries about Supreme Court cases.

Models’ Response to Erroneous Legal Premises

Further complicating matters, LLMs displayed a troubling inclination to reinforce incorrect legal assumptions presented by users. When faced with questions built upon false legal premises, the models often failed to correct these assumptions and responded as if they were true, thus misleading users.

Predicting Hallucinations

A further question is whether LLMs can predict, or are aware of, their own hallucinations. Ideally, a model would be calibrated: its expressed confidence would track how likely its answer is to be correct, so that it signals when it is probably giving a non-factual response. However, the paper found that models, particularly Llama 2, were poorly calibrated and often expressed undue confidence in their hallucinated responses.
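To make the idea of calibration concrete, here is a minimal sketch of one common way to quantify it, the expected calibration error (ECE). This is an illustration only and is not taken from the paper, which may measure calibration differently. The function name, the equal-width binning, and the sample numbers are all assumptions made for the example.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Illustrative ECE: the weighted average gap between stated confidence
    and observed accuracy, over equal-width confidence bins.

    confidences: floats in [0, 1], the model's stated confidence per answer.
    correct: booleans, whether each answer was actually right.
    (Hypothetical helper; not the paper's method.)
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0

    # Group answers into bins by confidence; 1.0 falls into the last bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        # Weight each bin's confidence/accuracy gap by its share of answers.
        ece += (len(bucket) / n) * abs(avg_conf - accuracy)
    return ece


# Made-up data: a model that is 90% confident but right only 1 time in 4.
overconfident = expected_calibration_error(
    [0.9, 0.9, 0.9, 0.9], [True, False, False, False]
)
print(round(overconfident, 2))  # 0.65
```

A score near 0 means stated confidence matches observed accuracy. A large score, as in the made-up example, corresponds to the overconfidence described above.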

Implications for Legal Practice

The implications are significant. While the use of LLMs in legal settings presents opportunities for making legal advice more accessible, these technologies are not yet reliable enough to be used unsupervised, especially by those less versed in legal procedures. The research thus calls for cautious adoption of LLMs in the legal domain and emphasizes that even skilled attorneys need to remain vigilant while using these tools.

Future Directions for Research and Use

The paper's findings underscore that combating legal hallucinations in LLMs is not only an empirical challenge but also a normative one. Developers must decide which kind of inconsistency to minimize (with the training corpus, with the user's input, or with external facts) and communicate these decisions clearly.

As a way forward, developers must make informed choices about how their models reconcile these inherent conflicts. Users, whether legal professionals or not, should be aware of these dynamics and deploy LLMs with a critical eye, consistently verifying the accuracy of generated legal text and how much confidence it deserves. Until these challenges are addressed, the full potential of LLMs to augment legal research and democratize access to justice will remain unrealized.