Introduction

Documents such as forms and contracts make up a substantial share of enterprise data. Their meaning depends not only on the text they contain but also on how that text is arranged on the page. Complex layouts and varied formats make such documents hard to understand computationally. Document AI (DocAI) has made significant progress on tasks like extraction and classification, but accuracy in real-world applications still falls short. Conventional LLMs, such as GPT or BERT, generally accept only text input and struggle with multimodal data, such as documents that combine text with visual layout cues. Multimodal LLMs that take both visual and textual information into account typically rely on complex image encoders, which can be resource-intensive.

The DocLLM Framework

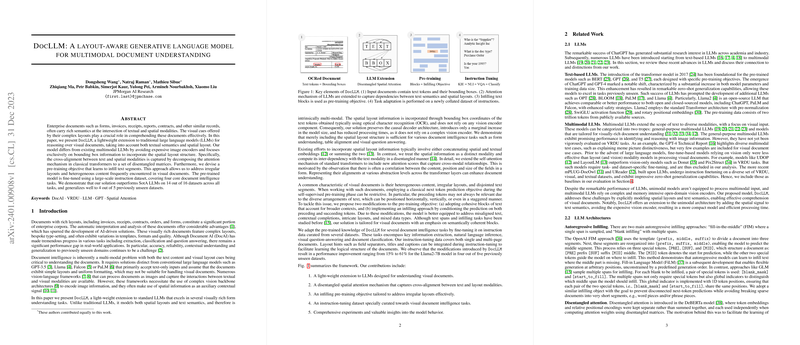

The paper presents DocLLM, a lightweight extension of LLMs for multimodal document understanding. The model incorporates spatial layout through bounding box data, capturing the cross-modal alignment between text and layout without the need for image encoders. By extending the standard transformer attention mechanism to account for spatial information, DocLLM disentangles textual semantics from spatial structure, so it can focus on either one where that is more informative.
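
The extended attention described above can be illustrated with a small sketch. It is not the paper's implementation: it assumes that each token already has separate query and key vectors for its text and for its bounding box, and that the attention score is a weighted sum of the four text/box pairings. The function name and the weights `lam_ts`, `lam_st`, and `lam_ss` are hypothetical names chosen for this example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def disentangled_attention(q_text, k_text, q_box, k_box, values,
                           lam_ts=1.0, lam_st=1.0, lam_ss=1.0):
    """Causal attention whose scores mix text and bounding-box vectors.

    All inputs are lists of per-token vectors. The lam_* weights are
    assumptions of this sketch; setting all three to 0 recovers plain
    text-only attention.
    """
    d = len(q_text[0])
    out = []
    for i in range(len(values)):
        scores = []
        for j in range(i + 1):  # causal mask: token i attends to tokens 0..i
            s = (dot(q_text[i], k_text[j])             # text  -> text
                 + lam_ts * dot(q_text[i], k_box[j])   # text  -> box
                 + lam_st * dot(q_box[i], k_text[j])   # box   -> text
                 + lam_ss * dot(q_box[i], k_box[j]))   # box   -> box
            scores.append(s / math.sqrt(d))
        weights = softmax(scores)
        out.append([sum(w * values[j][c] for j, w in enumerate(weights))
                    for c in range(len(values[0]))])
    return out
```

Because the text and box terms are kept separate, the spatial contribution can be scaled or switched off without touching the text pathway, which is the practical meaning of "disentangled" here. How bounding boxes are turned into vectors is left out of the sketch.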

The pre-training objective is also adapted to the particular traits of visual documents. Instead of relying only on conventional token-by-token prediction, DocLLM is trained to predict blocks of text given both the preceding and the succeeding context, which is closer to the way humans process information in documents.
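
As a rough illustration of block-level prediction, the sketch below builds one training example from a document that has already been split into text blocks. It is an assumption-laden toy, not the paper's data pipeline: the function name and the `<mask_k>` and `<eob>` sentinel tokens are hypothetical, and the choice of which blocks to mask is left to the caller.

```python
def build_infilling_example(blocks, masked_indices):
    """Turn a list of text blocks into (context, targets) for block infilling.

    blocks: list of token lists, one per text block in reading order.
    masked_indices: indices of the blocks the model should reconstruct.
    Sentinel names are illustrative only.
    """
    masked = sorted(set(masked_indices))
    context, targets = [], []
    for idx, block in enumerate(blocks):
        if idx in masked:
            sentinel = f"<mask_{masked.index(idx)}>"
            context.append(sentinel)            # placeholder left in the context
            targets.extend([sentinel] + block + ["<eob>"])  # block to predict
        else:
            context.extend(block)               # visible on both sides of the gap
    return context, targets


blocks = [["Invoice", "No."], ["12345"], ["Total", "due"]]
context, targets = build_infilling_example(blocks, [1])
print(context)  # ['Invoice', 'No.', '<mask_0>', 'Total', 'due']
print(targets)  # ['<mask_0>', '12345', '<eob>']
```

The model would see `context` followed by `targets` and be trained to generate the target tokens, so the blocks before and after the gap are both available when the masked block is predicted.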

DocLLM is then instruction-tuned on a large dataset covering multiple document intelligence tasks, with layout hints such as separators and captions included in the instructions to improve performance. The experimental results show that DocLLM outperforms state-of-the-art (SotA) models on a range of document analysis tasks and generalizes well to previously unseen datasets.

Experiments and Results

The model is evaluated from two perspectives: performance on the same types of documents with different data splits, and generalization to entirely unseen datasets. In both settings, DocLLM performs robustly and surpasses comparable models on many tasks.

DocLLM is compared against other leading models, including baselines such as Llama and GPT-4, and the results indicate that its architecture is beneficial across document types and tasks. Notably, its ability to handle documents that combine layout and text information, which comes from its spatial attention mechanism, makes it a favorable choice for enterprises dealing with diverse document types.

Ablation Studies

Ablation studies examine how much each component of DocLLM contributes. They measure the added value of spatial attention and confirm that incorporating layout through disentangled attention improves the model's understanding. They also compare the block infilling objective against traditional causal learning to test the effectiveness of DocLLM's pre-training approach. Finally, the predictive performance of the causal decoder configuration supports the chosen architecture for downstream tasks.

Conclusion and Future Work

DocLLM combines awareness of complex spatial layouts with the language understanding LLMs are known for. Its design avoids the complex vision encoders found in many multimodal LLMs while providing comparable, if not superior, performance. The paper highlights the model's potential applications and suggests future enhancements, such as incorporating vision into the DocLLM framework in a lightweight way, to extend its reach within document intelligence.