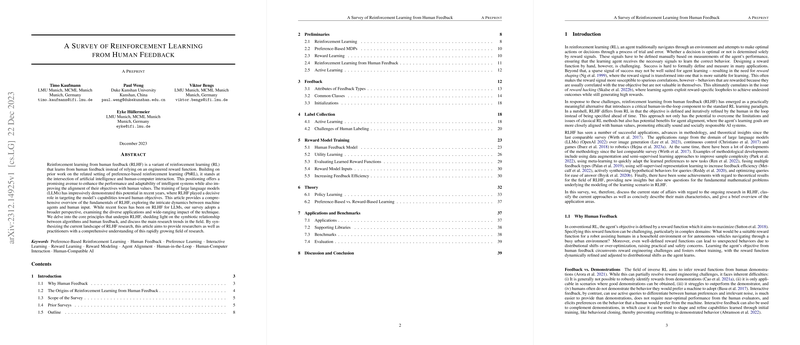

Summary of Reinforcement Learning from Human Feedback (RLHF)

Introduction

Reinforcement learning from human feedback (RLHF) is a variant of reinforcement learning (RL) that learns agent behavior from human feedback instead of a traditional, engineered reward function. The field sits at the intersection of AI and human-computer interaction and aims to align agent objectives more closely with human preferences and values. A prominent application is the training of large language models (LLMs) on human-aligned objectives.

Feedback Mechanisms

Feedback types in RLHF differ in information content and complexity. They can be classified by attributes including arity (unary, binary, n-ary), involvement (passive, active, co-generative), and intent (evaluative, instructive, descriptive, literal). Binary comparisons and rankings are common forms of feedback, but critiques, importance indicators, and corrections give humans further ways to express preferences. Interaction methods such as emergency stops and feature traces provide additional feedback modalities.
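The three attributes named above can be written down as a small data structure. The following is a minimal sketch: the class names and the example classification of a binary comparison are illustrative assumptions, not definitions taken from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Arity(Enum):
    UNARY = "unary"
    BINARY = "binary"
    N_ARY = "n-ary"


class Involvement(Enum):
    PASSIVE = "passive"
    ACTIVE = "active"
    CO_GENERATIVE = "co-generative"


class Intent(Enum):
    EVALUATIVE = "evaluative"
    INSTRUCTIVE = "instructive"
    DESCRIPTIVE = "descriptive"
    LITERAL = "literal"


@dataclass(frozen=True)
class FeedbackType:
    """Describes a feedback type by the three attributes listed above."""
    name: str
    arity: Arity
    involvement: Involvement
    intent: Intent


# Assumed classification for illustration: the labeler is actively queried
# and judges which of two options is better.
BINARY_COMPARISON = FeedbackType(
    name="binary comparison",
    arity=Arity.BINARY,
    involvement=Involvement.ACTIVE,
    intent=Intent.EVALUATIVE,
)
```

Making the attributes explicit like this lets feedback-handling code dispatch on them, for example routing all evaluative feedback to a reward learner.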

Active Learning and Label Collection

Active learning is critical for efficient RLHF because it lets the learner query human feedback selectively. Query selection can weigh factors such as uncertainty, query simplicity, trajectory quality, and labeler reliability. Psychological considerations, including labeler biases and the relationship between researcher goals and labeler responses, also strongly affect how well preferences can be elicited. Understanding human psychology helps in designing interactions that yield informative responses.
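As one illustration of uncertainty-based query selection, the sketch below picks the candidate pair on which an ensemble of reward models disagrees most. It assumes a Bradley-Terry preference model and a toy ensemble of linear scorers; `preference_prob`, `select_query`, and the ensemble itself are hypothetical names for this example, and ensemble disagreement is only one of several possible uncertainty measures.

```python
import math
from statistics import pvariance


def preference_prob(r_a: float, r_b: float) -> float:
    """Bradley-Terry probability that option A is preferred over option B."""
    return 1.0 / (1.0 + math.exp(r_b - r_a))


def select_query(candidates, ensemble):
    """Return the pair whose predicted preference varies most across the ensemble."""
    def disagreement(pair):
        a, b = pair
        probs = [preference_prob(model(a), model(b)) for model in ensemble]
        return pvariance(probs)
    return max(candidates, key=disagreement)


# Toy ensemble (assumption): each reward model scores a 1-D "segment" linearly.
ensemble = [lambda x, w=w: w * x for w in (-1.0, 0.5, 2.0)]
candidates = [(0.0, 0.1), (1.0, 1.0), (3.0, -3.0)]

best = select_query(candidates, ensemble)
print(best)  # the pair the ensemble is least sure about
```

Here the pair `(3.0, -3.0)` is selected, because the ensemble members predict opposite preferences for it, while they agree on the other two pairs.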

Reward Model Training

Training a reward model in RLHF involves several components: choosing an appropriate human feedback model, learning utilities from the feedback, and evaluating the learned reward function. Approaches range from empirical risk minimization to Bayesian methods and can incorporate human-specific rationality coefficients and alternative notions of utility.
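A minimal sketch of the empirical risk minimization route, assuming a Bradley-Terry feedback model with a rationality coefficient `beta` and a linear reward over hand-picked features. The function names, the feature vectors, and the hyperparameters are assumptions made for this example.

```python
import math


def bt_prob(r_a: float, r_b: float, beta: float = 1.0) -> float:
    """Probability that A is preferred; larger beta models a more consistent labeler."""
    return 1.0 / (1.0 + math.exp(-beta * (r_a - r_b)))


def reward(w, features):
    """Linear reward: dot product of weights and features."""
    return sum(wi * x for wi, x in zip(w, features))


def fit_linear_reward(comparisons, dim, beta=1.0, lr=0.5, epochs=200):
    """Minimize the cross-entropy loss over comparisons (feat_a, feat_b, label).

    label is 1 if A was preferred and 0 if B was preferred.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for fa, fb, y in comparisons:
            p = bt_prob(reward(w, fa), reward(w, fb), beta)
            # Gradient of the negative log-likelihood with respect to w.
            for i in range(dim):
                grad[i] += beta * (p - y) * (fa[i] - fb[i])
        w = [wi - lr * g / len(comparisons) for wi, g in zip(w, grad)]
    return w


# Toy data (assumption): the labeler consistently prefers more of feature 0.
data = [
    ([1.0, 0.0], [0.0, 1.0], 1),
    ([0.0, 1.0], [1.0, 0.0], 0),
    ([2.0, 1.0], [1.0, 1.0], 1),
]
w = fit_linear_reward(data, dim=2)
```

After fitting, the weight on feature 0 exceeds the weight on feature 1, so the learned reward reproduces the labeler's preferences on the training comparisons. A Bayesian treatment would instead maintain a distribution over `w`.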

Increasing Feedback Efficiency

Improving feedback efficiency is crucial for RLHF. Techniques include leveraging foundation models, initializing the reward model through meta-learning or transfer learning, and training it with self-supervised or semi-supervised objectives. Data augmentation and the active generation of informative experiences further improve learning efficiency.
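One way semi-supervised training can stretch a small label budget is confidence-based pseudo-labeling: unlabeled pairs receive a label from the current reward model only when its predicted preference is confident enough. The sketch below assumes a Bradley-Terry preference model; `pseudo_label`, the threshold of 0.9, and the toy reward function are hypothetical choices for illustration.

```python
import math


def pseudo_label(unlabeled_pairs, reward_fn, threshold=0.9):
    """Label pairs (a, b) whose predicted preference confidence meets the threshold.

    Returns a list of (a, b, label) with label 1 if A is predicted preferred, else 0.
    """
    labeled = []
    for a, b in unlabeled_pairs:
        p_a = 1.0 / (1.0 + math.exp(reward_fn(b) - reward_fn(a)))
        if max(p_a, 1.0 - p_a) >= threshold:
            labeled.append((a, b, 1 if p_a >= 0.5 else 0))
    return labeled


# Toy reward model (assumption): reward grows linearly with a 1-D feature.
reward_fn = lambda x: 2.0 * x
pairs = [(0.0, 0.1), (2.0, 0.0), (-1.0, 1.5)]

extra = pseudo_label(pairs, reward_fn)
```

The first pair is skipped because the model is close to indifferent between its two options; the other two are added to the training set with the model's own predicted label, at no cost in human queries.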

Benchmarks and Evaluation

Evaluating RLHF approaches is challenging due to the involvement of human feedback and the absence of clear ground-truth task specifications. Benchmarks like B-Pref and MineRL BASALT offer standardized means to measure performance, addressing issues in reward learning evaluation. Libraries like imitation, APReL, and POLAR provide foundational tools for RLHF research, facilitating experimentation with various methods.

Discussion and Future Directions

The field of RLHF is growing rapidly. Current work explores new methods and addresses challenges such as offline preference-based reward learning and more complex objective functions. Benchmarks and frameworks that support this research continue to evolve, paving the way for methods that handle the complexity and variability of human feedback effectively. Advances in theory and practice point toward more robust algorithms and more efficient use of human feedback.