Analysis of "Optimizing Distributed Training on Frontier for LLMs"

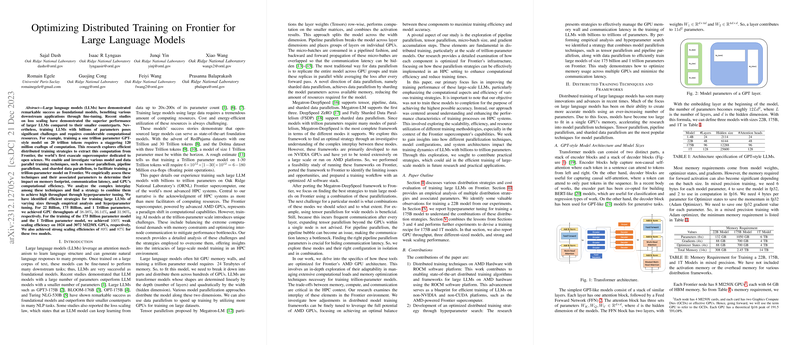

The paper "Optimizing Distributed Training on Frontier for LLMs," by a team at Oak Ridge National Laboratory and Université Paris-Saclay, addresses the substantial computational challenges of training large language models (LLMs) on exascale supercomputers. It focuses on making efficient use of Frontier, the world's first exascale supercomputer and a system dedicated to open science, to train models ranging from billions to more than a trillion parameters.

Key Contributions

The research analyzes distributed training strategies for LLMs on Frontier's AMD GPUs, with particular emphasis on adapting existing distributed training frameworks to this architecture. The main factors and outcomes are:

- Distributed Training Techniques:

  - The paper explores tensor parallelism, pipeline parallelism, and sharded data parallelism to handle the memory and compute demands of trillion-parameter models, which far exceed what a single GPU can hold.

  - These strategies are combined into 3D parallelism using Megatron-DeepSpeed, adapted for Frontier's AMD GPUs, with the goal of maximizing GPU throughput while reducing memory footprint and communication overhead.

- Empirical Performance:

  - The researchers achieved GPU throughputs of 38.38%, 36.14%, and 31.96% of theoretical peak for the 22-billion, 175-billion, and 1-trillion-parameter models, respectively. Throughput declines only moderately as model size grows by nearly two orders of magnitude.

- Scalability Insights:

  - Both weak and strong scaling were evaluated. The runs showed 100% weak scaling efficiency at large GPU counts, and strong scaling efficiencies of 89% and 87% for the 175-billion and 1-trillion-parameter models, respectively.
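The throughput and scaling figures above are ratios. The sketch below shows how such metrics are commonly computed; the 6 x parameters FLOPs-per-token approximation, the function names, and all example inputs are illustrative assumptions, not the paper's exact accounting or data.

```python
def model_flops_per_second(n_params: float, tokens_per_second: float) -> float:
    """Approximate training FLOP/s as ~6 FLOPs per parameter per token.

    Assumption: the 6 * N rule (forward + backward) ignores attention FLOPs
    and activation recomputation, so it understates the work actually executed.
    """
    return 6.0 * n_params * tokens_per_second


def fraction_of_peak(achieved_flops: float, n_gpus: int, peak_flops_per_gpu: float) -> float:
    """Achieved FLOP/s divided by the aggregate theoretical peak of all GPUs."""
    return achieved_flops / (n_gpus * peak_flops_per_gpu)


def weak_scaling_efficiency(base_gpus: int, base_throughput: float,
                            gpus: int, throughput: float) -> float:
    """Work per GPU is fixed, so ideal throughput grows linearly with GPU count."""
    return (throughput / gpus) / (base_throughput / base_gpus)


def strong_scaling_efficiency(base_gpus: int, base_time: float,
                              gpus: int, elapsed: float) -> float:
    """Total work is fixed, so ideal time per step shrinks linearly with GPU count."""
    return (base_time * base_gpus) / (elapsed * gpus)


# Hypothetical inputs, chosen only to illustrate the formulas.
print(strong_scaling_efficiency(1024, 100.0, 2048, 55.0))  # ~0.909
print(weak_scaling_efficiency(8, 800.0, 64, 6400.0))       # 1.0
```

Weak scaling holds the per-GPU workload constant, so 100% means per-GPU throughput did not drop as GPUs were added; strong scaling holds the total workload constant, so it also captures growing communication relative to computation.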

Methodology and Optimization Techniques

The paper examines in detail how the different distributed training techniques and their parameters interact, in order to identify the best-performing configurations. Key observations include:

- Keeping tensor parallelism within a single node, because tensor-parallel communication across nodes incurs high overhead.

- Using pipeline parallelism efficiently by keeping the pipeline bubble small, which requires a large batch (many micro-batches per pipeline) and a suitable schedule such as 1F1B.

- Using sharded data parallelism through DeepSpeed's ZeRO optimizers to reduce per-GPU memory by partitioning training state across data-parallel ranks.
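The tensor-parallel placement rule can be illustrated with a rank-to-coordinate mapping. This sketch assumes 8 GPUs per node (each Frontier node has four MI250X accelerators with two GCDs each), ranks packed node by node, and an ordering in which the tensor-parallel index varies fastest, then data-parallel, then pipeline stage. These are illustrative assumptions rather than Megatron-DeepSpeed's exact implementation, and the helper names are hypothetical.

```python
from dataclasses import dataclass

# Assumption: 8 GPUs per node (Frontier: 4 MI250X x 2 GCDs), ranks packed node by node.
GPUS_PER_NODE = 8


@dataclass(frozen=True)
class Coord:
    tp: int  # position inside the tensor-parallel group
    dp: int  # data-parallel replica index
    pp: int  # pipeline stage


def rank_to_coord(rank: int, tp: int, dp: int, pp: int) -> Coord:
    """Assumed layout: tp varies fastest, then dp, then pp (outermost)."""
    if not 0 <= rank < tp * dp * pp:
        raise ValueError("rank outside world size")
    return Coord(tp=rank % tp, dp=(rank // tp) % dp, pp=rank // (tp * dp))


def tensor_parallel_groups(tp: int, dp: int, pp: int) -> list[list[int]]:
    """With tp innermost, each tensor-parallel group is tp consecutive ranks."""
    world = tp * dp * pp
    return [list(range(start, start + tp)) for start in range(0, world, tp)]


def tp_groups_within_node(tp: int, dp: int, pp: int,
                          gpus_per_node: int = GPUS_PER_NODE) -> bool:
    """True if no tensor-parallel group spans more than one node."""
    return all(len({r // gpus_per_node for r in group}) == 1
               for group in tensor_parallel_groups(tp, dp, pp))


print(tp_groups_within_node(8, 4, 2))   # True: each TP group fills one node
print(tp_groups_within_node(16, 2, 2))  # False: TP traffic would cross nodes
print(rank_to_coord(13, tp=4, dp=2, pp=2))  # Coord(tp=1, dp=1, pp=1)
```

Placing the tensor-parallel dimension innermost means its frequent, latency-sensitive collectives stay on intra-node links whenever the tensor-parallel degree divides the GPUs per node, while pipeline and data-parallel traffic, which is less frequent, crosses the network.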
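The link between batch size and pipeline efficiency can be seen from the standard bubble estimate used in the pipeline-parallelism literature. With p stages, m micro-batches per iteration, and equal per-stage compute time, a non-interleaved schedule (GPipe or 1F1B) leaves each stage idle for a fraction (p - 1)/(m + p - 1) of the iteration. Non-interleaved 1F1B mainly caps in-flight activations at about p micro-batches rather than shrinking this bubble; an interleaved variant with v model chunks per GPU reduces the idle time by a factor of v. The function names and configuration below are hypothetical.

```python
def num_microbatches(global_batch: int, micro_batch: int, dp: int) -> int:
    """Micro-batches each pipeline processes per optimizer step."""
    per_replica, rem = divmod(global_batch, dp)
    if rem or per_replica % micro_batch:
        raise ValueError("batch sizes must divide evenly")
    return per_replica // micro_batch


def bubble_fraction(p: int, m: int, v: int = 1) -> float:
    """Idle fraction of an iteration for p stages and m micro-batches.

    Assumes equal compute per stage. v > 1 models an interleaved schedule with
    v model chunks per GPU, which shrinks the (p - 1) idle slots to (p - 1) / v.
    """
    idle = (p - 1) / v
    return idle / (m + idle)


# Hypothetical configuration: 8 pipeline stages, 16 data-parallel replicas.
for global_batch in (128, 512, 2048):
    m = num_microbatches(global_batch, micro_batch=1, dp=16)
    print(global_batch, m, round(bubble_fraction(8, m), 3))
# The bubble falls from ~0.467 (m = 8) to ~0.052 (m = 128).
```

This is why deep pipelines push toward large global batches, and why large batches in turn raise the loss-divergence concern discussed later.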
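The memory savings from ZeRO can be estimated with the model-state accounting from the original ZeRO paper for mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for optimizer state (fp32 weights, momentum, variance). Stage 1 shards the optimizer state across data-parallel ranks, stage 2 also shards gradients, and stage 3 also shards parameters. This minimal sketch is not DeepSpeed's own estimator: it ignores activations, communication buffers, and fragmentation, and under 3D parallelism `n_params` should be the parameters held by one tensor/pipeline shard.

```python
def zero_model_state_bytes(n_params: float, dp: int, stage: int, k: int = 12) -> float:
    """Per-GPU bytes for parameters, gradients, and optimizer states.

    Mixed-precision Adam accounting: 2 B/param fp16 weights, 2 B/param fp16
    gradients, k = 12 B/param optimizer state. Each ZeRO stage divides one
    more of these terms by the data-parallel degree dp.
    """
    if stage not in (0, 1, 2, 3):
        raise ValueError("ZeRO stage must be 0, 1, 2, or 3")
    params, grads, optim = 2.0 * n_params, 2.0 * n_params, float(k) * n_params
    if stage >= 1:
        optim /= dp   # stage 1: shard optimizer state
    if stage >= 2:
        grads /= dp   # stage 2: also shard gradients
    if stage >= 3:
        params /= dp  # stage 3: also shard parameters
    return params + grads + optim


for stage in range(4):
    gb = zero_model_state_bytes(7.5e9, dp=64, stage=stage) / 1e9
    print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For 7.5 billion parameters and 64 data-parallel ranks this gives 120.0 GB without sharding and about 31.4, 16.6, and 1.9 GB for stages 1 to 3, matching the worked example in the ZeRO paper.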

Software Porting and Framework Adaptation

A central part of the research is porting the Megatron-DeepSpeed framework, originally developed for NVIDIA GPUs, to Frontier's AMD GPUs using the ROCm platform. This required:

- Converting CUDA code to HIP code that runs on ROCm.

- Overcoming problems with just-in-time (JIT) compilation on ROCm.

Implications and Future Directions

The findings are significant for LLM training on non-NVIDIA, non-CUDA platforms. They are also relevant to other systems, such as the Intel-powered Aurora supercomputer, which may benefit from similar optimization strategies. By showing that advanced distributed training frameworks can be integrated on AMD hardware, the work opens avenues for further research into scalable deep learning on exascale systems.

Looking ahead, two problems remain critical: preventing loss divergence at large batch sizes, and sustaining training performance with smaller per-replica batch sizes. Both are key to reducing time-to-solution for large-model training. More broadly, these lessons may encourage future work on exploiting hardware-specific features and on making training frameworks portable across platforms, which will matter more as LLMs grow larger and more complex.