Introduction

Large Multimodal Models (LMMs) process inputs that combine text and visual content. This evaluation compares two state-of-the-art LMMs, GPT-4V and Gemini, on an online Visual Question Answering (VQA) task built from real user questions posted on the Stack Exchange platform. Because the questions come from actual users, the evaluation tests the general capabilities of the models across several criteria and reflects how well they serve real user needs.

Dataset Overview

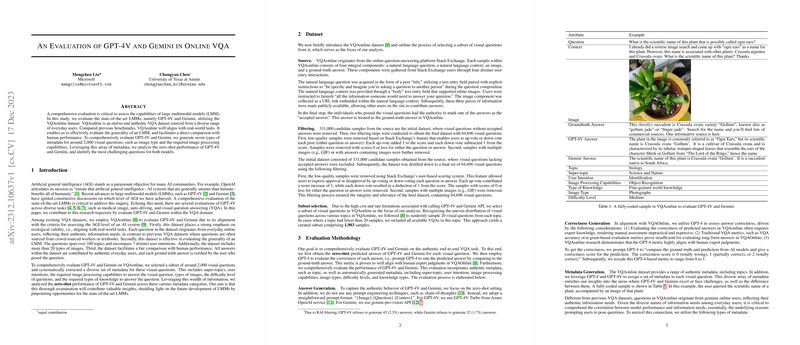

The researchers used the VQAonline dataset, which comprises natural-language questions, contexts, images, and verified answers collected from the Stack Exchange platform. A filtering step focuses the evaluation on higher-quality questions, and a subset was selected because API calls to the evaluated LMMs are expensive and rate-limited. The final evaluation subset contains 1,903 samples and spans a variety of content.

Evaluation Methodology

The methodology measures the zero-shot performance of GPT-4V and Gemini, without advanced prompt-engineering techniques. GPT-4 rates each model-generated answer for correctness against the ground-truth answer, and these ratings are validated by checking how well they agree with human expert judgments. The evaluation also generates metadata for each question, such as the user intention and the image processing capability required, which adds further dimensions to the analysis.
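One way to check how closely automated ratings track human judgments is to compute a raw agreement rate and a chance-corrected statistic such as Cohen's kappa. The sketch below is illustrative only: the summary does not state which agreement measure the paper uses, and the function names and binary correct/incorrect labels are assumptions.

```python
from collections import Counter


def agreement_rate(auto_labels, human_labels):
    """Fraction of samples where the automated and human labels match."""
    if len(auto_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not auto_labels:
        raise ValueError("label lists must not be empty")
    matches = sum(a == h for a, h in zip(auto_labels, human_labels))
    return matches / len(auto_labels)


def cohens_kappa(auto_labels, human_labels):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(auto_labels)
    p_observed = agreement_rate(auto_labels, human_labels)
    auto_counts = Counter(auto_labels)
    human_counts = Counter(human_labels)
    categories = set(auto_counts) | set(human_counts)
    # Expected agreement if the two raters labeled independently.
    p_expected = sum(auto_counts[c] * human_counts[c] for c in categories) / (n * n)
    if p_expected == 1.0:
        # Both raters used a single identical label; agreement is trivially perfect.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical labels (1 = answer judged correct, 0 = incorrect), not real data.
auto = [1, 1, 0, 0]
human = [1, 0, 0, 0]
print(agreement_rate(auto, human))
print(cohens_kappa(auto, human))
```

Kappa is useful alongside the raw rate because two raters who mark most answers the same way by default can show high raw agreement without genuinely agreeing on the hard cases.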

Evaluation Results

The assessment revealed the following insights:

- GPT-4V and Gemini displayed commendable proficiency in domains like language learning and economics, while struggling with topics such as puzzling and LEGO-related queries.

- GPT-4V surpassed Gemini on social science and natural science topics, and was also stronger on non-visual questions, that is, questions that can be answered without image processing.

- Questions tagged under user intention as 'Identification' were difficult for both models, highlighting an area for potential improvement.

- In terms of image processing capabilities, GPT-4V had the most difficulty with feature extraction tasks, whereas Gemini showed a strong command of scene understanding.

- Both models performed worst on questions requiring expert knowledge, possibly indicating a need for more specialized training in domain-specific areas.

- The most challenging image types for both models included "3D Renderings" and "Sheet Music," suggesting a gap in interpreting these image variants.

- As the difficulty level escalated, the performance of both models declined.
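Breakdowns like those above amount to grouping graded answers by a metadata tag and computing accuracy within each group. A minimal sketch follows, assuming each graded sample is a dictionary with a boolean `correct` field and tag fields such as `image_type`. The field names and the toy records are hypothetical and are not results from the paper.

```python
from collections import defaultdict


def accuracy_by_tag(records, tag_field):
    """Return {tag value: fraction of records marked correct} for one tag field."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        tag = record[tag_field]
        totals[tag] += 1
        correct[tag] += int(record["correct"])
    return {tag: correct[tag] / totals[tag] for tag in totals}


# Toy records for illustration only (hypothetical, not real evaluation data).
toy_records = [
    {"image_type": "Sheet Music", "correct": False},
    {"image_type": "Sheet Music", "correct": True},
    {"image_type": "Photo", "correct": True},
    {"image_type": "Photo", "correct": True},
]

for tag, acc in sorted(accuracy_by_tag(toy_records, "image_type").items()):
    print(f"{tag}: {acc:.2f}")
```

The same function can be reused for any other tag, such as topic, user intention, or difficulty level, by passing a different `tag_field`.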

Conclusion

This investigation analyzes the capabilities and limitations of GPT-4V and Gemini on an authentic, user-generated VQA dataset. The findings show where these LMMs excel and where they could be improved. While the analysis confirms that the models handle a range of topics and questions well, it also indicates a need for improvement on complex images and on questions requiring expert knowledge. Future work aims to refine the analysis methods and incorporate additional datasets to deepen the understanding of LMM performance.