The paper "How to Configure Good In-Context Sequence for Visual Question Answering" investigates how to improve In-Context Learning (ICL) for Visual Question Answering (VQA) with Large Vision-Language Models (LVLMs). Although LVLMs show potential for ICL, their performance is often suboptimal when the in-context sequence is built with simple configurations such as random sampling. The research explores diverse in-context configurations that make ICL more effective and, in doing so, reveals insights into the latent properties of LVLMs.

The authors conduct exhaustive experiments on three VQA datasets: VQAv2, VizWiz, and OK-VQA, using retrieval-based demonstration configurations and manipulating the in-context sequence. They propose several retrieval strategies, such as:

- Retrieving via Similar Image (SI): This strategy uses images similar to the query to form the in-context sequence, utilizing CLIP embeddings for similarity measurement.

- Retrieving via Similar Question (SQ): This method utilizes the question text to retrieve similar examples.

- Retrieving via Similar Question-Answer (SQA): A strategy that retrieves on both questions and answers. Its practical use is limited because it requires prior knowledge of the query's answer.
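The retrieval strategies above share one mechanism: embed the query, then rank the support set by similarity. The following is a minimal sketch of that idea, not the authors' implementation. The `retrieve` helper, the field names `img_emb` and `q_emb`, and the toy two-dimensional vectors are assumptions made for illustration; in practice the embeddings would come from an encoder such as CLIP.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def retrieve(query_emb, support, key, k):
    """Return the k support examples whose `key` embedding is closest to query_emb."""
    ranked = sorted(support, key=lambda ex: cosine(query_emb, ex[key]), reverse=True)
    return ranked[:k]

# Toy support set (hypothetical values); real embeddings would be high-dimensional.
support = [
    {"id": "a", "img_emb": [1.0, 0.0], "q_emb": [0.0, 1.0]},
    {"id": "b", "img_emb": [0.9, 0.1], "q_emb": [1.0, 0.0]},
    {"id": "c", "img_emb": [0.0, 1.0], "q_emb": [0.7, 0.7]},
]

# SI ranks by image embedding, SQ by question embedding.
si = retrieve([1.0, 0.0], support, "img_emb", k=2)
sq = retrieve([1.0, 0.0], support, "q_emb", k=2)
print([ex["id"] for ex in si], [ex["id"] for ex in sq])
```

The same function covers SI and SQ by switching the `key` argument; an SQA variant would rank on an embedding of the question and answer together, which is why it needs the answer in advance.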

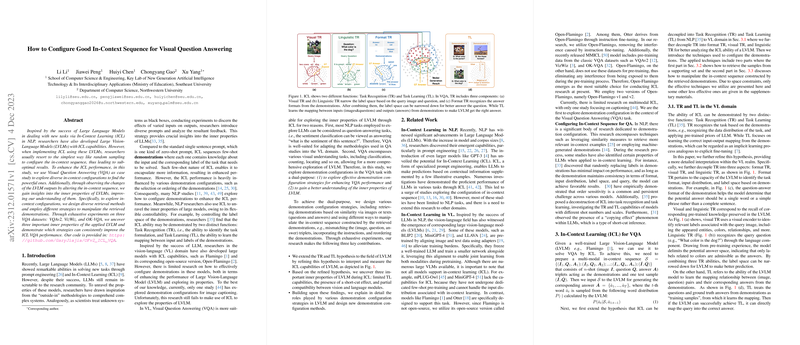

The manipulation of the sequence involves techniques like mismatching elements within the demonstrations and reordering demonstrations based on similarity in a different modality. The paper uncovers several insightful findings regarding LVLMs:

- Task Recognition (TR) vs. Task Learning (TL): The research observes that TR, the identification of the task format from the demonstrations, plays a more critical role in LVLMs than TL, the learning of input-output mappings from them. Even when examples are altered, performance remains largely intact, indicating a stronger reliance on TR.

- Short-cut Effect: The models exhibit tendencies for short-cut inference, often copying responses from similar question pairs in the demonstrations rather than relying on learned mappings, which can lead to errors.

- Compatibility Issues between Vision and Language Modules: The paper identifies a disparity in how vision and language encoders are weighted, revealing that the language component often dominates due to misalignment, leading to a biased reliance on linguistic over visual cues.
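The mismatching manipulation mentioned earlier, which underlies the TR versus TL observation, can be illustrated with a small sketch. The exact procedure used in the paper is not given here, so the rotation scheme, the `mismatch_answers` name, and the demonstration fields below are assumptions for illustration only.

```python
def mismatch_answers(demos):
    """Give each demonstration the answer of the next one (rotation by one).

    The image and question stay in place, so the task format is preserved
    while the input-output mapping is broken. Note that demos sharing the
    same answer can still end up correctly paired by chance.
    """
    if len(demos) < 2:
        return [dict(d) for d in demos]
    answers = [d["answer"] for d in demos]
    rotated = answers[1:] + answers[:1]
    return [{**d, "answer": a} for d, a in zip(demos, rotated)]

# Hypothetical demonstrations; file names and contents are placeholders.
demos = [
    {"image": "img1.jpg", "question": "What color is the bus?", "answer": "red"},
    {"image": "img2.jpg", "question": "How many dogs are there?", "answer": "two"},
    {"image": "img3.jpg", "question": "Is it raining?", "answer": "no"},
]

mismatched = mismatch_answers(demos)
for d in mismatched:
    print(d["question"], "->", d["answer"])
```

If accuracy with the mismatched sequence stays close to accuracy with the original one, the model is mostly using the demonstrations to recognize the task format and is not learning the mapping from them.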

Despite these challenges, the paper identifies strategies that improve ICL performance:

- Utilizing Similar Demonstrations: Selecting demonstrations that are similar to the query in both visual and textual modalities yields consistent improvement and counters the reliance on short-cuts.

- Incorporation of Instructional Prompts: Particularly for more linguistically advanced models such as Open-Flamingo version 2, providing detailed instructions enhances performance, especially in scenarios with limited demonstrations.

- Pseudo Answer Utilization: Employing pseudo answers can improve performance by providing a clearer input-output mapping, with the greatest effect in scenarios requiring external knowledge, such as the OK-VQA dataset.
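To make the instructional-prompt strategy concrete, here is a hedged sketch of how an instruction and the selected demonstrations might be assembled into one text prompt. The `<image>` and `<|endofchunk|>` markers and the "Question / Short answer" template are assumed here, modeled on an interleaved image-text format in the style of Open-Flamingo; the `build_prompt` helper and the instruction wording are hypothetical.

```python
def build_prompt(demos, query_question, instruction=""):
    """Assemble an interleaved text prompt for VQA in-context learning.

    Each `<image>` marker stands for one image; the images themselves are
    assumed to be passed to the model separately, in the same order.
    """
    parts = []
    if instruction:
        parts.append(instruction.strip() + "\n")
    for d in demos:
        parts.append(
            f"<image>Question: {d['question']} Short answer: {d['answer']}<|endofchunk|>"
        )
    # The query is left open so that the model generates the answer.
    parts.append(f"<image>Question: {query_question} Short answer:")
    return "".join(parts)

instruction = "Answer each question about the image with a short phrase."
shots = [
    {"question": "What color is the bus?", "answer": "red"},
    {"question": "How many dogs are there?", "answer": "two"},
]
prompt = build_prompt(shots, "What is the man holding?", instruction)
print(prompt)
```

With few demonstrations the instruction carries most of the task description, which fits the observation above that instructions help most when demonstrations are limited.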

In conclusion, the paper advances the understanding of how in-context demonstrations and configurations can be leveraged to enhance LVLM capabilities in VQA tasks. Although the study focuses on Open-Flamingo as its principal model, the methodologies are applicable to a broader spectrum of LVLMs and contribute to refining the application of ICL in vision-language contexts.