An Overview of GeoChat: A Grounded Vision-Language Model for Remote Sensing

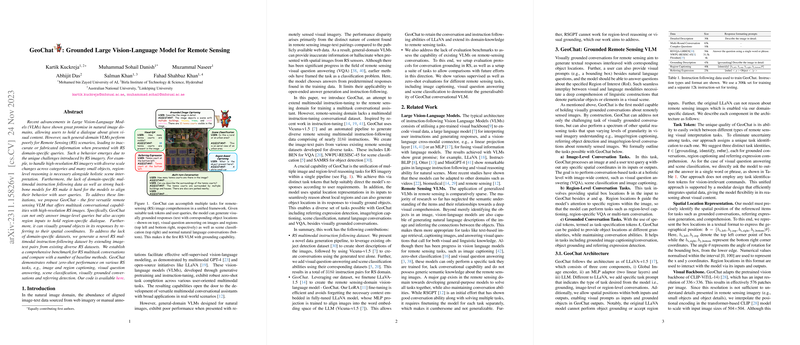

The paper presents GeoChat, a large vision-language model (VLM) designed for remote sensing (RS) imagery that uses conversational interaction to support decision-making. General-domain VLMs, despite their success on natural images, typically struggle with RS scenes because high-resolution RS imagery contains objects at widely varying scales, many of them small. Handling such imagery requires region-level reasoning alongside holistic scene interpretation.

Methodological Contributions

GeoChat is presented as the first grounded VLM for RS, capable of multitask conversation about RS images. The model accepts both image-level and region-specific inputs, which enables spatially grounded dialogue, and rests on several key methodological components:

- RS Instruction Dataset: The paper introduces a novel RS multimodal dataset of 318k image-instruction pairs, derived by extending and integrating existing RS datasets. This dataset fills the gap in domain-specific data for multimodal instruction following.

- Model Architecture: GeoChat is built on the LLaVA-1.5 framework, integrating a CLIP-ViT backbone with an LLM to handle visual and textual inputs. It adds task-specific tokens and a textual representation of spatial locations to steer its multitask behavior.

- Unification of RS Tasks: GeoChat handles multiple RS tasks, including region captioning, visually grounded conversation, and referring object detection, within a single architecture, and shows robust zero-shot performance across them.
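
To make the idea of task-specific tokens and spatial location representation more concrete, the following is a minimal sketch of how a region could be serialized into text and combined with a task token in a prompt. The token strings in `TASK_TOKENS`, the `{<x><y><x><y>}` box syntax, the normalization to a 0-100 integer range, and the helper names `box_to_text` and `build_prompt` are all illustrative assumptions; this overview does not specify GeoChat's exact prompt format.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical task tokens, chosen for illustration only.
TASK_TOKENS = {"[grounding]", "[identify]", "[refer]"}


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def box_to_text(box: Box, width: int, height: int, bins: int = 100) -> str:
    """Serialize a pixel-space box as integers normalized to [0, bins].

    The output syntax is an assumption made for this sketch.
    """
    def norm(value: float, size: int) -> int:
        # Clamp to the image extent, then rescale to the integer range.
        clamped = min(max(value, 0.0), float(size))
        return int(round(clamped / size * bins))

    coords = (
        norm(box.x_min, width),
        norm(box.y_min, height),
        norm(box.x_max, width),
        norm(box.y_max, height),
    )
    return "{" + "".join(f"<{c}>" for c in coords) + "}"


def build_prompt(task_token: str, question: str,
                 box_text: Optional[str] = None) -> str:
    """Prefix a question with a task token and optionally append a region."""
    if task_token not in TASK_TOKENS:
        raise ValueError(f"unknown task token: {task_token!r}")
    parts = [task_token, question]
    if box_text is not None:
        parts.append(box_text)
    return " ".join(parts)


if __name__ == "__main__":
    region = box_to_text(Box(50, 100, 250, 300), width=500, height=400)
    print(build_prompt("[refer]", "What is this object?", region))
```

Expressing coordinates as plain text lets a single language-model decoder both read region inputs and emit region outputs, which is one plausible way a single architecture can cover captioning, grounding, and referring tasks without task-specific heads.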

Evaluation and Benchmarking

GeoChat is evaluated on a range of benchmarks and surpasses general-domain VLMs on RS-specific tasks, with strong zero-shot performance in scene classification, visual question answering (VQA), and grounded dialogue. The paper reports quantitative evaluations and introduces new benchmarks for the RS domain. Noteworthy results include 84.43% classification accuracy on the UCMerced dataset and comparable results on VQA tasks using the RSVQA dataset.
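
As a rough illustration of how a zero-shot classification accuracy such as the figure above can be computed from a conversational model's free-text answers, the sketch below scores predictions by exact match after light normalization. The scoring rule and the helper names `normalize_label` and `zero_shot_accuracy` are assumptions made for this example; the overview does not describe the paper's exact evaluation protocol.

```python
import string
from typing import Sequence


def normalize_label(text: str) -> str:
    """Lowercase a label and trim surrounding whitespace and punctuation."""
    return text.strip().strip(string.punctuation).strip().lower()


def zero_shot_accuracy(predictions: Sequence[str],
                       targets: Sequence[str]) -> float:
    """Return the percentage of predictions that match their target label.

    Matching is exact after normalization, which is an assumed scoring rule.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(
        normalize_label(p) == normalize_label(t)
        for p, t in zip(predictions, targets)
    )
    return 100.0 * correct / len(targets)


if __name__ == "__main__":
    model_answers = ["Airport", " beach.", "forest"]
    ground_truth = ["airport", "beach", "river"]
    print(f"{zero_shot_accuracy(model_answers, ground_truth):.2f}%")
```

Exact matching is strict: a model that answers with a synonym or a full sentence would be counted as wrong, so real evaluations often constrain the answer format in the prompt.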

Implications and Future Directions

GeoChat could substantially improve RS data interpretation by giving detailed, relevant responses to user queries. Its spatially grounded insights into high-resolution RS imagery could streamline tasks ranging from environmental monitoring to disaster management. The architecture and dataset also provide a foundation for future research on RS VLMs: the model can be fine-tuned further or extended with more specialized datasets and refined tasks.

In conclusion, GeoChat is a versatile tool for RS applications that advances VLM research through its grounded, multitask approach. Future work could improve the model's handling of more granular or complex RS tasks and extend it to a wider range of environmental monitoring applications.