Evaluating LLMs on Machine Learning Tasks Using Open-source Libraries

In recent years, LLMs have made substantial progress in code generation. ML-Bench, a benchmark introduced by Liu et al., assesses a capability that earlier benchmarks largely leave untested: leveraging existing functions in open-source libraries to accomplish machine learning tasks, rather than generating code from scratch.

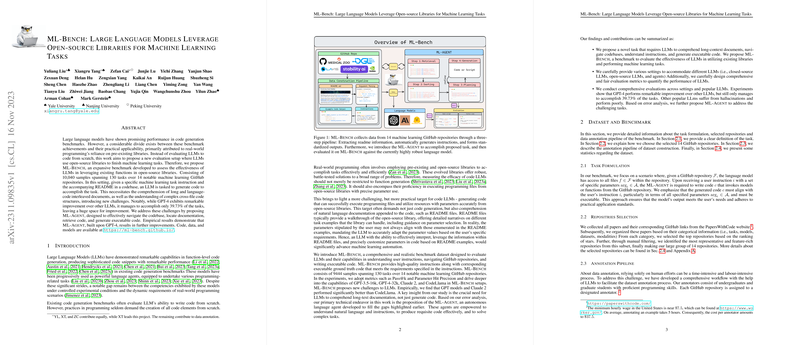

ML-Bench Overview

ML-Bench comprises 10,040 samples spanning 130 tasks across 14 prominent machine learning GitHub repositories. It evaluates how effectively LLMs generate executable code that calls existing open-source functions, a practical necessity in real-world programming that traditional code generation benchmarks often overlook.

GPT-4 outperforms the other evaluated models, including GPT-3.5 and Claude 2, yet it accomplishes only 39.73% of the benchmark tasks. This result leaves substantial room for improvement and highlights how difficult it remains for LLMs to produce working code from documents that mix natural language and code.

Challenges and Approach

The paper identifies two primary challenges for LLMs: comprehending long documents that interleave natural language and code, and handling complex cross-file code structures. To address them, the authors propose ML-Agent, a system built on GPT-4 that autonomously navigates codebases, retrieves relevant documentation, and generates executable code, improving on the results of LLMs used without such an agent.

The authors' evaluation metrics include Pass@k and Parameter Hit Precision, which quantify LLM performance in this new setting. Together, these metrics check that the generated code not only executes successfully but also matches the parameter details specified in the task instructions.
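To make the two metrics concrete, the sketch below shows one way they could be computed. It uses the unbiased Pass@k estimator common in code generation evaluation, 1 - C(n-c, k) / C(n, k) for n samples of which c pass, and a simplified parameter hit check. Both are assumptions: this summary does not give the paper's exact formulas, and `pass_at_k`, `parameter_hit_rate`, and the example command and flags are hypothetical names chosen for illustration.

```python
import shlex
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the chance that at least one of k samples passes.

    n: samples generated for a task, c: samples that executed correctly.
    Assumed formula (common unbiased estimator): 1 - C(n-c, k) / C(n, k).
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every draw of k contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def parameter_hit_rate(command: str, required: dict[str, str]) -> float:
    """Fraction of required flag/value pairs found in a generated command.

    Hypothetical simplification of a parameter hit check: a pair counts
    as hit if it appears as "--flag value" or as "--flag=value".
    """
    if not required:
        raise ValueError("required must not be empty")
    tokens = shlex.split(command)
    hits = 0
    for flag, value in required.items():
        spaced = any(
            tokens[i] == flag and tokens[i + 1] == value
            for i in range(len(tokens) - 1)
        )
        joined = f"{flag}={value}" in tokens
        if spaced or joined:
            hits += 1
    return hits / len(required)


if __name__ == "__main__":
    # Hypothetical task: 10 generations, 3 of which ran correctly.
    print(pass_at_k(n=10, c=3, k=1))
    print(pass_at_k(n=10, c=3, k=5))
    # Hypothetical instruction asking for lr 0.001 and the gpt2 model.
    generated = "python train.py --lr 0.001 --model bert"
    print(parameter_hit_rate(generated, {"--lr": "0.001", "--model": "gpt2"}))
```

Separating the two checks reflects the point made above: a command can execute and still use the wrong parameters, so an execution-based score alone would not capture whether the instruction was followed.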

Empirical Findings

The experimental results confirm that GPT models outperform CodeLlama and other popular LLMs on this benchmark. However, hallucination errors and knowledge gaps remain prevalent in current models, which points to a need for stronger comprehension capabilities. The findings also show that providing models with Oracle Segments (relevant task-related code snippets) significantly boosts performance compared with supplying the full README or segments retrieved with BM25.
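For readers unfamiliar with BM25, the retrieval baseline mentioned above, it ranks text segments by weighted term overlap with a query. The following is a minimal pure-Python sketch of Okapi BM25 scoring over README segments. The tokenizer, the `k1` and `b` defaults, the non-negative IDF variant, and the sample segments are all assumptions for illustration and do not describe the paper's retrieval setup.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens (assumed tokenizer)."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def bm25_rank(query: str, segments: list[str],
              k1: float = 1.5, b: float = 0.75) -> list[int]:
    """Return segment indices ordered from most to least relevant."""
    if not segments:
        raise ValueError("segments must not be empty")
    docs = [tokenize(s) for s in segments]
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of segments containing each term.
    df = Counter(term for d in docs for term in set(d))
    query_terms = set(tokenize(query))

    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # Non-negative IDF variant, an assumption of this sketch.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturation with length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return sorted(range(n_docs), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    # Hypothetical README segments and task instruction.
    readme_segments = [
        "Install the package with pip install",
        "To train the model run python train.py --lr 0.001",
        "License MIT",
    ]
    ranking = bm25_rank("train model with learning rate lr", readme_segments)
    print(ranking)
```

Because BM25 matches on surface terms only, an instruction phrased differently from the README can rank the relevant segment low, which is one plausible reason a hand-picked oracle segment would give the model better context.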

Implications and Future Directions

This research has two implications. Practically, it can guide the development of LLMs that handle the nuances of real-world codebases more robustly. Theoretically, it points future research toward models that better mimic the human ability to build on existing code. The authors suggest that closing these gaps could accelerate machine learning automation and streamline programming workflows.

Future research is likely to target more refined retrieval mechanisms and richer domain-specific knowledge bases. This work also paves the way for further exploration of agent-based LLMs that understand the full context of a repository and interact dynamically with complex code environments.

In conclusion, ML-Bench represents a critical step forward in evaluating code generation capabilities, setting a challenging yet practical benchmark that foregrounds the effective utilization of existing software libraries in LLM-driven programming tasks.